Step-by-Step Guide to Creating a Custom Airflow Operator for Salesforce Integration

Salesforce powers over 150,000 companies worldwide, and combining it with the automation capabilities of Apache Airflow can transform how businesses manage workflows and backups. However, to truly unlock its potential, automating tasks like job triggering and data backups is crucial. Apache Airflow is a powerful tool that can help orchestrate complex workflows across platforms. As organizations scale, creating custom Apache Airflow operators tailored to specific tasks like Salesforce job automation and object backups becomes a game changer. This blog will guide you through building a custom Apache Airflow operator for Salesforce, enabling seamless job triggers and secure data backups while saving time, reducing errors, and ensuring operational efficiency.

Why is this so important?

- 87% of sales teams see a boost in productivity after integrating Salesforce with automated workflows.

- Trusted by 80% of Fortune 500 companies for workflow orchestration, Apache Airflow proves its scalability and reliability.

- 60% of businesses that experience significant data loss close within six months, underscoring the importance of regular backups.

Let’s explore how integrating Apache Airflow with Salesforce can help you automate, protect, and scale your processes more effectively than ever before.

Why a Custom Airflow Operator for Salesforce?

Salesforce offers a powerful API that enables automation for tasks like data exports, job triggers, and object management. However, directly integrating these APIs into an Airflow DAG can be complex and inefficient without a custom operator. A custom operator streamlines this process by providing a reusable, modular solution that reduces repetitive code and enhances maintainability, making your workflows more efficient and easier to manage.

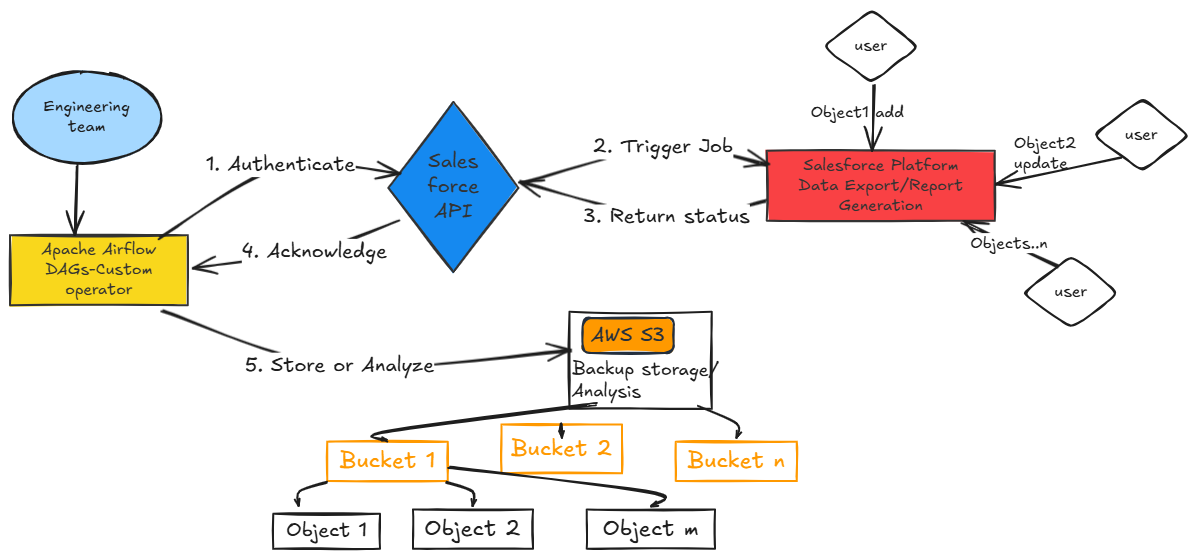

Use Case Overview

We aim to create a custom Airflow operator that:

- Triggers a Salesforce Job Initiate tasks like bulk data exports or report generation through the Salesforce API.

- Backs Up Custom Objects: Retrieve custom object data from Salesforce and securely upload it to a storage service such as AWS S3 for reliable backup.

Prerequisites for Building a Custom Airflow Operator

Before you begin coding, make sure you have the following set up:

- Salesforce API Access: Ensure you have an active Salesforce account with the necessary permissions to access the API.

- Airflow Environment: Your Airflow environment should be configured with the required connections to interact with Salesforce and other services.

- Required Python Packages:

simple-salesforce: This package allows seamless interaction with the Salesforce API.

boto3: Use this package to integrate with AWS S3 for object storage and backup functionality.

Having these prerequisites in place will ensure smooth development and execution of the custom operator.

Creating the Custom Operator

Step 1: Define the Operator

We’ll create a Python class inheriting from BaseOperator. This operator will encapsulate Salesforce API logic for triggering jobs and backing up data.

from airflow.models import BaseOperator

from airflow.utils.decorators import apply_defaults

from simple_salesforce import Salesforce

import boto3

import json

class SalesforceBackupOperator(BaseOperator):

@apply_defaults

def __init__(self,

salesforce_conn_id: str,

object_name: str,

job_type: str,

backup_bucket: str,

aws_conn_id: str,

*args, **kwargs):

super(SalesforceBackupOperator, self).__init__(*args, **kwargs)

self.salesforce_conn_id = salesforce_conn_id

self.object_name = object_name

self.job_type = job_type

self.backup_bucket = backup_bucket

self.aws_conn_id = aws_conn_id

def execute(self, context):

# Step 1: Authenticate with Salesforce

sf = self._get_salesforce_connection()

# Step 2: Trigger the Salesforce Job

self.log.info(f"Triggering {self.job_type} job for {self.object_name}...")

job_id = self._trigger_salesforce_job(sf)

# Step 3: Backup the Salesforce Object

self.log.info(f"Backing up {self.object_name} data...")

object_data = self._fetch_salesforce_object_data(sf)

self._backup_to_s3(object_data)

self.log.info(f"Salesforce job {job_id} completed and data backed up.")

def _get_salesforce_connection(self):

from airflow.hooks.base import BaseHook

conn = BaseHook.get_connection(self.salesforce_conn_id)

return Salesforce(username=conn.login,

password=conn.password,

security_token=conn.extra_dejson.get('security_token'))

def _trigger_salesforce_job(self, sf):

# Example API call to trigger a Salesforce job

response = sf.restful('jobs', method='POST',

data={"jobType": self.job_type,

"object": self.object_name})

return response.get('id')

def _fetch_salesforce_object_data(self, sf):

query = f"SELECT * FROM {self.object_name}"

records = sf.query_all(query)['records']

return records

def _backup_to_s3(self, object_data):

from airflow.providers.amazon.aws.hooks.s3 import S3Hook

s3_hook = S3Hook(aws_conn_id=self.aws_conn_id)

file_name = f"{self.object_name}_backup.json"

s3_hook.load_string(string_data=json.dumps(object_data),

key=file_name,

bucket_name=self.backup_bucket,

replace=True) Step 2: Integrate the Operator in a DAG

Now, we can use this custom operator in a DAG to automate Salesforce job triggering and object backup.

from airflow import DAG

from datetime import datetime

from custom_operators.salesforce_backup_operator import SalesforceBackupOperator

default_args = {

'owner': 'airflow',

'depends_on_past': False,

'email_on_failure': False,

'email_on_retry': False,

'retries': 1,

}

with DAG(

dag_id='salesforce_backup_dag',

default_args=default_args,

start_date=datetime(2025, 1, 1),

schedule_interval='@daily',

catchup=False,

) as dag:

backup_salesforce_object = SalesforceBackupOperator(

task_id='backup_salesforce_custom_object',

salesforce_conn_id='salesforce_default',

object_name='CustomObject__c',

job_type='export',

backup_bucket='my-salesforce-backups',

aws_conn_id='aws_default',

)

backup_salesforce_object Key Considerations When Developing a Custom Airflow Operator

- Salesforce Authentication: Make sure the Salesforce connection in Airflow is configured with the necessary security token for API access. This is crucial for ensuring secure and authorized communication with the Salesforce platform.

- Error Handling: Incorporate robust error handling into the custom operator. This will help manage potential API failures, network interruptions, or unexpected responses, ensuring the operator can recover gracefully and continue functioning.

Scalability: Design the operator with scalability in mind. If your needs expand, ensure it can handle multiple objects or jobs simultaneously, accommodating growing data volumes or complex workflows.

Conclusion

Building a custom Apache Airflow operator for Salesforce job triggering and object backups is a powerful way to automate key processes and streamline your workflow. By integrating Salesforce’s robust API with the flexibility of Airflow, you can enhance efficiency, reduce manual intervention, and ensure that your data is securely backed up and job triggers are automated seamlessly. This approach not only saves time but also minimizes the risk of human error and ensures your processes are scalable and adaptable as your infrastructure grows.

Implementing a custom operator may seem challenging at first, but with the right prerequisites and attention to detail, it becomes an invaluable asset in your automation toolkit. Whether you’re aiming to automate bulk data exports, manage custom object backups, or integrate multiple systems, Apache Airflow offers the scalability and reliability needed to handle complex workflows with ease.

Streamline your Salesforce workflows with a custom Airflow operator. Get started now!