Building a Scalable Terraform Workflow

In 2024, over 78% of organizations using Infrastructure as Code (IaC) tools encountered challenges with state management as their cloud footprints expanded. Just when you think you have your Terraform state files under control, the growing complexity of your infrastructure adds another head. State files play a crucial role in tracking your resources, but as they grow, they can bring slower performance, scalability issues, and difficulties in team collaboration. If you’ve found yourself grappling with oversized or unmanageable state files, you’re not alone.

The good news is that with the right strategies and best practices, you can keep your state files well-organized, efficient, and able to scale with your needs. In this blog, we’ll look at the common challenges of managing large Terraform state files and share practical strategies to keep them lean and your Terraform workflow smooth as it grows.

Challenges with Large State Files

- Sluggish Performance

As your resource count grows, so does the time Terraform commands like plan and apply take to run, because by default Terraform refreshes every resource tracked in the state on each run. This can make your workflow feel frustratingly slow.

- Collaboration Headaches

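When multiple team members run Terraform against the same state file, concurrent runs can overwrite each other’s changes, and state files kept in version control invite merge conflicts. Without remote state and locking, this friction becomes all too common.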

- Higher Risk of Corruption

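Larger state files are touched by more people and more runs, which raises the chance of corruption or accidental overwrites. When they fail, it’s not just inconvenient; it can disrupt critical operations across everything the file tracks.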

- Increased Complexity

Keeping track of dependencies and relationships in a sprawling state file can feel like navigating a maze, adding unnecessary complications to your infrastructure management.

- Sensitive Data Concerns

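State files store resource attributes in plain text, including sensitive values such as database passwords, and a large state file may hold many of them. Encrypting state at rest and tightly restricting who can read it are top priorities to avoid security breaches.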
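Both corruption and secret exposure are easier to contain when state lives in a well-protected remote backend. The sketch below hardens an S3 bucket used for state, assuming the AWS provider v4 or later and reusing the placeholder bucket name my-terraform-state from the backend examples later in this post. Versioning lets you roll back to an earlier state version after a bad write, and server-side encryption keeps the secrets stored in state encrypted at rest.

```hcl
# Hypothetical bucket for Terraform state (bucket names are globally unique)
resource "aws_s3_bucket" "tf_state" {
  bucket = "my-terraform-state"
}

# Keep previous state versions so a corrupted or overwritten file can be restored
resource "aws_s3_bucket_versioning" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state objects at rest (state holds attribute values in plain text)
resource "aws_s3_bucket_server_side_encryption_configuration" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "aws:kms"
    }
  }
}

# Block every form of public access to the state bucket
resource "aws_s3_bucket_public_access_block" "tf_state" {
  bucket                  = aws_s3_bucket.tf_state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```

Teams commonly manage this bucket in a small, separate bootstrap configuration, because the bucket must exist before any other configuration can use it as a backend.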

Best Practices for Handling Large State Files

1. Modularize Configurations

Keeping your configurations well-organized is the key to managing large state files effectively. Breaking your infrastructure into smaller, reusable modules makes your code easier to maintain, and when each component is also deployed from its own root configuration with its own backend, it keeps individual state files small. Modularization also reduces the chance that a change in one area accidentally impacts others.

Here’s how to modularize configurations like a pro:

Step 1: Identify Logical Boundaries

Divide your infrastructure into logical components to keep things clean and manageable. For instance, separate your modules by function:

- Compute: EC2, Kubernetes clusters

- Storage: S3 buckets, RDS databases

- Networking: VPCs, subnets

This approach not only simplifies your state files but also makes your setup easier to scale and troubleshoot.
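If you are carving an existing configuration along these boundaries, resource addresses change (for example, aws_vpc.main becomes module.network.aws_vpc.main), and Terraform would otherwise plan to destroy and recreate those resources. Since Terraform v1.1, a moved block records the new address so existing objects are simply re-addressed in state. A minimal sketch, assuming a hypothetical network module that now contains the VPC:

```hcl
# The VPC used to be declared in the root module as aws_vpc.main.
# It now lives inside a (hypothetical) network module.
module "network" {
  source = "./modules/network"
}

# Re-address the existing VPC in state instead of destroying and recreating it
moved {
  from = aws_vpc.main
  to   = module.network.aws_vpc.main
}
```

Note that moved blocks only work within a single state file. Relocating resources into a different state file takes another workflow, such as an import block in the new configuration plus a removed block in the old one.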

Step 2: Create Reusable Modules

Think of modules as self-contained building blocks for your infrastructure. Each module should:

- Focus on a single purpose (e.g., creating an S3 bucket or setting up a VPC).

- Expose a clear interface, with inputs declared as variables and results exposed as outputs, so it’s easy to plug into different projects.

By keeping modules focused and reusable, you’ll streamline your Terraform configurations and ensure your state files stay tidy and efficient.
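As an illustration of a single-purpose module with a clear interface, here is a sketch of a small S3 bucket module. The module path, file names, and variable names are hypothetical, and the AWS provider v4 or later is assumed.

```hcl
# modules/s3-bucket/variables.tf (hypothetical module): the inputs
variable "bucket_name" {
  description = "Globally unique name for the bucket"
  type        = string
}

variable "tags" {
  description = "Tags applied to the bucket"
  type        = map(string)
  default     = {}
}

# modules/s3-bucket/main.tf: one purpose, one bucket
resource "aws_s3_bucket" "this" {
  bucket = var.bucket_name
  tags   = var.tags
}

# modules/s3-bucket/outputs.tf: the results other code may depend on
output "bucket_id" {
  description = "Name (ID) of the bucket"
  value       = aws_s3_bucket.this.id
}

output "bucket_arn" {
  description = "ARN of the bucket"
  value       = aws_s3_bucket.this.arn
}

# Calling the module from a root configuration (placeholder values)
module "logs_bucket" {
  source      = "./modules/s3-bucket"
  bucket_name = "example-logs-bucket"
  tags        = { Team = "platform" }
}
```

Because callers only see bucket_name, tags, and the two outputs, the module’s internals can change without touching the projects that use it.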

Use case: Terraform module structure for a Software-Defined Vehicle (SDV)

For a Software-Defined Vehicle (SDV) architecture, the AWS infrastructure design for network, storage, and compute would involve components optimized for vehicle data processing, telematics, cloud services, and scalability.

Directory Structure:

```
sdv/
├── terraform-network/
│   ├── main.tf
│   ├── variables.tf
│   ├── outputs.tf
│   └── README.md
├── terraform-storage/
│   ├── main.tf
│   ├── variables.tf
│   ├── outputs.tf
│   └── README.md
├── terraform-compute/
│   ├── main.tf
│   ├── variables.tf
│   ├── outputs.tf
│   └── README.md
└── root/
    ├── main.tf
    ├── variables.tf
    ├── terraform.tfvars
    ├── outputs.tf
    └── README.md
```
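The root configuration wires the modules together by passing one module’s outputs into another’s inputs. A sketch of root/main.tf, assuming the module directories sit next to root/, that the network module exposes vpc_id and private_subnet_ids outputs, and that the storage and compute modules accept the variables shown (all of these names are hypothetical):

```hcl
# root/main.tf
module "network" {
  source   = "../terraform-network"
  vpc_cidr = var.vpc_cidr # declared in root/variables.tf
}

module "storage" {
  source      = "../terraform-storage"
  bucket_name = var.telemetry_bucket_name # e.g. raw vehicle telemetry
}

module "compute" {
  source     = "../terraform-compute"
  vpc_id     = module.network.vpc_id
  subnet_ids = module.network.private_subnet_ids
}
```

Because all three modules are called from one root, they still share a single state file. To keep each state file small, you can instead give each component its own root configuration and backend and pass values between them with data sources, as the next practice describes.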

2. Use Data Sources for Cross-State Dependencies

When you split your infrastructure into separately managed modules, each with its own state file, sharing data between them is often necessary. For instance, one module might manage a VPC and its subnets, while another module needs those VPC and subnet IDs to provision EC2 instances. Instead of hardcoding values or duplicating resources across state files, you can use Terraform’s data sources to dynamically fetch the required information.

Here’s how to do it effectively:

- Define Explicit Outputs: In upstream modules, clearly define the outputs for the resources you want to share, such as the VPC ID or subnet IDs. This ensures downstream modules only access the data they need.

- Avoid Cyclic Dependencies: Terraform doesn’t support circular dependencies between state files, so ensure downstream modules don’t rely on outputs from modules they influence.

- Minimize Dependencies: Fetch only the data you truly need. Avoid overloading downstream configurations with unnecessary information to keep things efficient and manageable.

By leveraging data sources thoughtfully, you can create Terraform configurations that are robust, scalable, and easy to maintain, ensuring seamless communication between modules without bloating your state files.
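When the upstream resources live in a different state file, the terraform_remote_state data source can read that configuration’s root-level outputs directly. A minimal sketch, assuming the VPC configuration stores its state in the S3 bucket my-terraform-state under the hypothetical key network/terraform.tfstate and exposes vpc_id and public_subnet_ids as root outputs:

```hcl
# Read the outputs of the separately managed network configuration
data "terraform_remote_state" "network" {
  backend = "s3"

  config = {
    bucket = "my-terraform-state"
    key    = "network/terraform.tfstate" # hypothetical key
    region = "us-east-1"
  }
}

resource "aws_instance" "app" {
  ami           = "ami-12345678" # placeholder AMI ID
  instance_type = "t3.micro"
  subnet_id     = data.terraform_remote_state.network.outputs.public_subnet_ids[0]
}
```

Only root-module outputs are visible this way, and reading them requires access to the entire upstream state, including any secrets in it. If that access is a concern, provider data sources that look resources up by ID or tag, as in the example that follows, avoid it.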

Example:

Step 1: Upstream Module

Define a module (e.g., a VPC module) that outputs the data other configurations need, such as the VPC ID and public subnet IDs.

VPC Module (vpc/main.tf):

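```hcl
resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"

  tags = {
    Name = "Main VPC"
  }
}

# Public subnets referenced by the public_subnet_ids output (two /24s from the VPC range)
resource "aws_subnet" "public" {
  count                   = 2
  vpc_id                  = aws_vpc.main.id
  cidr_block              = cidrsubnet(aws_vpc.main.cidr_block, 8, count.index)
  map_public_ip_on_launch = true

  tags = {
    Name = "public-${count.index}"
  }
}

output "vpc_id" {
  value = aws_vpc.main.id
}

output "public_subnet_ids" {
  value = aws_subnet.public[*].id
}
```

Step 2: Downstream Module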

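In a separate configuration (e.g., one that provisions EC2 instances), look up the VPC and its public subnets with data sources instead of redefining them or hardcoding their IDs.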

```hcl
# Data source for the existing VPC, looked up by the Name tag set in the VPC module
data "aws_vpc" "prod" {
  tags = {
    Name = "Main VPC"
  }
}

# Data source for public subnets in the existing VPC
data "aws_subnets" "public" {
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.prod.id]
  }

  filter {
    name   = "tag:Name"
    values = ["*public*"] # Adjust the tag filter to match your naming convention
  }
}

# EC2 instance using one of the public subnets
resource "aws_instance" "web" {
  ami           = "ami-12345678" # Placeholder AMI ID; replace with a real one
  instance_type = "t2.micro"
  subnet_id     = data.aws_subnets.public.ids[0] # Use the first matching public subnet ID

  tags = {
    Name = "Web Server"
  }
}
```

3. Use Workspaces for Multi-Environment Management

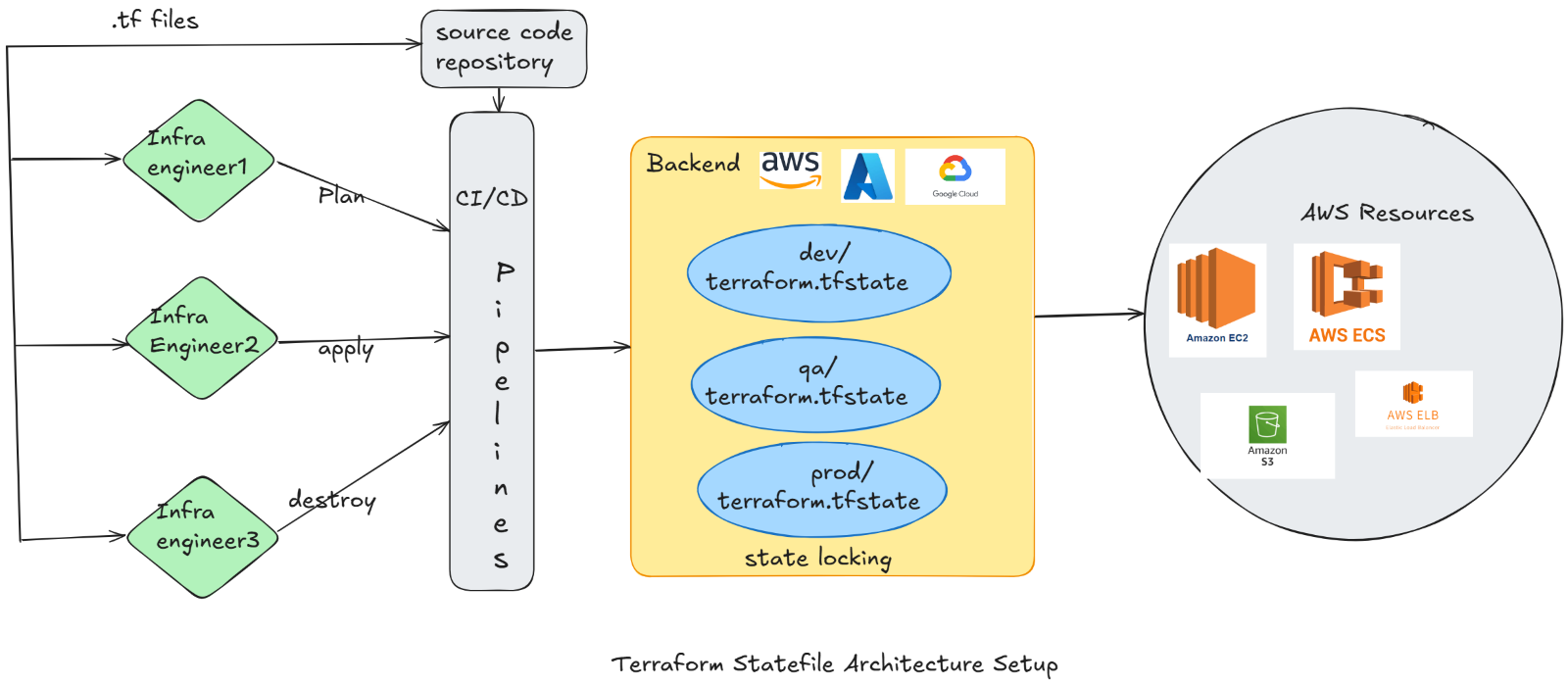

Terraform workspaces are a powerful way to manage multiple environments like development, staging, and production, using the same configuration while maintaining separate state files. Each workspace comes with its own .tfstate file, ensuring resources in one environment don’t interfere with those in another. This separation helps maintain clean, isolated environments.

Why Use Workspaces?

- State Isolation: Each workspace keeps its state file separate, avoiding accidental overlaps between environments.

- Environment-Aware Configuration: The built-in terraform.workspace value lets you vary settings, such as instance sizes or counts, per environment.

- Simplified Workflow: Managing multiple environments becomes more streamlined with a single configuration.
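For example, terraform.workspace can drive per-environment settings from a single configuration. A sketch, assuming workspaces named dev and prod; the instance sizes and counts are hypothetical:

```hcl
locals {
  # Per-workspace settings
  settings = {
    dev  = { instance_type = "t3.micro", instance_count = 1 }
    prod = { instance_type = "t3.large", instance_count = 3 }
  }

  # Any unlisted workspace (including "default") falls back to the dev settings
  env = lookup(local.settings, terraform.workspace, local.settings["dev"])
}

resource "aws_instance" "web" {
  count         = local.env.instance_count
  ami           = "ami-12345678" # placeholder AMI ID
  instance_type = local.env.instance_type

  tags = {
    Name        = "web-${terraform.workspace}-${count.index}"
    Environment = terraform.workspace
  }
}
```

Running terraform apply in the prod workspace would then create three t3.large instances, while dev gets a single t3.micro, each tracked in its own state.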

When to Consider Alternatives:

For environments that differ significantly, or that need separate credentials and access controls, separate configurations or repositories are often a better approach than workspaces.

Key Workspace Commands:

- Create a new workspace: terraform workspace new dev

- Switch to an existing workspace: terraform workspace select dev

Workspaces offer a cleaner, more efficient way to handle multi-environment setups, helping you maintain clarity and control over your infrastructure.

Example Configuration for Workspaces:

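```hcl
terraform {
  backend "s3" {
    bucket = "my-terraform-state"
    # Backend blocks cannot interpolate values such as terraform.workspace.
    # The S3 backend instead stores each non-default workspace's state at
    # <workspace_key_prefix>/<workspace>/<key>, e.g. envs/dev/terraform.tfstate.
    key                  = "terraform.tfstate"
    workspace_key_prefix = "envs"
    region               = "us-east-1"
    dynamodb_table       = "terraform-lock-table" # State locking
  }
}
```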

Directory Structure:

```
.
├── main.tf
├── variables.tf
├── outputs.tf
└── terraform.tfstate.d/   # Per-workspace state; created only with the local backend
```

Commands:

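- Initialize Terraform:

```shell
terraform init
```

- Create and Switch Workspaces (terraform workspace new also switches to the workspace it creates):

```shell
terraform workspace new dev
terraform workspace new prod
terraform workspace select dev
```

- Apply Changes to the Current Workspace:

```shell
terraform apply
```

- View Current Workspace:

```shell
terraform workspace show
```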

4. Limit State File Scope

Keeping your state files lean and focused is crucial for efficient Terraform management. Here’s how to do it:

- Break It Down: Avoid cramming too many resources into a single root configuration. Instead, split them into smaller, purpose-driven configurations tailored to specific tasks.

- Use Separate State Files: For unrelated resources, like your VPC, application stack, and monitoring setup, maintain separate state files. This keeps each plan fast and limits the blast radius: a change to the monitoring stack can’t accidentally modify the VPC.

By limiting the scope of each state file, you can improve performance, simplify maintenance, and enhance scalability.
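In practice, this means giving each component its own root configuration with a distinct backend key, so each one reads and writes only its own state. A sketch using the S3 backend, with hypothetical directory names and keys (the two terraform blocks live in two different directories, since a single configuration can have only one backend):

```hcl
# network/backend.tf: state for the VPC layer only
terraform {
  backend "s3" {
    bucket         = "my-terraform-state"
    key            = "network/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-lock-table"
    encrypt        = true
  }
}

# monitoring/backend.tf: a separate state for monitoring resources
terraform {
  backend "s3" {
    bucket         = "my-terraform-state"
    key            = "monitoring/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-lock-table"
    encrypt        = true
  }
}
```

Running terraform plan in monitoring/ then refreshes only the monitoring resources and never writes to the network state.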

5. Regularly Clean Up State Files

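State files can accumulate entries you no longer need, such as references to resources that were deleted outside Terraform or that you no longer want Terraform to manage. Regular cleanups keep them efficient:

- Use the terraform state rm command to remove such resources from your state file. It only makes Terraform forget them; the real infrastructure is not destroyed. Remove the matching resource block from your configuration as well, or the next plan will try to create the resource again.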

This practice prevents bloated state files, ensuring your infrastructure remains agile and easy to manage.

Example Command:

terraform state rm aws_instance.unused_instance
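On Terraform v1.7 and later, the same cleanup can be expressed declaratively with a removed block, which appears in plan output and goes through code review like any other change. A sketch for the aws_instance.unused_instance example above; delete the resource block itself from the configuration at the same time:

```hcl
# Stop managing aws_instance.unused_instance without destroying it
removed {
  from = aws_instance.unused_instance

  lifecycle {
    destroy = false # forget it in state; leave the real instance running
  }
}
```

Once the change has been applied in every environment that uses this configuration, the removed block itself can be deleted.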

6. State File Management Tools

Use tools like Terragrunt to manage configurations, module dependencies, and remote state setups across multiple environments.
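Terragrunt expresses module dependencies with dependency blocks. A sketch of a child terragrunt.hcl for a hypothetical compute unit that inherits the root remote_state settings through include and reads outputs from a sibling network unit; the directory layout, module path, and output names are assumptions:

```hcl
# live/compute/terragrunt.hcl (hypothetical layout)

# Inherit remote_state and other shared settings from the parent terragrunt.hcl
include "root" {
  path = find_in_parent_folders()
}

terraform {
  source = "../../modules/compute" # hypothetical module path
}

# Read outputs from the ../network unit (run-all commands also apply it first)
dependency "network" {
  config_path = "../network"
}

inputs = {
  vpc_id     = dependency.network.outputs.vpc_id
  subnet_ids = dependency.network.outputs.public_subnet_ids
}
```

Depending on your Terragrunt version, the root file may be expected under a different name (recent releases recommend root.hcl with find_in_parent_folders("root.hcl")), so check the documentation for the release you use.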

Example Terragrunt Configuration (terragrunt.hcl):

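```hcl
remote_state {
  backend = "s3"

  # Have Terragrunt write the backend block into each unit as backend.tf
  generate = {
    path      = "backend.tf"
    if_exists = "overwrite_terragrunt"
  }

  config = {
    bucket         = "my-terraform-state"
    key            = "envs/${path_relative_to_include()}/terraform.tfstate" # One state file per unit
    region         = "us-east-1"
    encrypt        = true
    dynamodb_table = "terraform-lock-table"
  }
}
```

7. Monitor State File Size and Performance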

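Regularly monitor the size of your state (terraform state pull prints the current state, wherever it is stored) and track execution times for terraform plan and terraform apply. For performance analysis, enable Terraform’s debug logs with TF_LOG=DEBUG, and set TF_LOG_PATH to write them to a file.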

Conclusion

Managing large Terraform state files doesn’t have to be a daunting task. By modularizing configurations, utilizing data sources, leveraging workspaces, limiting state file scope, and cleaning up unused resources regularly, you can keep your state files efficient and your workflow streamlined.

These best practices will help you avoid common pitfalls like performance issues and collaboration conflicts, ensuring your infrastructure remains scalable and easy to manage. As your infrastructure grows, adopting these strategies will help you stay in control, letting you focus on building without worrying about state file complexity.

Ready to streamline your Terraform workflows? Implementing these practices could be the next step towards a more efficient and scalable infrastructure.