How LangChain Is Powering the Future of AI (2025 Insights)

LangChain for Smarter AI

Artificial Intelligence (AI) is powering a new era of innovation, unlocking opportunities across every major industry. According to IDC's 2025 AI Market Outlook, global AI spending is projected to exceed $600 billion this year, reflecting a 27% increase from 2024. As organizations race to build intelligent, efficient, and dynamic applications, frameworks like LangChain have emerged as game-changers.

LangChain is a versatile framework built to simplify and accelerate the development of AI applications. Whether you are designing a retrieval-augmented generation (RAG) system, a customer support agent, or a complex autonomous workflow, LangChain provides the tools and modularity needed to build it.

In this 2025 guide, we’ll dive deep into what LangChain is, explore its core components, examine real-world applications, and explain why it has become a critical foundation for next-generation AI development.

Overview of LangChain

LangChain is an open-source framework designed to simplify the development of applications powered by large language models (LLMs). It connects LLMs to external data sources, allows for complex reasoning, and facilitates multi-step workflows, all while providing robust guardrails and memory features.

Originally launched in 2022, LangChain has evolved rapidly. As of 2025, it supports integrations with proprietary models from OpenAI (GPT-5), Google (Gemini 2), and Anthropic (Claude 4), as well as open-weight models such as Meta’s Llama 4 and Mistral. Its integration ecosystem has expanded considerably, offering connectors for databases, APIs, vector stores, and even on-device models.

At its heart, LangChain is about chaining LLM calls and actions together logically and predictably, creating powerful, context-aware AI systems.

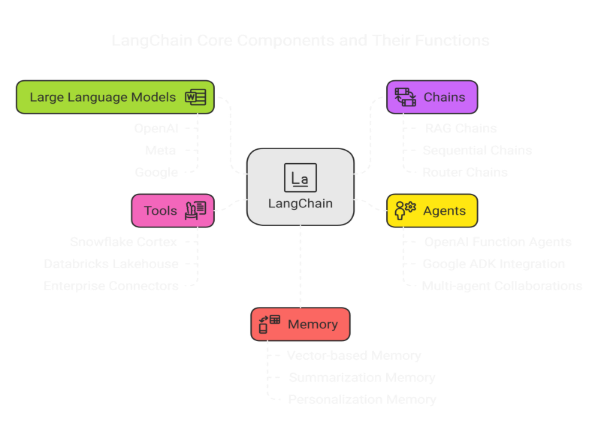

Core Components of LangChain

Let's break down LangChain’s key building blocks:

1. Large Language Models (LLMs)

LangChain provides abstractions over various LLM providers. You can easily switch between different models or ensemble multiple models together, optimizing cost, performance, and accuracy.
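
The idea of a provider abstraction can be illustrated with a small, dependency-free sketch. This is not LangChain's API: the `ChatModel` interface, the two stand-in model classes, and the `pick_model` policy are hypothetical names chosen for illustration, and no real model is called.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface that every provider wrapper satisfies."""

    def invoke(self, prompt: str) -> str: ...


class FastCheapModel:
    """Stand-in for a small, inexpensive model (no real API call is made)."""

    def invoke(self, prompt: str) -> str:
        return f"[fast] {prompt[:20]}"


class SlowAccurateModel:
    """Stand-in for a larger, more capable model."""

    def invoke(self, prompt: str) -> str:
        return f"[accurate] {prompt}"


def answer(model: ChatModel, question: str) -> str:
    # Application code depends only on the interface, so swapping
    # providers does not require changes here.
    return model.invoke(question)


def pick_model(question: str, word_limit: int = 8) -> ChatModel:
    # Toy cost/accuracy policy: short questions go to the cheap model.
    if len(question.split()) <= word_limit:
        return FastCheapModel()
    return SlowAccurateModel()
```

Because `answer` only relies on `invoke`, changing providers is a one-line change at the call site, which is the property the abstraction is meant to give you.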

If you want a deeper understanding of how to work with different models effectively, check out our detailed guide on Building with LLMs.

Supported LLMs (2025 update):

- OpenAI (GPT-5, GPT-4o)

- Meta (Llama 4, including the Scout variant)

- Google (Gemini 2, Gemini Nano)

- Anthropic (Claude 4, Claude 4 Haiku)

- Mistral (Mixtral 8x22B)

- Cohere, Hugging Face Hub, and more

2. Chains

Chains are sequences of actions involving LLMs and other components. Simple chains may consist of a single LLM call, while complex chains orchestrate multiple steps like database querying, document retrieval, and final summarization.

Popular types in 2025:

- Retrieval-Augmented Generation (RAG) Chains

- Sequential Chains

- Router Chains (dynamic path selection based on input)

- Multi-Modal Chains (combining text, images, and speech)
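
To make the sequential and router patterns concrete, here is a minimal plain-Python sketch. It assumes each step is simply a function from string to string; `sequential_chain`, `router_chain`, `retrieve`, and `summarize` are illustrative names, not LangChain classes, and the steps are placeholders for real retriever or LLM calls.

```python
from typing import Callable

Step = Callable[[str], str]


def sequential_chain(*steps: Step) -> Step:
    """Compose steps so each one receives the previous step's output."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def router_chain(routes: dict[str, Step], default: Step) -> Step:
    """Pick a sub-chain by the first keyword found in the input."""
    def run(text: str) -> str:
        lowered = text.lower()
        for keyword, chain in routes.items():
            if keyword in lowered:
                return chain(text)
        return default(text)
    return run


# Placeholder steps; a real application would call a retriever or an LLM here.
def retrieve(query: str) -> str:
    return f"{query} | context: refunds take 5 days"


def summarize(text: str) -> str:
    return "SUMMARY(" + text + ")"


support = sequential_chain(retrieve, summarize)
router = router_chain({"refund": support}, default=lambda q: "GENERAL(" + q + ")")
```

Calling `router("How do I get a Refund?")` sends the input through the retrieve-then-summarize chain, while an unrelated input falls through to the default step.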

3. Agents

Agents make decisions dynamically, determining which tool to use at each step.

Recent advancements include:

- OpenAI Function Agents

- Google ADK Integration (for smart service agents)

- Multi-agent collaborations (using frameworks like CrewAI inside LangChain)

Agents are no longer "just smart"; they are goal-driven and able to recover from failed steps on their own.
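
The decide-act-observe loop behind an agent can be sketched in a few lines. This is a simplified illustration under stated assumptions: `fake_policy` stands in for the LLM's decision step, the two tools are toy functions, and none of these names come from LangChain.

```python
from typing import Callable

Tool = Callable[[str], str]

TOOLS: dict[str, Tool] = {
    # Adds integers separated by "+", e.g. "2+3+4".
    "calculator": lambda expr: str(sum(int(n) for n in expr.split("+"))),
    # Tiny hard-coded knowledge base.
    "lookup": lambda key: {"capital of france": "Paris"}.get(key.lower(), "unknown"),
}


def fake_policy(goal: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM's decision: returns (action, argument)."""
    if observations:
        return ("finish", observations[-1])
    if any(ch.isdigit() for ch in goal):
        return ("calculator", goal)
    return ("lookup", goal)


def run_agent(goal: str, max_steps: int = 3) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, argument = fake_policy(goal, observations)
        if action == "finish":
            return argument
        # Execute the chosen tool and feed the result back to the policy.
        observations.append(TOOLS[action](argument))
    return "stopped: step limit reached"
```

The `max_steps` cap is a deliberate design choice: an agent that picks its own next action needs an explicit bound so that a poor decision cannot loop forever.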

4. Tools

LangChain defines "tools" as external capabilities the model can call: APIs, databases, search engines, payment processors, CRMs, and more.

New 2025 updates:

- Native Snowflake Cortex and Databricks Lakehouse plugins

- Enterprise-grade connectors for SAP, Salesforce, and ServiceNow

- Streaming and real-time tools integration
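
A tool is essentially a named, described function that the model can request. The sketch below shows one way to represent and dispatch tools in plain Python; the `Tool` dataclass, `REGISTRY`, and `get_inventory` are hypothetical and only illustrate the concept, with an in-memory dictionary standing in for a real inventory API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str  # shown to the model so it knows when to call the tool
    func: Callable[[str], str]


def get_inventory(sku: str) -> str:
    # Hypothetical in-memory stand-in for a real inventory API.
    stock = {"SKU-1": 12, "SKU-2": 0}
    return str(stock.get(sku, 0))


REGISTRY = {
    t.name: t
    for t in [Tool("get_inventory", "Return units in stock for a SKU.", get_inventory)]
}


def describe_tools() -> str:
    """Text block that could be placed in a prompt."""
    return "\n".join(f"{t.name}: {t.description}" for t in REGISTRY.values())


def call_tool(name: str, argument: str) -> str:
    tool = REGISTRY.get(name)
    if tool is None:
        # Return the error as text so the model can recover instead of crashing.
        return f"error: unknown tool '{name}'"
    return tool.func(argument)
```

Keeping the description next to the function means the text the model sees and the code that runs cannot drift apart.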

5. Memory

Memory allows applications to maintain context across interactions. As of 2025, LangChain’s memory modules offer:

- Vector-based memory

- Summarization memory (dynamic compression of long conversations)

- Personalization memory for user-adaptive experiences

- Privacy-compliant memory management (GDPR, HIPAA-ready)
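
Summarization memory, for example, can be pictured as a sliding window plus a running summary. The class below is a minimal sketch of that idea, not LangChain's implementation: `SummarizingMemory` is a hypothetical name, and `_compress` truncates to three words where a real system would call an LLM to summarize.

```python
from collections import deque


class SummarizingMemory:
    """Keeps the last `window` turns verbatim and compresses older ones."""

    def __init__(self, window: int = 2) -> None:
        self.window = window
        self.recent: deque[str] = deque()
        self.summary: list[str] = []

    def _compress(self, turn: str) -> str:
        # Placeholder for an LLM summarization call: keep the first three words.
        return " ".join(turn.split()[:3])

    def add(self, turn: str) -> None:
        self.recent.append(turn)
        # Fold turns that fall out of the window into the summary.
        while len(self.recent) > self.window:
            self.summary.append(self._compress(self.recent.popleft()))

    def context(self) -> str:
        """Text to prepend to the next prompt."""
        parts = []
        if self.summary:
            parts.append("Earlier: " + "; ".join(self.summary))
        parts.extend(self.recent)
        return "\n".join(parts)
```

The trade-off is prompt size versus fidelity: recent turns stay exact, while older turns cost only a few tokens each.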

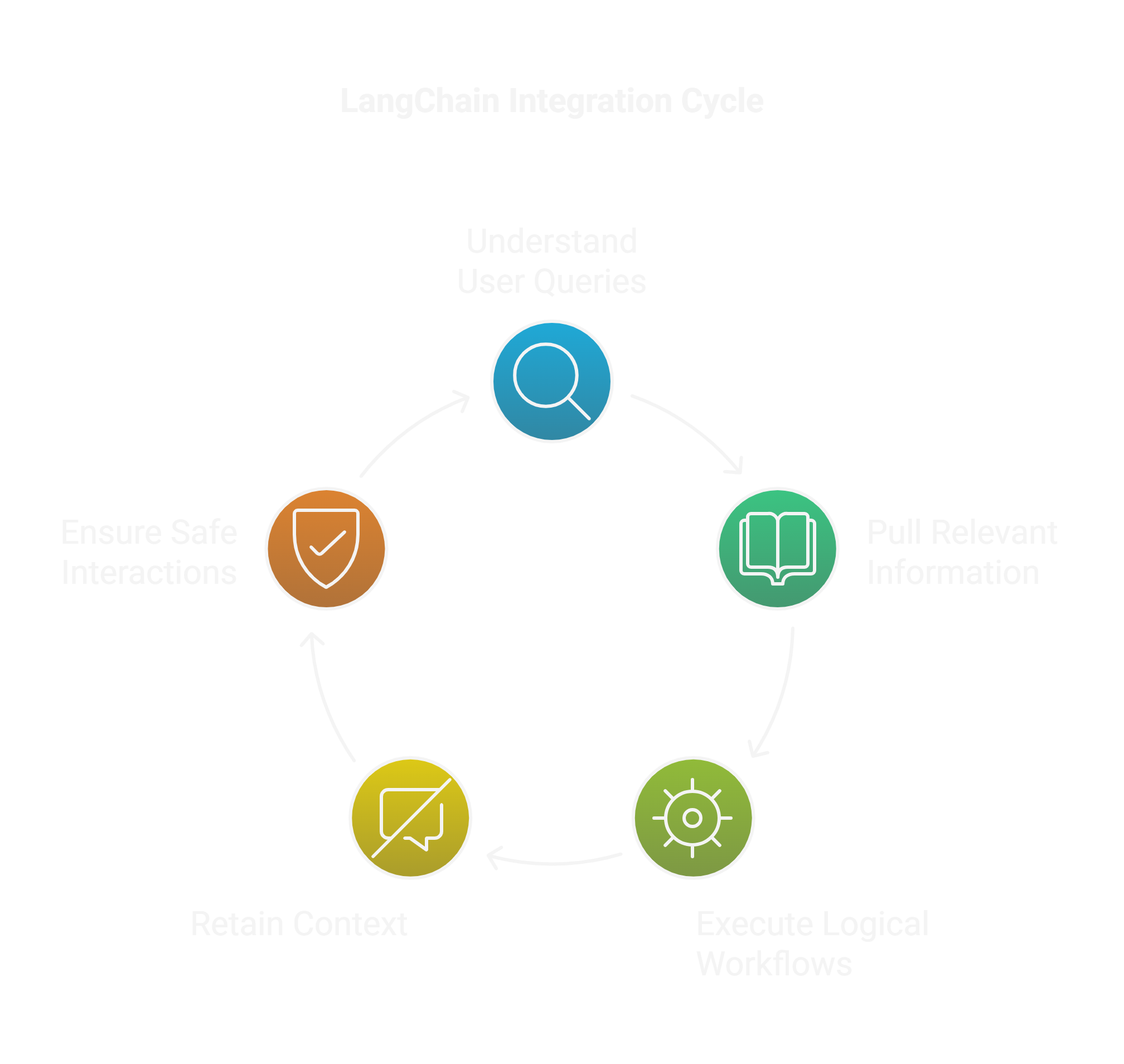

How LangChain Fits into AI Application Development

AI applications today need more than just language generation. They must:

- Understand user queries.

- Pull relevant information from trusted sources.

- Execute logical workflows.

- Retain conversational or user-specific context.

- Offer safe, compliant interactions.

LangChain provides the “glue” to seamlessly connect LLMs with:

- Knowledge Bases (e.g., RAG pipelines)

- Action Systems (e.g., API calls, database operations)

- Interaction Flows (e.g., user-agent conversations)

Without LangChain, developers often "reinvent the wheel" and end up with brittle, error-prone custom solutions. LangChain accelerates development, encourages modularity, and helps future-proof applications against the rapid evolution of AI models.

In 2025, many companies are embedding LangChain into their MLOps pipelines, data fabric architectures, and autonomous agent ecosystems.

For instance, if you’re interested in building advanced AI workflows like OCR-based chatbots powered by LangChain, Vertex AI, ChromaDB, and Gradio, don’t miss our detailed walkthrough on Building an OCR-Based Chatbot. It’s a hands-on guide showing how LangChain enables dynamic document parsing and intelligent user interactions at scale.

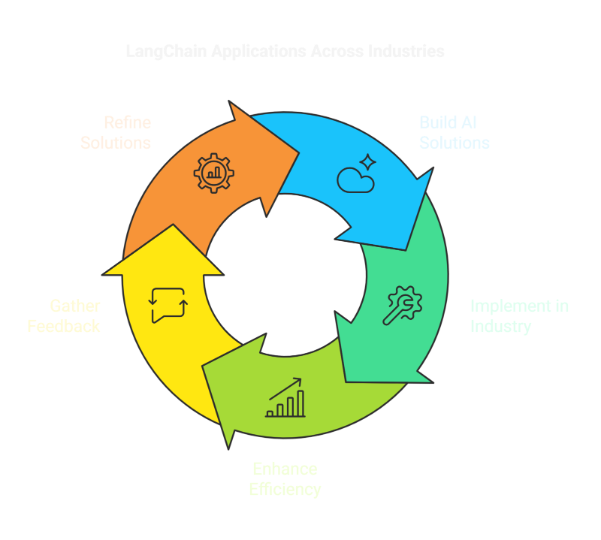

Real-World Examples

Here’s how LangChain is already making a difference across industries:

Healthcare

Healthcare providers are using LangChain to build patient triage bots that combine Retrieval-Augmented Generation (RAG) with agentic workflows. These systems suggest next steps based on patient inputs while adhering to HIPAA requirements. They help reduce wait times, support more accurate triage decisions, and improve the patient experience.

Retail

Retailers are leveraging LangChain to deploy personal shopping assistants that combine customer profile memories, real-time inventory APIs, and LLM personalization. Shoppers receive highly tailored product recommendations, leading to increased conversion rates and enhanced customer loyalty.

Financial Services

In financial services, automated document analysis agents built with LangChain are transforming operations. These agents can read complex contracts, extract key terms, identify risks, and even suggest negotiation points, saving countless hours of manual review and boosting operational efficiency.

Education

Adaptive tutoring systems powered by LangChain are revolutionizing digital education. These systems dynamically pull from a vast curriculum database based on individual student queries, providing customized and responsive learning experiences that drive better educational outcomes.

Media

In the media sector, LangChain-based tools are enabling dynamic content summarization for podcasts and news feeds. By using multi-modal chains, these systems can process audio, text, and video content, delivering concise, relevant summaries to users faster than ever before.

Why LangChain Is a Foundation for Next-Gen AI

LangChain is a strategic catalyst for the future of AI innovation. Here's why it is considered foundational for next-generation AI systems:

1. Seamless Orchestration of Complex Workflows

Modern AI applications rarely rely on a single model or task. They require multi-step reasoning, data retrieval, memory retention, and decision-making, all orchestrated seamlessly. LangChain's ability to chain multiple LLM interactions, integrate APIs, and execute conditional logic makes it uniquely suited for building sophisticated AI workflows that adapt and evolve.

2. Bridging LLMs and Real-World Data

Out-of-the-box LLMs have limitations when it comes to accessing current, dynamic, or proprietary data. LangChain’s tools for Retrieval-Augmented Generation (RAG) allow developers to connect models with live databases, vector stores, and APIs, empowering AI applications to deliver more accurate, timely, and contextually rich outputs.
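
The retrieve-then-generate flow can be sketched without any external services. The example below is a toy under stated assumptions: a bag-of-words counter stands in for an embedding model, an in-memory list (`DOCS`) stands in for a vector store, and the function names are illustrative rather than part of LangChain.

```python
import math
import re
from collections import Counter

DOCS = [
    "Refunds are processed within 5 business days.",
    "Our support line is open Monday to Friday.",
    "Premium plans include priority shipping.",
]


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would use an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    context = "\n".join(retrieve(query))
    # The grounded prompt is what would be sent to the LLM.
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The model never needs to have seen the refund policy during training; the relevant text is fetched at query time and placed in the prompt.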

3. Enabling Agentic AI Systems

Next-gen AI is increasingly agentic, meaning systems can make decisions, perform actions, and refine their behavior based on goals. LangChain’s agent architecture lets developers build applications where LLMs act autonomously, accessing tools, invoking functions, and self-correcting through feedback loops.

4. Modular, Flexible, and Extensible

LangChain’s modular design means developers can plug and play different models, memory types, chains, and agents according to their application needs. Its flexibility supports experimentation and scaling, whether you are building a lightweight app or a production-grade enterprise solution.

5. Safety and Guardrails for Enterprise Adoption

Enterprise AI adoption demands security, compliance, and control. LangChain provides built-in safety mechanisms, such as validation layers, permissioned tool access, and ethical guidelines enforcement. This makes it a trusted choice for sectors like healthcare, finance, and education where regulatory compliance is non-negotiable.
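
Two of the mechanisms named above, permissioned tool access and a validation layer, can be illustrated generically. The sketch below shows the pattern rather than any built-in LangChain feature: the role names, the `PERMISSIONS` table, and the email-masking rule are assumptions made for the example.

```python
import re

# Hypothetical role-to-tool permissions; names are illustrative only.
PERMISSIONS = {
    "support_agent": {"lookup_order"},
    "billing_agent": {"lookup_order", "issue_refund"},
}

# Deliberately simple email pattern for demonstration purposes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def authorize(role: str, tool_name: str) -> None:
    """Permissioned tool access: refuse calls outside the role's allow-list."""
    if tool_name not in PERMISSIONS.get(role, set()):
        raise PermissionError(f"{role} may not call {tool_name}")


def redact(text: str) -> str:
    """Validation layer: mask email addresses before text leaves the system."""
    return EMAIL.sub("[redacted email]", text)
```

In practice `authorize` would run before every tool call an agent requests, and `redact` (or a stricter validator) would run on model output before it is shown or logged.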

6. Thriving Ecosystem and Community Support

LangChain has cultivated a vibrant developer community and ecosystem. With thousands of plugins, templates, and community-contributed tools, developers can rapidly accelerate their projects. The framework’s ongoing updates in 2025 continue to enhance compatibility with the latest AI models and cloud-native platforms.

In short, LangChain is shaping the very architecture of how intelligent systems are designed, deployed, and evolved.

Final Thoughts

The growth of LangChain reflects a broader industry shift. AI applications are no longer isolated experiments; they are dynamic, interconnected systems solving real-world problems at scale. LangChain offers a blueprint for creating intelligent, adaptive solutions that deliver lasting value.

If you’re looking to stay ahead in 2025 and beyond, there’s never been a better time to explore what LangChain can help you create.

At InfoServices, we specialize in building cutting-edge AI applications using LangChain, Vertex AI, RAG architectures, autonomous agents, and more. Whether you're just starting or scaling enterprise-grade systems, our experts are ready to help you harness the full potential of next-generation AI.

👉 Contact us today to bring your AI vision to life.

The future is chained together, and it's just getting started.

Frequently Asked Questions (FAQs)

What is LangChain?

LangChain is an open-source framework designed to simplify the development of applications powered by large language models (LLMs). It enables integration with external data sources, supports complex reasoning, and facilitates multi-step workflows.

How does LangChain enhance AI application development?

By providing modular components like chains, agents, and memory, LangChain allows developers to build context-aware AI systems that can interact with various tools and data sources seamlessly.

What are the core components of LangChain?

The core components include LLMs, chains, agents, tools, and memory modules, each serving a specific function in building intelligent applications.

Can LangChain integrate with different LLM providers?

Yes, LangChain supports integrations with various LLM providers, including OpenAI, Meta, Google, Anthropic, and others, allowing flexibility in choosing the appropriate model for your application.

Is LangChain suitable for enterprise applications?

Absolutely. LangChain offers enterprise-grade features like privacy-compliant memory management, robust integration capabilities, and support for complex workflows, making it ideal for enterprise use cases.