How Enterprises Are Using LangChain to Power Autonomous Agents

Enterprise Innovation with LangChain

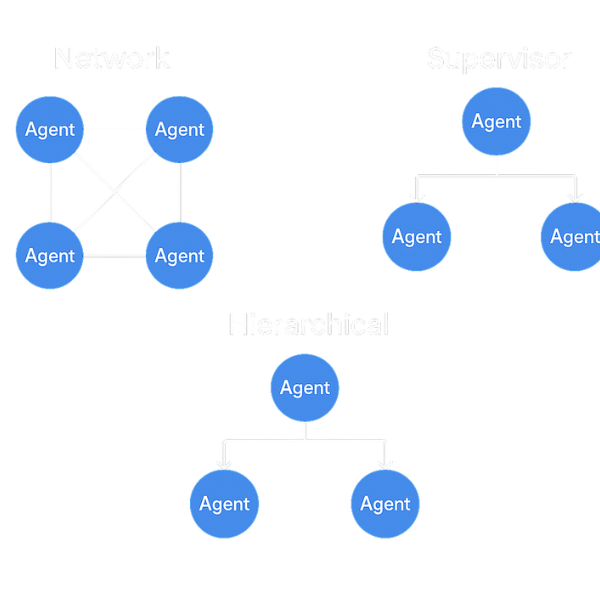

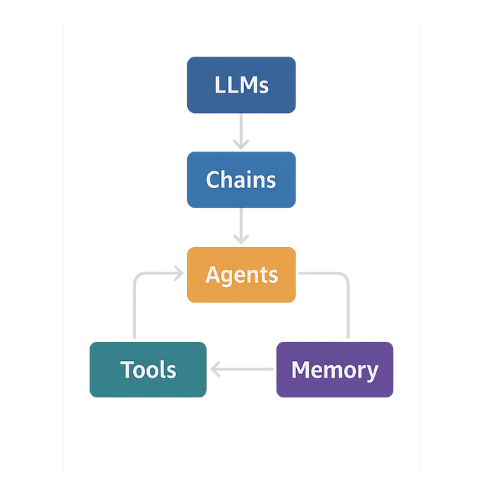

Enterprises across industries are embracing agentic AI to automate complex workflows, and LangChain sits at the core of this trend. This open-source framework makes it easy to chain LLMs, data, and tools into intelligent agents that plan, act, and learn. Unlike linear chatbots, LangChain-based systems can coordinate multiple specialized agents (planners, executors, evaluators, etc.) that collaborate on tasks. We have explored LangChain’s evolving architecture and real-world applications in our posts on LangChain’s 2025 multi-agent framework. In this article, we detail how enterprises use LangChain to build autonomous agents, highlighting key use cases, architecture patterns, integrations, and core features.

- Agentic architecture: LangChain provides a modular foundation for building multi-agent systems. Through LangGraph, it supports orchestration natively, letting developers define workflows in which planners, executors, communicators, and evaluators break down and solve problems collaboratively.

- Broad use cases: Companies are deploying LangChain agents for customer support, data analytics, HR assistance, compliance monitoring, and more (examples below).

- Seamless integrations: LangChain offers built-in connectors and tools for linking agents to enterprise systems (CRM/ERP, databases, cloud APIs). Its integrations catalog includes packages for data platforms such as Snowflake and Databricks, and business systems such as SAP, Salesforce, and ServiceNow can be reached through available connectors or custom API tools. Refer to the official LangChain integrations for more details.

- Advanced features: Core LangChain capabilities – memory modules, RAG, toolchains, evaluation loops, and orchestration graphs – let agents retain context, fetch knowledge, and self-correct on the fly.

Key Enterprise Use Cases

Enterprises are using LangChain agents to automate high-value tasks across domains. Notable examples include:

- Customer Support Bots: Multi-agent helpdesk systems use a Query Router agent to classify intent, retrieval agents to search knowledge bases, and escalation agents to bring in human reps on complex issues. This layered approach can boost resolution rates by 35–45% over single-agent bots, although actual gains depend on the quality of the knowledge base and the mix of tickets. LangChain simplifies RAG-based QA by connecting LLMs to product docs and ticket systems. For implementation details, see the LangChain RAG documentation.

- Enterprise Analytics Assistants: Data-driven agents translate business questions into SQL, fetch data, and create visualizations. For instance, a Planner agent defines analytical goals, a SQL Agent queries the data warehouse, and a Visualization Agent renders charts. These agents can even pull from dashboards or BI tools as needed, enabling self-service analytics via natural language.

- HR & Employee Self-Service: Onboarding or HR-chatbot agents can tap into HR/CRM APIs (e.g., ServiceNow, Workday, Salesforce) to answer employee queries. For example, an HR assistant might fetch policy text from a knowledge base (via RAG) and schedule training tasks. Connectors or custom API tools for systems such as SAP and ServiceNow let agents update tickets or user profiles automatically.

- Compliance & Legal Review: Automated compliance agents scan documents and communications for policy violations. For instance, healthcare triage bots use RAG to consult HIPAA guidelines and Evaluator Agents to check that privacy rules are met. In finance, LangChain agents can read contracts (using RAG over legal databases) and flag risky clauses. These agents often hand off to a human reviewer (human-in-the-loop) when uncertainty is high.

- Developer & Research Assistants: LangChain powers coding assistants that break down developer requests: a Planner splits a feature into steps, a Code Generator writes and debugs code, and a Tester/Review agent validates the output. Similarly, research bots use a Planner, a RAG agent to gather info from internal documents, and a Critic agent to verify facts. These multi-agent chains accelerate product development and innovation.
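The Customer Support pattern above (a Query Router, a retrieval step, and an escalation path) can be sketched in a few lines of plain Python. This is a framework-agnostic illustration, not LangChain’s API: the keyword-based classify_intent function is a hypothetical stand-in for an LLM classifier, and the intents, canned answers, and confidence threshold are made-up assumptions.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword table standing in for an LLM-based intent classifier.
INTENT_KEYWORDS = {
    "billing": {"invoice", "refund", "charge", "payment"},
    "technical": {"error", "crash", "login", "bug"},
}

# Hypothetical knowledge-base answers keyed by intent (a real system would use RAG).
KNOWLEDGE_BASE = {
    "billing": "Refunds are processed within 5 business days.",
    "technical": "Try clearing your cache, then sign in again.",
}


@dataclass
class RoutedReply:
    intent: str
    answer: str
    escalated: bool


def classify_intent(query: str) -> tuple[str, float]:
    """Query Router: return (intent, confidence) from keyword overlap."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    best_intent, best_hits = "unknown", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    # Crude confidence: two or more keyword hits count as fully confident.
    return best_intent, min(best_hits / 2, 1.0)


def handle(query: str, threshold: float = 0.5) -> RoutedReply:
    """Send confident queries to retrieval and everything else to escalation."""
    intent, confidence = classify_intent(query)
    if intent == "unknown" or confidence < threshold:
        # Escalation agent: hand the ticket to a human rep.
        return RoutedReply(intent, "Escalated to a human agent.", escalated=True)
    # Retrieval agent: look up the answer for this intent.
    return RoutedReply(intent, KNOWLEDGE_BASE[intent], escalated=False)


print(handle("I was charged twice and need a refund"))
print(handle("Can someone call me back?"))
```

In production, the classifier would typically be an LLM call with structured output and the lookup a RAG query; keeping the escalation rule as explicit code makes the human handoff easy to audit.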

Each use case leverages LangChain’s strength in multi-step reasoning. By distributing tasks among specialized agents, the system handles dynamic scenarios with context-aware decision-making.

LangChain Architecture & Orchestration

LangChain’s architecture, extended by LangGraph, is designed for scale and resilience. It supports specialized agent roles and a powerful orchestration layer:

- Agent Roles: A common design pattern splits work into four agent roles: Planner (breaks goals into subtasks), Executor (performs actions like database queries or API calls), Communicator (formats and passes context between agents), and Evaluator (performs QA on outputs). This separation of concerns keeps each agent focused and reusable.

- Orchestration Engine: An orchestration layer (LangGraph, LCEL, or plain Python) coordinates the agents. In LangGraph, workflows are defined in code as graphs of nodes and edges and can be inspected visually in LangGraph Studio; LCEL composes simpler chains of runnables. The layer handles sequencing, parallelism, and context sharing. For more information, refer to the LangGraph documentation.

- Tool Routing: Agents draw on a shared toolbox. Through LangChain’s tool-calling interface, tools (APIs, search, code execution) are bound to a model, which decides at run time which tool to call for the task at hand. This keeps the system flexible and avoids duplication; for example, every agent can use the same document-search tool when needed.

- Asynchronous Execution: LangChain supports concurrent execution. Runnables expose async and batch methods (such as ainvoke and abatch), and LangGraph can run independent branches in parallel, so multiple agents can work on different subtasks (e.g., multi-document retrieval or simultaneous user queries) at once, improving throughput for large workloads.

- Context Sharing & Memory: Agents share a persistent context. Using LangGraph state or LCEL, agents can read and update shared memory (user intent, previous answers, etc.) to coordinate smoothly. LangChain provides memory modules (buffer, vector, summarization) so agents can retain long-term history and user preferences.

- Error Recovery & Evaluation: Workflows can include fail-safes. Evaluator Agents check outputs (for correctness or confidence) and can reroute tasks on failure. Fallback flows may retry, switch agents, or flag a human if needed, making the system more robust.

- Model & Ecosystem Agnostic: LangChain works with many LLM providers (GPT-5, Gemini, Claude, etc.), letting enterprises avoid vendor lock-in. It also plugs into a wide range of services: vector databases (Pinecone, ChromaDB) for memory, search engines, OCR, Python runtimes, and more. See the official LangChain integrations for hundreds of connectors.

- Scalable APIs: LangChain offers primitives such as AgentExecutor (the classic single-agent runtime) and LangGraph’s StateGraph (graphs of nodes with shared state and checkpointed memory) to take agents from prototype to production. Agents and tools can be exposed as REST services via LangServe, which simplifies deployment in cloud or on-premises containers.
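The tool-routing and asynchronous-execution ideas above can be illustrated with a shared tool registry and Python’s asyncio. This is a plain-Python sketch rather than LangChain’s tool-calling API: the two tools are hypothetical stubs, and the plan is hard-coded where a real agent would let the model choose which tools to call.

```python
import asyncio
from typing import Awaitable, Callable

# Shared toolbox that every agent consults by name (hypothetical tools below).
TOOLS: dict[str, Callable[[str], Awaitable[str]]] = {}


def tool(name: str):
    """Register an async function in the shared toolbox under `name`."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("doc_search")
async def doc_search(query: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for a vector-store lookup
    return f"docs matching '{query}'"


@tool("crm_lookup")
async def crm_lookup(customer_id: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for a CRM API call
    return f"record for customer {customer_id}"


async def run_plan(plan: list[tuple[str, str]]) -> list[str]:
    """Run every (tool_name, argument) step concurrently via the shared registry."""
    calls = [TOOLS[name](arg) for name, arg in plan]
    # gather returns results in the same order the calls were passed in.
    return list(await asyncio.gather(*calls))


results = asyncio.run(run_plan([("doc_search", "refund policy"), ("crm_lookup", "42")]))
print(results)
```

Because both stubs only await, the two steps overlap in time; with real network-bound tools, this is where the throughput gain comes from.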

LangChain’s orchestration and tooling set it apart from simpler frameworks. Its declarative graphs (LangGraph) and procedural executors give architects the flexibility to tailor agent workflows to complex enterprise needs.
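To make the graph idea concrete, here is a tiny state-machine orchestrator in plain Python. Nodes read and update a shared state dictionary, and a conditional edge after the evaluator either finishes, retries the executor, or escalates to a human once a retry budget is spent. It mirrors the Planner/Executor/Evaluator roles and fallback flows described above, but it is not LangGraph’s API; the node logic, the MAX_RETRIES value, and the simulated first-attempt failure are hypothetical.

```python
from typing import Callable, Optional

State = dict
MAX_RETRIES = 2  # hypothetical retry budget before human escalation


def planner(state: State) -> State:
    # Break the goal into subtasks (hard-coded here for illustration).
    state["subtasks"] = [f"step {i} of {state['goal']}" for i in (1, 2)]
    return state


def executor(state: State) -> State:
    # Simulated flaky executor: fails on the first attempt, succeeds afterwards.
    state["attempts"] = state.get("attempts", 0) + 1
    ok = state["attempts"] >= 2
    state["output"] = [f"done: {t}" for t in state["subtasks"]] if ok else None
    return state


def evaluator(state: State) -> State:
    # QA check: output must exist and cover every subtask.
    out = state.get("output")
    state["passed"] = out is not None and len(out) == len(state["subtasks"])
    return state


def human_review(state: State) -> State:
    state["escalated"] = True
    return state


NODES: dict[str, Callable[[State], State]] = {
    "planner": planner, "executor": executor,
    "evaluator": evaluator, "human_review": human_review,
}


def next_node(current: str, state: State) -> Optional[str]:
    """Graph edges; the edge leaving the evaluator is conditional."""
    if current == "planner":
        return "executor"
    if current == "executor":
        return "evaluator"
    if current == "evaluator":
        if state["passed"]:
            return None  # finished
        return "executor" if state["attempts"] <= MAX_RETRIES else "human_review"
    return None  # human_review is terminal


def run_graph(goal: str, entry: str = "planner") -> State:
    state: State = {"goal": goal}
    node: Optional[str] = entry
    while node is not None:
        state = NODES[node](state)
        node = next_node(node, state)
    return state


final = run_graph("quarterly report")
print(final["attempts"], final["passed"])
```

Keeping the routing logic in one edge function, separate from the nodes, is what makes such workflows easy to visualize and change.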

Integration Patterns with Enterprise Systems

LangChain agents often need to work with existing enterprise software. Common integration patterns include:

- CRM & Ticketing Systems: Agents connect to Salesforce, Zendesk, or Dynamics via APIs. For example, a support agent might first query a customer record (via a Salesforce connector) before answering a ticket. Because LangChain’s tools are extensible, any REST or SOAP API can be wrapped as a “tool” that an agent invokes, and the integrations catalog already covers some of these platforms. Refer to the Salesforce integration guide for more details.

- ERP & Databases: Agents can call ERP backends (SAP, Oracle, NetSuite) or SQL databases for live business data. LangChain’s plugin ecosystem includes connectors for Snowflake and Databricks. For instance, a financial agent could run a SQL query on the company warehouse, retrieve the result, and feed it to an LLM to summarize. Vector databases like Pinecone or Chroma can store embeddings of internal docs, enabling RAG where agents “remember” past records.

- Cloud Services & APIs: LangChain works well with cloud platforms. Agents can invoke AWS Lambda or Azure Functions for on-demand code execution, or call cloud ML APIs. LLMs from Azure OpenAI or Google Vertex AI are supported through dedicated integrations. For example, an agent might use Amazon Comprehend or Google Cloud Vision as tools within a workflow.

- Enterprise Apps & Data Lakes: Agents integrate with platforms like Microsoft Graph, Tableau, or ERP data lakes. A retail agent, for example, might check real-time inventory via an ERP API and then recommend products. LangChain’s Snowflake and Databricks plugins allow agents to fetch large-scale data for analytics or RAG pipelines.

- Unified Resources: LangChain’s official resources guide these patterns. The GitHub repo and Docs include templates for connecting LLM-driven agents to CRMs, ERPs, databases, and more. Moreover, its Integrations page highlights hundreds of ready-made connectors. Enterprises can also expose agents via LangServe or Kubernetes, treating them as internal microservices.
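The financial-agent example above (query the warehouse, then summarize) can be sketched with Python’s built-in sqlite3 module standing in for Snowflake or Databricks. The table, rows, and the run_sql_tool and build_summary_prompt helpers are hypothetical; the LLM call itself is omitted, and a real deployment would rely on a read-only, access-controlled connection rather than the simple SELECT check shown here.

```python
import sqlite3

# In-memory database standing in for the company warehouse (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("EMEA", "Q1", 120.0), ("EMEA", "Q2", 150.0), ("APAC", "Q1", 90.0), ("APAC", "Q2", 80.0)],
)


def run_sql_tool(sql: str, params: tuple = ()) -> list[tuple]:
    """Hypothetical SQL tool: only SELECT statements are let through."""
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("read-only tool: SELECT statements only")
    return conn.execute(sql, params).fetchall()


def build_summary_prompt(question: str, rows: list[tuple]) -> str:
    """Format query results as context for an LLM (the LLM call is omitted)."""
    table = "\n".join(", ".join(str(v) for v in row) for row in rows)
    return f"Question: {question}\nData:\n{table}\nSummarize the trend in one sentence."


rows = run_sql_tool(
    "SELECT region, SUM(revenue) FROM sales WHERE quarter IN ('Q1', 'Q2') "
    "GROUP BY region ORDER BY region"
)
prompt = build_summary_prompt("How did revenue compare by region in H1?", rows)
print(prompt)
```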

By plugging into existing enterprise systems, LangChain agents act as intelligent intermediaries – fetching data, triggering transactions, and providing natural-language interfaces on top of legacy systems. This accelerates automation and maintains compliance with company workflows.
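The point that any REST API can be wrapped as a tool can be sketched with the standard library alone. The Tool dataclass, the /customers/{id} endpoint, and the injectable fetch function are hypothetical assumptions, not LangChain’s or Salesforce’s API; the transport is injectable so the tool can be exercised without network access.

```python
import json
import urllib.parse
import urllib.request
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str  # what the LLM reads when deciding whether to call the tool
    func: Callable[[str], dict]


def default_fetch(req: urllib.request.Request) -> bytes:
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read()


def make_crm_lookup_tool(base_url: str, token: str,
                         fetch: Callable[[urllib.request.Request], bytes] = default_fetch) -> Tool:
    """Wrap a hypothetical GET /customers/{id} endpoint as an agent tool."""
    def lookup(customer_id: str) -> dict:
        url = f"{base_url}/customers/{urllib.parse.quote(customer_id)}"
        req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
        return json.loads(fetch(req))
    return Tool("crm_lookup", "Fetch a customer record by customer ID.", lookup)


# Fake transport used instead of a live CRM; it echoes what was requested.
def fake_fetch(req: urllib.request.Request) -> bytes:
    return json.dumps({"url": req.full_url,
                       "auth": req.get_header("Authorization"),
                       "tier": "gold"}).encode()


crm = make_crm_lookup_tool("https://crm.example.com/api", "TOKEN", fetch=fake_fetch)
record = crm.func("C-42")
print(record)
```

The description field matters as much as the code: it is what the model sees when choosing among tools.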

Core LangChain Features

LangChain’s rich feature set underpins all these applications. Key capabilities include:

- Memory Modules: LangChain offers multiple memory types. A buffer memory holds recent conversation history, vector memory (via Pinecone/ChromaDB) stores long-term knowledge, and summarization memory compresses extended interactions. This means agents can personalize responses (remember user preferences) and avoid losing context across turns. Compliance with regulations such as GDPR or HIPAA depends on how these memory stores are hosted, retained, and secured, so it has to be designed into the deployment.

- Retrieval-Augmented Generation (RAG): LangChain makes RAG a first-class citizen. Agents can seamlessly retrieve relevant documents or database entries to ground their answers. For example, a support agent might run a semantic search over product manuals before crafting a response. As one of our guides notes, LangChain excels at designing “RAG systems” and dynamic agentic workflows.

- Evaluation & Feedback: Workflows can include Evaluator Agents, and LangSmith provides evaluation tooling. Agents can self-critique: an output is automatically checked for accuracy, completeness, or adherence to rules, and a corrective loop is triggered if it fails. This QA step is crucial for enterprise reliability (e.g., ensuring a compliance agent doesn’t hallucinate).

- Multi-Model Support: LangChain abstracts over LLM providers. It can mix and match models (GPT-5, Claude 4, Meta Llama, etc.) in a single workflow. This lets teams balance cost and performance by routing specific tasks to the most suitable model.

- Orchestration Graphs: LangGraph is a graph-based orchestrator in which developers lay out nodes (agents) and edges (task flows). Alternatively, the LangChain Expression Language (LCEL) provides a declarative way to compose sequences. Because these graphs are defined in code, they can be visualized (e.g., in LangGraph Studio) and versioned, making complex workflows transparent and maintainable.

- Observability & Evals (LangSmith): For production systems, LangSmith provides tracing, logs, metrics, and automated evaluations that help teams monitor agent performance and refine prompts or logic over time.
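The buffer-plus-summary memory described above can be sketched as a small class: recent turns are kept verbatim, and older turns are folded into a running summary. This is not LangChain’s memory API; the summarize function is a hypothetical placeholder that simply concatenates and truncates, where a real system would ask an LLM to compress the history.

```python
from collections import deque


def summarize(summary: str, turn: str, max_chars: int = 200) -> str:
    """Hypothetical placeholder summarizer: append the turn, keep the newest characters."""
    combined = f"{summary} | {turn}" if summary else turn
    return combined[-max_chars:]


class SummaryBufferMemory:
    """Keep the last `window` turns verbatim and fold older ones into a summary."""

    def __init__(self, window: int = 3):
        self.window = window
        self.buffer: deque = deque()
        self.summary = ""

    def add(self, role: str, text: str) -> None:
        self.buffer.append(f"{role}: {text}")
        while len(self.buffer) > self.window:
            # The oldest turn leaves the buffer and is compressed into the summary.
            self.summary = summarize(self.summary, self.buffer.popleft())

    def context(self) -> str:
        """Text an agent would prepend to its next prompt."""
        recent = "\n".join(self.buffer)
        return f"Summary: {self.summary}\n{recent}" if self.summary else recent


memory = SummaryBufferMemory(window=2)
memory.add("user", "I prefer email updates.")
memory.add("agent", "Noted, email it is.")
memory.add("user", "What is my refund status?")
print(memory.context())
```

The window size is the main tuning knob: a larger window keeps more exact wording at the cost of longer prompts.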
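The retrieve-then-generate flow behind RAG can be illustrated with a toy retriever. As a deliberately simplified assumption, bag-of-words cosine similarity stands in for learned embeddings and a vector database such as Pinecone or ChromaDB, and the documents are made up; the result is the grounded prompt that would be passed to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical product-manual snippets standing in for a vector store.
DOCS = [
    "To reset the router, hold the power button for ten seconds.",
    "Warranty claims require the original proof of purchase.",
    "Firmware updates are installed automatically overnight.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def grounded_prompt(query: str) -> str:
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(grounded_prompt("How do I reset my router?"))
```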

In short, LangChain bundles everything needed for enterprise-grade agents: Memory for context, RAG for knowledge, Tools for action, Evaluators for quality, and Orchestration for structure. Its official docs and repo provide extensive guidance on each feature.
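Routing tasks to different models to balance cost and performance, as described under Multi-Model Support, can be sketched as a lookup table plus a budget check. The model names, per-token prices, and task tiers below are hypothetical placeholders, not real model IDs or pricing; in a LangChain application each entry would map to a configured chat model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    name: str
    cost_per_1k_tokens: float  # hypothetical price in USD
    quality: int  # hypothetical capability tier, higher is stronger


# Hypothetical catalog; names are placeholders, not real model IDs.
CATALOG = [
    ModelSpec("small-fast-model", 0.0005, 1),
    ModelSpec("mid-tier-model", 0.003, 2),
    ModelSpec("frontier-model", 0.015, 3),
]

# Minimum capability tier each task type needs (assumed policy).
TASK_TIERS = {"classify": 1, "summarize": 2, "legal_review": 3}


def pick_model(task: str, est_tokens: int, budget_usd: float) -> ModelSpec:
    """Cheapest model that meets the task's tier and fits the budget."""
    needed = TASK_TIERS.get(task, 3)  # unknown tasks default to the strongest tier
    for spec in sorted(CATALOG, key=lambda m: m.cost_per_1k_tokens):
        cost = spec.cost_per_1k_tokens * est_tokens / 1000
        if spec.quality >= needed and cost <= budget_usd:
            return spec
    raise ValueError(f"no model fits task={task!r} within ${budget_usd}")


print(pick_model("classify", 2_000, 0.01).name)
print(pick_model("legal_review", 10_000, 1.00).name)
```

Defaulting unknown tasks to the strongest tier trades cost for safety; a team optimizing for spend might invert that default.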

Conclusion

LangChain is rapidly becoming the backbone of enterprise autonomous agents. By providing a modular multi-agent architecture, rich integrations, and built-in intelligence (memory, RAG, evaluation), it lets businesses build AI-powered solutions that can plan, act, and adapt on their own. Today’s enterprises use LangChain to automate support, analytics, HR processes, compliance reviews, and more, with each agentic system tailored to domain needs. As LangChain continues to evolve, organizations that use it well stand to gain a lasting competitive edge in building the next generation of AI-driven workflows.

FAQs

1. How are large enterprises integrating LangChain into legacy systems?

Enterprises often wrap LangChain-powered agents with APIs that interface with legacy platforms like SAP, Oracle, or mainframes. By leveraging adapters and middleware, LangChain agents can ingest data from older systems, automate repeatable processes, and even initiate actions via RPA tools — effectively modernizing operations without full-stack replacement.

2. What enterprise problems do LangChain-based agents solve most effectively?

LangChain-powered agents excel at handling complex, context-driven workflows such as customer service triage, internal helpdesk support, contract summarization, and compliance checks. Enterprises deploy them to reduce reliance on human intervention, speed up response times, and minimize errors in high-volume, repetitive tasks that traditionally drain operational resources.

3. How does LangChain support enterprise-scale AI governance and compliance?

LangChain can be integrated with enterprise governance frameworks by embedding guardrails, logging, and data lineage tracking. Businesses often combine LangChain with tools like Microsoft Purview (formerly Azure Purview) or AWS Audit Manager to maintain compliance, enforce access controls, and ensure agent decisions are transparent, reversible, and aligned with internal risk policies.
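The guardrails-plus-logging pattern in this answer can be sketched as a wrapper around agent tool calls: an allowlist blocks unapproved actions, and every attempt, allowed or not, is appended to an audit trail. The tool names, log fields, and the governed helper are hypothetical; a production system would write to a tamper-evident store feeding a governance service rather than an in-memory list.

```python
import json
import time
from typing import Any, Callable

AUDIT_LOG: list[str] = []  # stand-in for an append-only audit store
ALLOWED_TOOLS = {"read_policy", "create_ticket"}  # hypothetical allowlist


def governed(tool_name: str, agent_id: str, fn: Callable[..., Any], **kwargs: Any) -> Any:
    """Run a tool call only if allowlisted, recording every attempt as JSON."""
    allowed = tool_name in ALLOWED_TOOLS
    entry = {"ts": time.time(), "agent": agent_id, "tool": tool_name,
             "args": kwargs, "allowed": allowed}
    try:
        if not allowed:
            raise PermissionError(f"{tool_name} is not on the allowlist")
        result = fn(**kwargs)
        entry["status"] = "ok"
        return result
    except Exception as exc:
        entry["status"] = f"error: {exc}"
        raise
    finally:
        # Runs on success and failure alike, so no attempt goes unlogged.
        AUDIT_LOG.append(json.dumps(entry))


def create_ticket(summary: str) -> str:
    return f"TICKET-1: {summary}"


print(governed("create_ticket", "hr-agent", create_ticket, summary="Laptop request"))
try:
    governed("delete_user", "hr-agent", lambda user: None, user="u1")
except PermissionError as err:
    print("blocked:", err)
print(len(AUDIT_LOG))
```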

4. What role does LangChain play in enterprise-wide agent orchestration?

LangChain acts as a coordination layer between multiple tools, APIs, and data sources, enabling enterprises to create composable autonomous agents. These agents can reason across departments — for example, combining CRM, HR, and finance data to initiate workflows or decisions — supporting broader digital transformation and cross-functional automation initiatives.

5. How do enterprises measure ROI from LangChain-powered autonomous agents?

ROI is typically measured through KPIs such as task automation rate, error reduction, FTE (full-time equivalent) savings, and time-to-resolution improvements. Tracing and monitoring tools such as LangSmith can feed these metrics into analytics dashboards, so enterprises can quantify agent performance, cost impact, and scalability, turning AI agents from experimental tools into measurable, strategic assets.
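Three of the KPIs named above reduce to simple arithmetic. The sketch below computes automation rate, time-to-resolution improvement, and FTE savings from a month of ticket data; every input, including the 160-hour FTE month, is a hypothetical value for illustration, not a benchmark.

```python
from dataclasses import dataclass


@dataclass
class MonthlyStats:
    # All values are hypothetical inputs, not benchmarks.
    total_tickets: int
    auto_resolved: int  # closed by agents without human touch
    baseline_effort_min: float  # human minutes per ticket before agents
    current_effort_min: float  # human minutes per ticket with agents
    baseline_ttr_hours: float  # average time-to-resolution before agents
    current_ttr_hours: float  # average time-to-resolution with agents
    hours_per_fte: float = 160.0  # assumed working hours in one FTE-month


def roi_kpis(s: MonthlyStats) -> dict[str, float]:
    hours_saved = s.total_tickets * (s.baseline_effort_min - s.current_effort_min) / 60
    return {
        "automation_rate": s.auto_resolved / s.total_tickets,
        "ttr_improvement": 1 - s.current_ttr_hours / s.baseline_ttr_hours,
        "fte_saved": hours_saved / s.hours_per_fte,
    }


kpis = roi_kpis(MonthlyStats(total_tickets=4_800, auto_resolved=1_920,
                             baseline_effort_min=12.0, current_effort_min=8.0,
                             baseline_ttr_hours=24.0, current_ttr_hours=6.0))
print({name: round(value, 2) for name, value in kpis.items()})
```

With these made-up inputs, 4 minutes saved on each of 4,800 tickets is 320 hours, or two FTE-months.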