The Role of LangChain in Multi-Agent Systems (2025 Architecture)

Multi-Agent Orchestration with LangChain

As AI development accelerates in 2025, the concept of agentic AI is taking center stage. A new wave of architectures has emerged where multiple autonomous agents collaborate, delegate tasks, and adapt to complex workflows. At the heart of this evolution lies LangChain, a powerful framework redefining the way developers build, orchestrate, and scale multi-agent systems. This blog explores the modern role of LangChain in multi-agent systems, its tooling, architecture, and how it compares with alternatives like AutoGen, while also examining its integration with OpenAI agents and the larger LangChain ecosystem in 2025.

Understanding LangChain's Place in the Multi-Agent AI Framework

The term "multi-agent system" refers to an ecosystem where several AI agents, each with defined capabilities and goals, work together to solve a problem. These agents may interact with each other, divide tasks, share context, or compete to optimize solutions. LangChain offers a robust foundation for building such agentic systems, thanks to its composability, tooling integrations, and native support for orchestration. Developers interested in applying these techniques in real-world applications may also find our Building Smarter AI Applications with LangChain (2025) post insightful.

The modern LangChain multi-agent system is built upon the idea of chaining agents like modular functions, each with its own memory, toolset, and autonomy level. These chains can be orchestrated synchronously or asynchronously depending on the workflow requirements.
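To make the "agents as modular functions" idea concrete, here is a minimal, framework-agnostic sketch. It does not use LangChain's API; the `Agent` type, the two stand-in agents, and the `run_chain` and `run_parallel` helpers are all hypothetical names chosen for illustration.

```python
import asyncio
from typing import Awaitable, Callable, List

# Hypothetical agent signature: text in, text out.
Agent = Callable[[str], Awaitable[str]]


async def first_sentence(text: str) -> str:
    # Stand-in for an LLM-backed summarizer: keeps only the first sentence.
    return text.split(".")[0]


async def shouter(text: str) -> str:
    # Stand-in for a second agent that post-processes the text.
    return text.upper()


async def run_chain(agents: List[Agent], user_input: str) -> str:
    """Run agents one after another, feeding each output to the next."""
    result = user_input
    for agent in agents:
        result = await agent(result)
    return result


async def run_parallel(agents: List[Agent], user_input: str) -> List[str]:
    """Run independent agents concurrently on the same input."""
    return list(await asyncio.gather(*(agent(user_input) for agent in agents)))


chained = asyncio.run(
    run_chain([first_sentence, shouter], "Agents are modular. They also scale.")
)
fanned_out = asyncio.run(run_parallel([first_sentence, shouter], "hello"))
```

Sequential chaining suits steps that depend on each other, while the concurrent variant fits independent subtasks that can share the same input.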

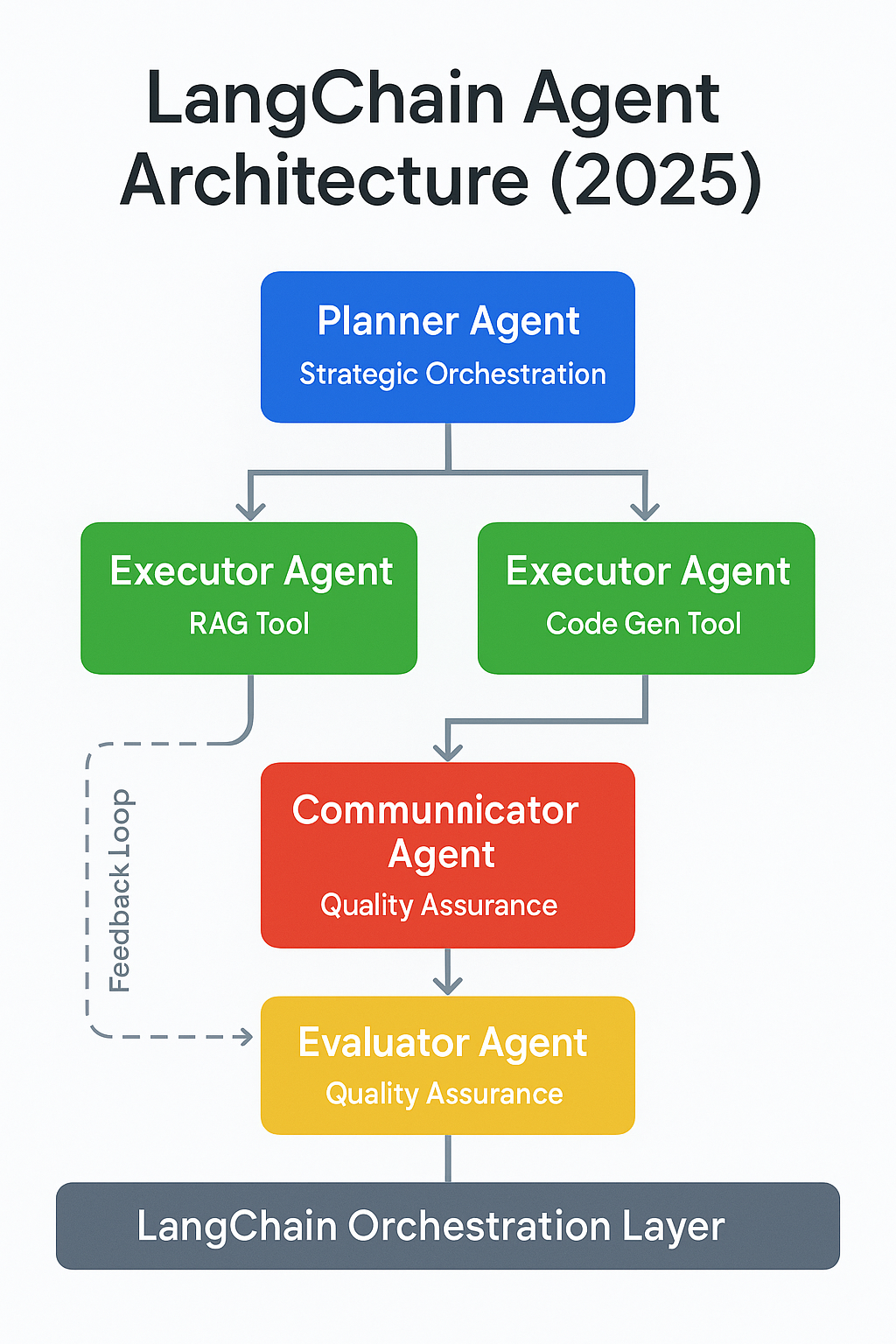

LangChain Agent Architecture in 2025

LangChain's agent architecture in 2025 has evolved into a modular, layered system where agents specialize in planning, execution, communication, and evaluation. Each agent type is decoupled, which allows for greater flexibility and scalability.

Core Agent Types:

- Planner Agent: Acts as the strategic brain of the system. It breaks down user intent or high-level goals into a sequence of subtasks. It determines the flow of logic, selects the required tools or agents, and dynamically adapts based on context or output.

- Executor Agents: These agents carry out specific subtasks defined by the Planner. Examples include:

  - A RAG Executor that queries a vector database to retrieve documents.

  - A Code Generator that writes and debugs scripts.

  - A Translator that converts content between languages or formats.

- Communicator Agent: Ensures smooth handoff between agents. It reformats outputs into compatible inputs for downstream agents, tracks conversational context, and ensures intent preservation across language models.

- Evaluator Agent: Conducts quality assurance on outputs. It evaluates results based on correctness, confidence, or relevance. If a failure or ambiguity is detected, it may reroute the task back to the planner or reassign it to another executor.

These agents work together under a unified orchestration layer.
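The division of labour between the four roles can be sketched in plain Python. This is an illustrative assumption about how the pieces might fit together, not LangChain code: `Subtask`, `planner`, `EXECUTORS`, `communicator`, `evaluator`, and `run` are hypothetical names, and the executors are trivial stand-ins for LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Subtask:
    executor: str  # which executor should handle this subtask
    payload: str   # the input handed to that executor


def planner(goal: str) -> List[Subtask]:
    # Hypothetical fixed plan; a real planner would ask an LLM to decompose the goal.
    return [Subtask("retrieve", goal), Subtask("translate", goal)]


EXECUTORS: Dict[str, Callable[[str], str]] = {
    "retrieve": lambda payload: f"  docs about {payload}  ",
    "translate": lambda payload: payload.upper(),  # stand-in for real translation
}


def communicator(output: str) -> str:
    # Normalizes executor output so downstream agents get a consistent format.
    return output.strip()


def evaluator(output: str) -> bool:
    # Toy quality check: accept any non-empty result.
    return len(output) > 0


def run(goal: str) -> List[str]:
    results = []
    for task in planner(goal):
        output = communicator(EXECUTORS[task.executor](task.payload))
        if not evaluator(output):
            # A fuller system might reroute to the planner instead of failing.
            raise ValueError(f"{task.executor} produced an unusable result")
        results.append(output)
    return results
```

Keeping each role behind its own function is what makes the roles swappable: a different planner or evaluator can be dropped in without touching the executors.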

Architectural Diagram:

LangChain’s orchestration layer connects these agents, enabling smooth collaboration, parallel execution, and fault recovery. Developers can use LangChain’s built-in MultiAgentExecutor or integrate runtimes like CrewAI and Microsoft's AutoGen to deploy these agents in production workflows. If you're looking to integrate such architecture into enterprise-grade use cases, our main services page showcases how we help teams build scalable AI solutions across domains.

LangChain Multi-Agent Orchestration

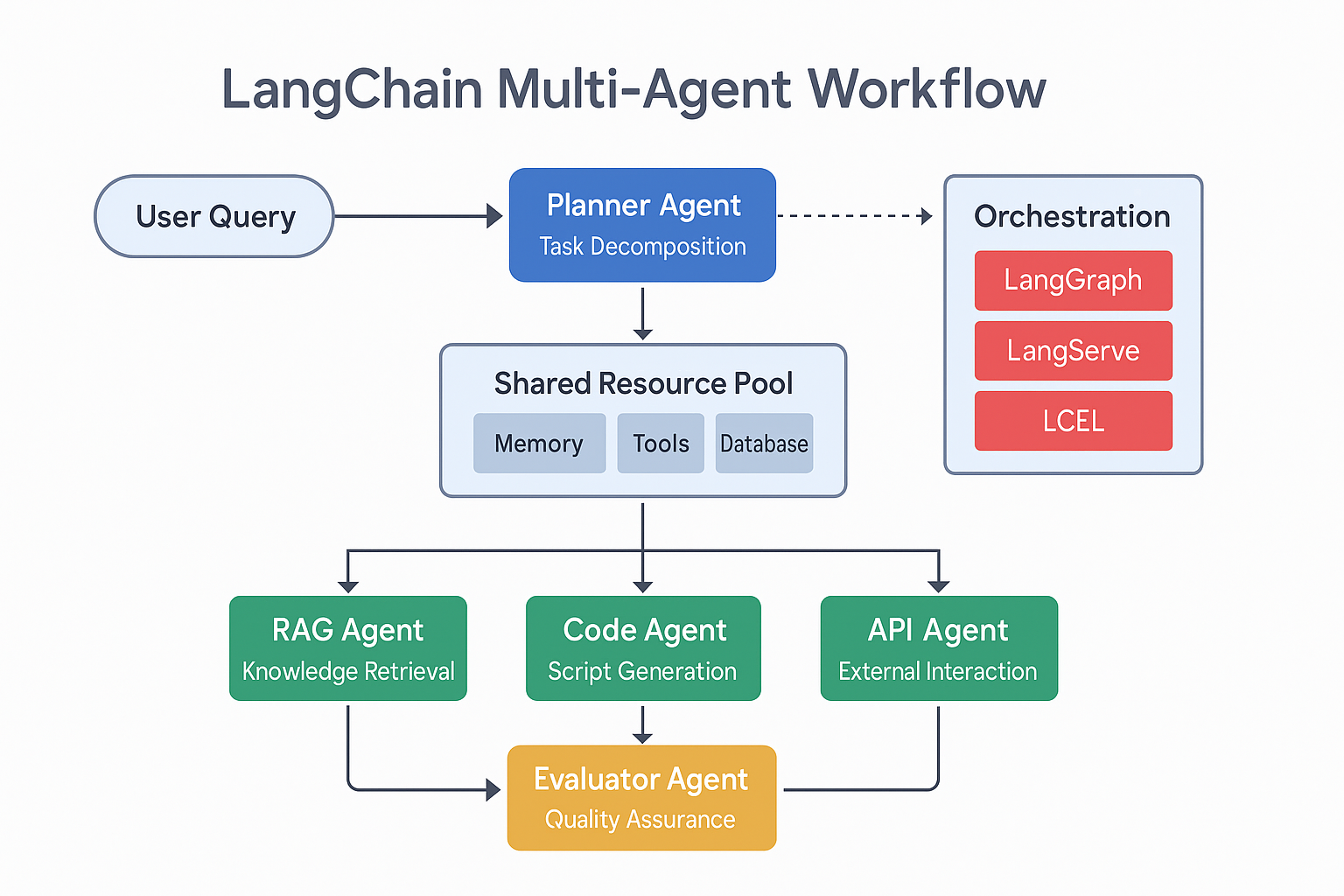

One of the defining advances in LangChain’s 2025 evolution is its sophisticated multi-agent orchestration engine. This orchestration layer acts as the conductor, coordinating how agents interact, sequence their tasks, share context, and respond to failures, all within a structured but flexible framework.

At its core, LangChain allows developers to declaratively design workflows, specifying which agents are responsible for what, how they communicate, and which tools they can access. These designs can be graph-based (via LangGraph), declarative (using LCEL), or programmatic (via the MultiAgentExecutor interface).
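As a rough illustration of the graph-based style, the sketch below models a workflow as named nodes plus edges, with a shared state dictionary passed from node to node. It is deliberately written in plain Python rather than against LangGraph's actual API; `NODES`, `EDGES`, `run_graph`, and the three node functions are hypothetical.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]
Node = Callable[[State], State]


def plan(state: State) -> State:
    # Decide which steps to run; a real node would call an LLM here.
    return {**state, "plan": ["search"]}


def execute(state: State) -> State:
    first_step = state["plan"][0]
    return {**state, "result": f"ran {first_step}"}


def review(state: State) -> State:
    # Toy review step: approve if the executor reported that it ran something.
    return {**state, "approved": "ran" in state["result"]}


# The workflow is data: which nodes exist and which node follows which.
NODES: Dict[str, Node] = {"plan": plan, "execute": execute, "review": review}
EDGES: Dict[str, Optional[str]] = {"plan": "execute", "execute": "review", "review": None}


def run_graph(start: str, state: State) -> State:
    """Walk the graph from `start`, threading the shared state through each node."""
    current: Optional[str] = start
    while current is not None:
        state = NODES[current](state)
        current = EDGES[current]
    return state


final_state = run_graph("plan", {"goal": "answer a question"})
```

Because the wiring lives in `EDGES` rather than in the node bodies, reordering or inserting a step is a change to data, not to agent logic.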

Key Orchestration Features:

- Tool Routing: Agents can dynamically choose from a shared pool of tools based on their current task and context. This shared toolbox approach ensures flexibility, reusability, and minimizes redundancy across agents.

- Dynamic Context Sharing: Using LangGraph or LCEL, agents can access and update shared memory. This persistent context can include user intent, intermediate results, and conversation history, enabling better coordination and less repeated work.

- Asynchronous Execution: LangChain supports concurrent task execution, allowing multiple agents to work in parallel. This drastically improves throughput in multi-step workflows, especially for tasks like multi-document retrieval, large-scale evaluation, or simultaneous user interactions.

- Error Recovery Flows: Robust fallback mechanisms allow agents to retry failed tasks, escalate them to human-in-the-loop processes, or reassign them to alternative agents. Evaluator Agents often play a key role here by detecting anomalies or degraded outputs.
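The asynchronous execution and error recovery features can be sketched together with the standard library's `asyncio`. This is an assumption-laden illustration, not LangChain's implementation: `flaky_agent`, `fallback_agent`, and `run_with_recovery` are hypothetical, and failures are simulated.

```python
import asyncio
from typing import Dict, List


async def flaky_agent(task: str, attempts: Dict[str, int]) -> str:
    # Simulated agent: "summarize" fails once, "translate" always fails.
    attempts[task] = attempts.get(task, 0) + 1
    if task == "summarize" and attempts[task] < 2:
        raise RuntimeError("transient failure")
    if task == "translate":
        raise RuntimeError("permanent failure")
    return f"{task}: done"


async def fallback_agent(task: str) -> str:
    # Stand-in for a human-in-the-loop or alternative-agent escalation path.
    return f"{task}: escalated to human review"


async def run_with_recovery(task: str, attempts: Dict[str, int], max_retries: int = 2) -> str:
    """Retry the primary agent, then fall back if every attempt fails."""
    for _ in range(max_retries):
        try:
            return await flaky_agent(task, attempts)
        except RuntimeError:
            continue
    return await fallback_agent(task)


async def main() -> List[str]:
    attempts: Dict[str, int] = {}
    tasks = ["retrieve", "summarize", "translate"]
    # gather runs the three recovery-wrapped tasks concurrently and keeps input order.
    return list(await asyncio.gather(*(run_with_recovery(t, attempts) for t in tasks)))


results = asyncio.run(main())
```

Wrapping each task in its own recovery coroutine means one failing agent does not block or cancel the others.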

Together, these features elevate LangChain beyond linear chains or static flows. Developers can now create resilient, adaptive AI ecosystems where agents learn from failures, share insights in real time, and continuously optimize outcomes.

LangChain Agent vs AutoGen

A frequent point of discussion in the AI developer community is the comparison between LangChain agents and AutoGen (by Microsoft). While both frameworks enable multi-agent interactions, their philosophies and tooling differ.

| Feature | LangChain | AutoGen |
|---|---|---|
| Modularity | High | Moderate |
| Tooling Ecosystem | Extensive (LangGraph, LangServe, LCEL) | Minimal built-in tools |
| Language Model Agnosticism | Yes | Mostly tied to OpenAI APIs |
| Orchestration Control | Full programmatic + declarative | Python script-based sequences |
| Ecosystem Integration | Vertex AI, Pinecone, ChromaDB, OpenAI, Cohere | Primarily OpenAI |

LangChain offers a richer multi-agent AI framework, especially for production-ready systems requiring granular control, memory management, and tool integration. For those just starting out, Agentic AI for Beginners gives a great overview of foundational principles and frameworks.

Building AI Agents with LangChain

LangChain makes building AI agents in 2025 highly scalable and production-ready. Its modular architecture and comprehensive toolset enable developers to build, test, and deploy agents tailored to specific domains, such as data analysis, customer support, code generation, and autonomous research.

Core Building Blocks for LangChain Agents

LangChain agents are constructed by combining several primitives:

- Language Models (LLMs): Power the agent’s reasoning and language understanding. LangChain supports OpenAI, Anthropic, Cohere, Google Vertex AI, and Hugging Face models.

- Memory Modules: Let agents remember conversation history, tool outputs, or long-term user preferences. LangChain offers short-term buffer memory, vector memory (e.g., with ChromaDB), and summary memory.

- Tool Interfaces: Extend the agent's abilities beyond text by giving it access to APIs, search engines, Python interpreters, SQL engines, or custom business logic.

- Agent Executors: Coordinate planning and execution—either as a standalone agent or part of a multi-agent system.

Using a few lines of code, developers can bootstrap a tool-using agent:

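```python
from langchain.agents import AgentType, initialize_agent
from langchain.memory import ConversationBufferMemory

# calculator, search_api, and openai_model are assumed to be defined elsewhere:
# two LangChain tools and an LLM instance.
# Note: initialize_agent is a legacy helper in recent LangChain releases.
agent = initialize_agent(
    tools=[calculator, search_api],
    llm=openai_model,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    memory=ConversationBufferMemory(),
)
```

This agent can now reason over user queries, call tools dynamically, and maintain context, all without hardcoded logic.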

Types of Agents You Can Build with LangChain

LangChain supports various agent patterns, from single tool-using agents to the planner, executor, communicator, and evaluator roles described earlier.

Why LangChain?

LangChain’s rise in production environments is backed by adoption metrics and developer feedback:

- 60% of AI developers working on autonomous agents use LangChain as their primary orchestration layer, according to a 2025 State of Agentic AI survey.

- LangChain saw a 220% increase in GitHub stars and a 300% increase in npm and PyPI downloads from Q1 2024 to Q1 2025.

- 40% of LangChain users now integrate it with vector databases (like Pinecone, ChromaDB) to power long-term memory in agents.

- Enterprises deploying LangChain in customer support workflows report a 35–45% increase in resolution rates using multi-agent designs over single-agent bots.

These stats suggest that LangChain is trusted for enterprise-scale deployment.

Modular APIs to Scale Agents

LangChain's abstractions make it easy to go from single-agent prototypes to multi-agent production systems:

- AgentExecutor: Runs a single agent with tools and memory.

- MultiAgentExecutor: Coordinates a fleet of agents across subtasks.

- RunnableAgent: LCEL-compliant interface for declarative chaining.

- LangGraph AgentNode: Graph-based node that encapsulates agent logic, memory, and control flow.

Developers can plug in external tools, wrap agents into APIs using LangServe, and deploy them to serverless or containerized environments seamlessly.

Best Practices When Building LangChain Agents

- Choose the right memory: Use vector memory for long documents, summary memory for cost optimization, and buffer memory for short conversations.

- Limit tool usage: Prevent agent confusion or cost sprawl by defining tool use policies (e.g., only use search when confidence is low).

- Evaluate frequently: Integrate Evaluator Agents to monitor response quality and route failures.

- Start small, scale later: Begin with simple single-agent chains and evolve to multi-agent structures as complexity grows.
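The "limit tool usage" practice can be expressed as a small policy object that the agent loop consults before calling an expensive tool. The sketch below is a hypothetical illustration: `ToolPolicy`, its threshold, and its call budget are assumptions, not part of LangChain.

```python
from dataclasses import dataclass


@dataclass
class ToolPolicy:
    """Hypothetical policy: search only when confidence is low and budget remains."""

    confidence_threshold: float = 0.7  # assumed cut-off; tune per application
    max_calls: int = 3                 # assumed per-conversation search budget
    calls_made: int = 0

    def allow_search(self, confidence: float) -> bool:
        if self.calls_made >= self.max_calls:
            return False  # budget exhausted, avoid cost sprawl
        if confidence >= self.confidence_threshold:
            return False  # the model is confident enough to answer directly
        self.calls_made += 1
        return True


policy = ToolPolicy(max_calls=2)
decisions = [policy.allow_search(c) for c in (0.9, 0.3, 0.4, 0.1)]
```

In this run the first query is answered without search because confidence is high, the next two trigger a search, and the last is refused because the budget of two calls is spent.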

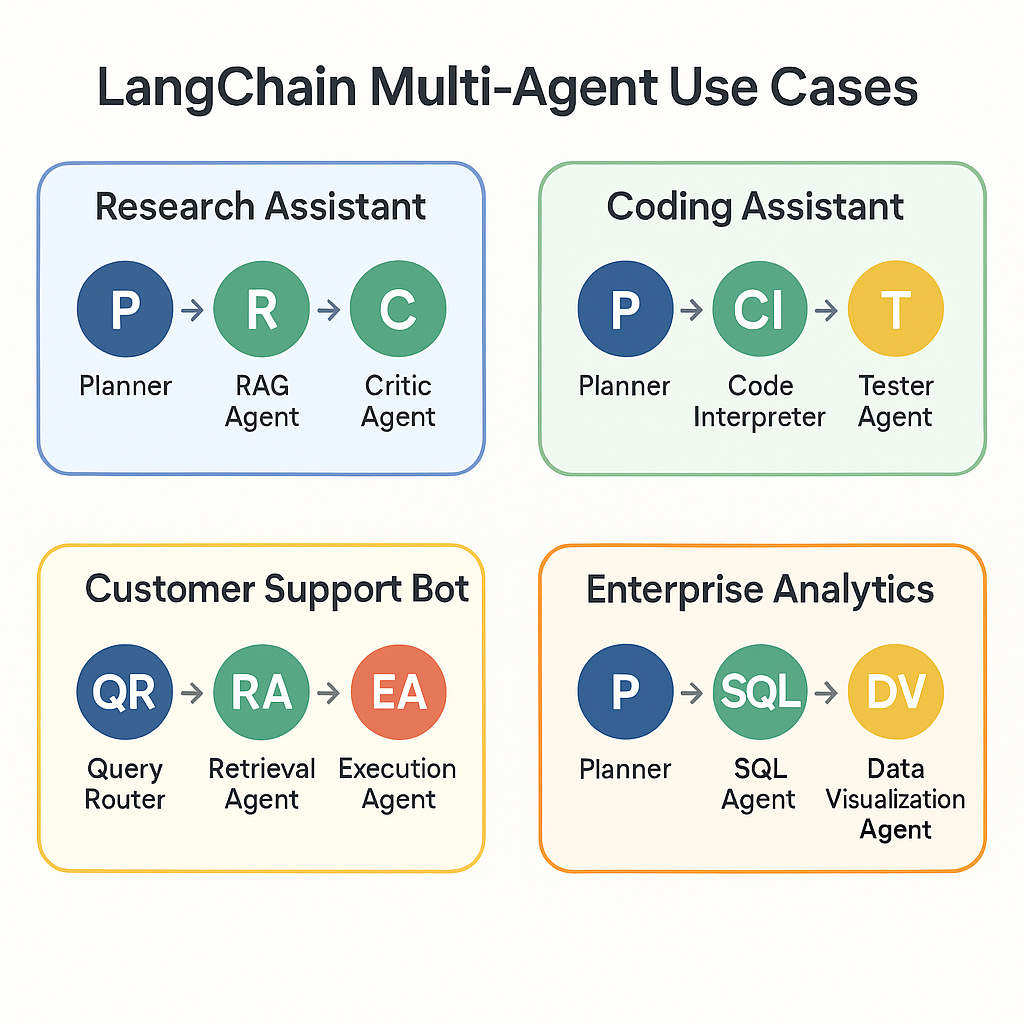

Agentic AI with LangChain: Use Cases

LangChain empowers developers to build advanced AI systems that go well beyond traditional single-agent chatbots. These agentic systems involve multiple specialized agents working together to complete complex tasks through planning, execution, and evaluation.

Here are some illustrative use cases:

- Research Assistants: This setup typically includes a Planner that outlines the research workflow, a RAG (Retrieval-Augmented Generation) Agent that fetches relevant information from knowledge sources, and a Critic Agent that evaluates the accuracy and quality of the findings.

- Coding Assistants: In this scenario, a Planner breaks down a coding request into sub-tasks. A Code Interpreter writes and tests the code, while a Tester Agent validates the output and suggests improvements.

- Customer Support Bots: These bots begin with a Query Router that identifies the user's intent and routes the query to the appropriate Retrieval Agent. If the issue is too complex, an Escalation Agent steps in to involve a human representative or apply advanced logic.

- Enterprise Analytics: A Planner defines analytical goals, a SQL Agent generates database queries, and a Data Visualization Agent converts results into user-friendly charts or dashboards.

Each of these examples demonstrates how LangChain facilitates real-time collaboration between agents. By distributing responsibilities and allowing each agent to specialize, LangChain supports intelligent systems capable of dynamic decision-making, iterative feedback loops, and task automation that adapts to context, all crucial for building reliable, scalable AI solutions.
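As one concrete example, the customer support flow can be reduced to three small functions. This is a toy sketch under stated assumptions: the keyword-based `route_query`, the in-memory `KNOWLEDGE_BASE`, and the `retrieval_agent` and `escalation_agent` functions are hypothetical stand-ins for LLM-backed agents and a real knowledge source.

```python
from typing import Optional

# Hypothetical in-memory knowledge base keyed by intent.
KNOWLEDGE_BASE = {
    "billing": "Invoices are issued on the first of each month.",
    "shipping": "Orders ship within two business days.",
}


def route_query(query: str) -> Optional[str]:
    """Query Router: pick an intent by keyword match, or None if nothing fits."""
    lowered = query.lower()
    for intent in KNOWLEDGE_BASE:
        if intent in lowered:
            return intent
    return None


def retrieval_agent(intent: str) -> str:
    """Retrieval Agent: look up the answer for a known intent."""
    return KNOWLEDGE_BASE[intent]


def escalation_agent(query: str) -> str:
    """Escalation Agent: hand off anything the router cannot classify."""
    return f"Escalated to a human representative: {query}"


def handle(query: str) -> str:
    intent = route_query(query)
    if intent is None:
        return escalation_agent(query)
    return retrieval_agent(intent)
```

For instance, `handle("I have a question about billing")` is answered from the knowledge base, while a query with no recognized intent goes to the escalation path.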

LangChain Tools for Multi-Agent AI

In 2025, LangChain's tooling is its biggest asset for developers:

- LangGraph: Graph-based orchestration and memory sharing.

- LangServe: Expose agents and tools as RESTful APIs.

- ChromaDB & Pinecone: Vector DBs for memory persistence.

- EasyOCR & OCR Tools: For image-based data processing.

- Vertex AI & OpenAI: For embedding and LLMs.

These tools are critical in enabling real-time coordination, RAG pipelines, and evaluation feedback loops within multi-agent systems.

LangChain OpenAI Agent Integration

LangChain’s support for OpenAI agent integration has deepened in 2025. Developers can now:

- Use OpenAI Assistants with LangChain tool wrappers

- Send messages between LangChain and OpenAI Agents

- Share memory contexts across frameworks

- Hybridize workflows (e.g., Planner in OpenAI, Executor in LangChain)

This interoperability enables teams to combine the strengths of different ecosystems without vendor lock-in.

RAG vs Multi-Agent Workflows

While Retrieval-Augmented Generation (RAG) remains a strong paradigm for factual Q&A and document-based reasoning, it is limited in task orchestration. The choice between RAG and multi-agent workflows boils down to:

- RAG is ideal for one-step knowledge injection.

- Multi-agent systems are suited for multi-step reasoning, decision trees, and tool use.

LangChain allows hybrid designs where a RAG agent feeds data into a planner, which then routes tasks to various executor agents.
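A hybrid design of this kind might look like the following sketch, where retrieval runs first and its output informs the plan. Everything here is a simplified assumption: the word-overlap retrieval stands in for a vector search, and `rag_agent`, `planner`, `EXECUTORS`, and `answer` are hypothetical names rather than LangChain APIs.

```python
from typing import Callable, Dict, List

DOCUMENTS = [
    "Quarterly revenue grew in the EMEA region.",
    "The support backlog doubled in March.",
]


def rag_agent(question: str, top_k: int = 1) -> List[str]:
    # Toy retrieval: rank documents by shared lowercase words.
    # A real system would use embeddings and a vector database.
    q_words = set(question.lower().split())

    def overlap(doc: str) -> int:
        return len(q_words & set(doc.lower().rstrip(".").split()))

    return sorted(DOCUMENTS, key=overlap, reverse=True)[:top_k]


def planner(context: List[str]) -> List[str]:
    # The plan depends on what retrieval found, not only on the question.
    steps = ["summarize"]
    if any("revenue" in doc.lower() for doc in context):
        steps.append("chart")
    return steps


EXECUTORS: Dict[str, Callable[[List[str]], str]] = {
    "summarize": lambda ctx: f"Summary of {len(ctx)} document(s)",
    "chart": lambda ctx: "Chart spec for revenue data",
}


def answer(question: str) -> List[str]:
    context = rag_agent(question)
    return [EXECUTORS[step](context) for step in planner(context)]
```

The point of the design is that retrieval is a single step inside a larger loop: the planner can add or skip executor steps based on the retrieved context.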

The LangChain Ecosystem in 2025

As we move into 2025, LangChain has solidified itself as the go-to framework for building sophisticated, autonomous multi-agent systems. With enhanced orchestration features, advanced integration capabilities, and a growing ecosystem, LangChain provides developers with the infrastructure they need to build scalable, intelligent systems that go beyond traditional AI.

By empowering developers to create AI that collaborates, adapts, and optimizes in real-time, LangChain positions itself at the forefront of AI’s next great leap.

If your organization is exploring how to implement LangChain, LLM agents, or AI transformation at scale, explore our offerings at InfoServices—a trusted partner for digital transformation, AI solutions, and enterprise cloud services.

FAQs

1. What is LangChain used for in AI?

LangChain is a framework for building modular, tool-using, and memory-augmented AI agents, especially in multi-agent systems for complex workflows.

2. How do multi-agent systems work in LangChain?

LangChain enables agents like planners, executors, communicators, and evaluators to collaborate via an orchestration layer, allowing dynamic task handling and fault recovery.

3. What is the difference between LangChain and AutoGen?

LangChain emphasizes modular orchestration, tool integration, and LLM agnosticism, while AutoGen is more script-based and closely tied to OpenAI’s APIs.

4. Can LangChain integrate with OpenAI agents?

Yes, LangChain supports OpenAI Assistant integration, enabling shared memory, hybrid workflows, and tool use across both ecosystems.

5. Is LangChain good for production-grade AI systems?

Yes, LangChain is widely adopted in 2025 for building scalable, autonomous AI systems used in research, customer support, and analytics.