MCP vs API is a False Choice — Here's Why You Need Both

In the rapidly evolving AI landscape, there's a growing debate pitting traditional APIs against the new Model Context Protocol (MCP). Social media discussions often frame MCP and traditional API integration as competing approaches. But this framing misunderstands the purpose and design of MCP. It's not APIs versus MCP; it's APIs and MCP working together to give AI systems the context they need.

Understanding the False Dichotomy in AI Context Handling

This comparison emerged as developers who had been connecting large language models to external systems through APIs encountered MCP as an alternative approach. Articles suggesting MCP could replace traditional APIs in AI agent systems aren't just wrong; they misunderstand how MCP actually works.

It's like claiming highways will kill cars, when highways actually give cars somewhere to drive.

Model Context Protocol is not a replacement for APIs. It's a consumer of APIs, designed specifically for interaction among AI models, humans, and tools, and for supplying AI applications with persistent context.

If you're new to MCP, check out our foundational guide:

Model Context Protocol: Core of Next-Gen AI Transformation

What is the Model Context Protocol (MCP)?

MCP is an open protocol that standardizes how applications provide context to Large Language Models (LLMs). Think of MCP like a USB-C port for AI applications—a standardized way to connect AI models to different data sources and tools for improved AI context management.

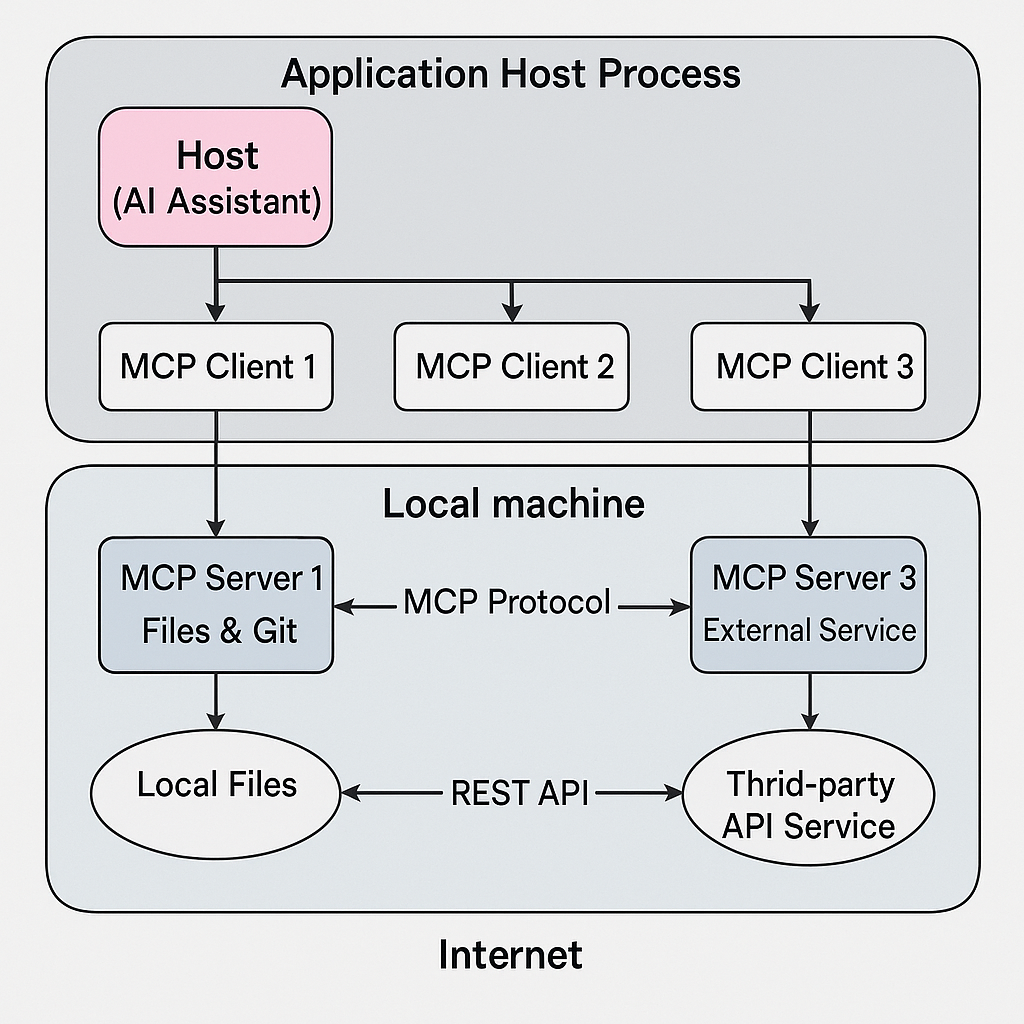

At its core, MCP follows a client-server architecture in which AI applications (hosts) can connect to multiple servers, as the diagram above illustrates.

This diagram reveals the core insight: MCP is built to consume APIs, not replace them. The host (an AI application such as an assistant) runs MCP clients, each maintaining a connection to one server, and those servers often reach their underlying resources through APIs. This architecture is the foundation for deciding when MCP adds value on top of APIs in AI workflows.
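To make the layering concrete, here is a minimal TypeScript sketch of the chain from host to client to server to API. It does not use a real MCP SDK: the class names (`SketchHost`, `SketchClient`, `SketchServer`), the `callWeatherApi` function, and the direct method calls standing in for a transport are all hypothetical, and the API is stubbed so the example runs offline. The only point is the direction of the dependencies: the server sits on top of an API, and the host never calls the API itself.

```typescript
// Hypothetical upstream REST API, stubbed so the sketch runs offline.
// In a real MCP server this would be an HTTP request to the API.
function callWeatherApi(city: string): { city: string; tempC: number } {
  return { city, tempC: 21 };
}

type ToolHandler = (args: Record<string, unknown>) => string;

// MCP server (hypothetical class): exposes tools whose handlers consume an API.
class SketchServer {
  private tools = new Map<string, ToolHandler>();

  register(toolName: string, handler: ToolHandler): void {
    this.tools.set(toolName, handler);
  }

  listTools(): string[] {
    return Array.from(this.tools.keys());
  }

  callTool(toolName: string, args: Record<string, unknown>): string {
    const handler = this.tools.get(toolName);
    if (!handler) throw new Error(`Unknown tool: ${toolName}`);
    return handler(args);
  }
}

// MCP client: lives inside the host and talks to exactly one server.
class SketchClient {
  private server: SketchServer;

  constructor(server: SketchServer) {
    this.server = server; // stands in for a stdio or HTTP transport
  }

  listTools(): string[] {
    return this.server.listTools();
  }

  callTool(toolName: string, args: Record<string, unknown>): string {
    return this.server.callTool(toolName, args);
  }
}

// Host: the AI application; it owns one client per connected server.
class SketchHost {
  private clients: SketchClient[] = [];

  connect(server: SketchServer): void {
    this.clients.push(new SketchClient(server));
  }

  availableTools(): string[] {
    const names: string[] = [];
    for (const client of this.clients) names.push(...client.listTools());
    return names;
  }

  invoke(toolName: string, args: Record<string, unknown>): string {
    const client = this.clients.find((c) => c.listTools().indexOf(toolName) !== -1);
    if (!client) throw new Error(`No connected server offers ${toolName}`);
    return client.callTool(toolName, args);
  }
}

const weatherServer = new SketchServer();
weatherServer.register("get_forecast", (args) => {
  const data = callWeatherApi(String(args.city)); // the server consumes the API
  return `${data.city}: ${data.tempC}°C`;
});

const host = new SketchHost();
host.connect(weatherServer);
const tools = host.availableTools(); // ["get_forecast"]
const answer = host.invoke("get_forecast", { city: "Oslo" }); // "Oslo: 21°C"
```

Adding a second API means registering another server with the host; nothing about the existing API changes.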

The Key Components of MCP

MCP defines several core primitives that let LLM applications interact with tools and context:

- Resources: File-like data that can be read by clients (e.g., API responses, file contents, database records)

- Tools: Functions that can be called by the LLM (with user approval) to perform actions

- Prompts: Pre-written templates that help users accomplish specific tasks

- Sampling: Capability for servers to request completions from LLMs
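Under the hood, MCP messages are JSON-RPC 2.0, and each primitive maps to a small set of request methods. The method names below (`resources/read`, `tools/call`, `prompts/get`, `sampling/createMessage`) come from the MCP specification; the `makeRequest` helper, the prompt name, and the argument values are illustrative assumptions. Note the direction: the first three are sent by a client to a server, while sampling requests flow from the server back to the client.

```typescript
// Minimal JSON-RPC 2.0 request envelope, as used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Simplification: one shared counter; in practice each side numbers its own requests.
let nextId = 1;

// Hypothetical helper that wraps a method and its params in an envelope.
function makeRequest(method: string, params: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method, params };
}

// Resources: the client asks a server to read data identified by a URI.
const readResource = makeRequest("resources/read", { uri: "file:///logs/app.log" });

// Tools: the client asks a server to run a function the model selected.
const callTool = makeRequest("tools/call", {
  name: "search_database",
  arguments: { query: "tent", category: "outdoors" },
});

// Prompts: the client fetches a server-defined template, filled with arguments.
const getPrompt = makeRequest("prompts/get", {
  name: "summarize_logs", // hypothetical prompt name
  arguments: { since: "yesterday" },
});

// Sampling: the server asks the client (and its LLM) for a completion.
const createMessage = makeRequest("sampling/createMessage", {
  messages: [{ role: "user", content: { type: "text", text: "Classify this log line" } }],
  maxTokens: 100,
});

// What actually travels over the transport: serialized JSON text.
const wireMessages = [readResource, callTool, getPrompt, createMessage].map((m) => JSON.stringify(m));
```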

MCP vs APIs: A Comparative Analysis

Let's examine the key differences between Model Context Protocol and traditional API integration to understand why they complement rather than compete with each other:

| Aspect | Traditional APIs | Model Context Protocol |
|---|---|---|
| Primary Purpose | Machine-to-machine communication | AI-to-tool communication |
| Interface Nature | Fixed, predefined endpoints | Self-describing tools with semantic explanations |
| Context Handling | Generally stateless, limited context | Stateful sessions designed to supply context to the model |
| Adaptability | Changes often break client compatibility | Clients can rediscover tools at runtime, so many changes need no client update |
| Interaction Model | Requires explicit programming for each interaction | The model reads natural-language tool descriptions and decides when to call them |

This comparison helps explain the benefits of MCP over calling APIs directly from LLM applications, particularly for context management.

It's APIs All the Way Down

The relationship between MCP and APIs is symbiotic, not adversarial. MCP servers are essentially specialized API clients with a standardized interface: they don't replace APIs, they consume them at scale. Keep this in mind when evaluating whether MCP is overkill for an AI application that already uses RESTful APIs.

Some platforms are generating MCP servers directly from OpenAPI definitions. This approach transforms existing API descriptions into MCP-compatible interfaces for AI assistants, making APIs more valuable, not less.
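Generation from OpenAPI works because an OpenAPI operation already carries most of what an MCP tool definition needs: an identifier, a human-readable summary, and typed parameters. The sketch below is a simplified, hypothetical converter, not any particular platform's generator. It handles only query and path parameters with inline schemas, ignores request bodies and `$ref`s, and names the tool after the `operationId`.

```typescript
// Simplified subset of an OpenAPI 3 operation object.
interface OpenApiParameter {
  name: string;
  in: "query" | "path" | "header" | "cookie";
  description?: string;
  required?: boolean;
  schema: Record<string, unknown>;
}

interface OpenApiOperation {
  operationId: string;
  summary?: string;
  description?: string;
  parameters?: OpenApiParameter[];
}

interface McpToolDefinition {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, Record<string, unknown>>;
    required: string[];
  };
}

// Hypothetical converter: one OpenAPI operation becomes one MCP tool.
function operationToTool(op: OpenApiOperation): McpToolDefinition {
  const properties: Record<string, Record<string, unknown>> = {};
  const required: string[] = [];

  for (const p of op.parameters ?? []) {
    // Headers and cookies (often credentials) stay server-side, hidden from the model.
    if (p.in !== "query" && p.in !== "path") continue;
    const prop: Record<string, unknown> = { ...p.schema };
    if (p.description) prop.description = p.description;
    properties[p.name] = prop;
    // OpenAPI path parameters are always required.
    if (p.required || p.in === "path") required.push(p.name);
  }

  return {
    name: op.operationId,
    description: op.summary ?? op.description ?? op.operationId,
    inputSchema: { type: "object", properties, required },
  };
}

const searchOperation: OpenApiOperation = {
  operationId: "search_database",
  summary: "Search the product database for items matching specific criteria",
  parameters: [
    { name: "query", in: "query", required: true, schema: { type: "string" }, description: "Search terms" },
    { name: "max_results", in: "query", schema: { type: "integer" }, description: "Result limit" },
    { name: "X-Api-Key", in: "header", required: true, schema: { type: "string" } },
  ],
};

const generatedTool = operationToTool(searchOperation);
```

Keeping the API key out of the generated schema is deliberate: the server injects credentials when it calls the API, so the model never sees them.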

The Power of MCP: Beyond Simple API Wrapping

Now that we've established the difference between MCP and APIs for AI context handling, let's explore what makes Model Context Protocol particularly powerful for AI applications.

1. Self-Describing Interfaces

MCP tools come with rich, semantic descriptions of what they do and how they work:

```typescript
// Example MCP tool definition, written as a TypeScript object literal.
// Servers return objects of this shape (as JSON) in response to tools/list.
const searchDatabaseTool = {
  name: "search_database",
  description: "Search the product database for items matching specific criteria",
  inputSchema: {
    type: "object",
    properties: {
      query: {
        type: "string",
        description: "Search terms or keywords to find relevant products",
      },
      category: {
        type: "string",
        description: "Product category to filter results (optional)",
        enum: ["electronics", "clothing", "home", "outdoors"],
      },
      max_results: {
        type: "integer",
        description: "Maximum number of results to return (default: 10)",
        default: 10,
      },
    },
    required: ["query"], // only "query" is mandatory
  },
  annotations: {
    readOnlyHint: true, // searching does not modify the database
    openWorldHint: false, // operates on a closed, known data set
  },
};
```

This rich description allows AI models to:

- Understand what the tool does

- Know which parameters are required vs. optional

- Comprehend parameter meanings and constraints

- Make informed decisions about when to use the tool

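This self-describing nature is a key advantage over hand-wired API calls: the model learns how to use a tool from the tool's own definition rather than from integration code written in advance.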
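Because the schema travels with the tool, a client or server can check a model's proposed arguments before anything reaches the underlying API. This is a deliberately tiny, hypothetical validator covering only the JSON Schema features used in the `search_database` example (`required`, `type` for strings and integers, and `enum`); a production system would use a full JSON Schema validator. The schema is restated so the snippet stands alone.

```typescript
interface PropertySchema {
  type: "string" | "integer";
  enum?: string[];
}

interface ObjectSchema {
  properties: Record<string, PropertySchema>;
  required?: string[];
}

// Returns human-readable problems; an empty list means the arguments look valid.
function validateArgs(schema: ObjectSchema, args: Record<string, unknown>): string[] {
  const problems: string[] = [];

  for (const key of schema.required ?? []) {
    if (!(key in args)) problems.push(`missing required parameter "${key}"`);
  }

  for (const [key, value] of Object.entries(args)) {
    const prop = schema.properties[key];
    if (!prop) {
      // Stricter than JSON Schema's default, which allows extra properties.
      problems.push(`unknown parameter "${key}"`);
      continue;
    }
    if (prop.type === "string" && typeof value !== "string") {
      problems.push(`"${key}" must be a string`);
    } else if (prop.type === "integer" && (typeof value !== "number" || !Number.isInteger(value))) {
      problems.push(`"${key}" must be an integer`);
    } else if (prop.enum && prop.enum.indexOf(String(value)) === -1) {
      problems.push(`"${key}" must be one of: ${prop.enum.join(", ")}`);
    }
  }
  return problems;
}

const searchSchema: ObjectSchema = {
  properties: {
    query: { type: "string" },
    category: { type: "string", enum: ["electronics", "clothing", "home", "outdoors"] },
    max_results: { type: "integer" },
  },
  required: ["query"],
};

const okResult = validateArgs(searchSchema, { query: "tent", category: "outdoors" }); // []
const badResult = validateArgs(searchSchema, { category: "garden", max_results: 2.5 }); // 3 problems
```

Returning every problem at once, rather than throwing on the first, lets the client hand the full list back to the model so it can fix all of them in one retry.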

2. Dynamic Discovery and Adaptation

MCP clients can dynamically discover available tools and resources at runtime, allowing for:

- Automatic adaptation to server changes

- Contextual tool availability

- Progressive enhancement as new capabilities are added

- Resilience against interface changes

These features explain why the choice between MCP and a direct API integration for a scalable AI system often comes down to how much adaptability the system needs.
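Here is one way a client might implement runtime rediscovery. The MCP specification defines a `tools/list` request and lets servers that declare the `listChanged` capability send a `notifications/tools/list_changed` notification when their tool set changes. Everything else below (the `FakeServer` class, its synchronous `handle` method, the tool names) is a hypothetical stand-in for a real transport, kept synchronous so the example runs as-is.

```typescript
interface ToolInfo {
  name: string;
  description: string;
}

// Hypothetical in-memory server; a real one would speak JSON-RPC over stdio or HTTP.
class FakeServer {
  private tools: ToolInfo[] = [{ name: "search_database", description: "Search products" }];
  private listeners: Array<(method: string) => void> = [];

  onNotification(listener: (method: string) => void): void {
    this.listeners.push(listener);
  }

  // Answers tools/list; real responses wrap the array as { tools, nextCursor }.
  handle(method: string): ToolInfo[] {
    if (method !== "tools/list") throw new Error(`unsupported method ${method}`);
    return this.tools.slice();
  }

  // Simulates a deployment that adds a tool, then notifies connected clients.
  addTool(tool: ToolInfo): void {
    this.tools.push(tool);
    for (const listener of this.listeners) listener("notifications/tools/list_changed");
  }
}

class DiscoveringClient {
  private cache: ToolInfo[] = [];
  private server: FakeServer;

  constructor(server: FakeServer) {
    this.server = server;
    this.refresh(); // discover tools once at startup
    // Re-discover whenever the server reports that its tool list changed.
    server.onNotification((method) => {
      if (method === "notifications/tools/list_changed") this.refresh();
    });
  }

  private refresh(): void {
    this.cache = this.server.handle("tools/list");
  }

  toolNames(): string[] {
    return this.cache.map((t) => t.name);
  }
}

const fakeServer = new FakeServer();
const discoveringClient = new DiscoveringClient(fakeServer);
const toolsBefore = discoveringClient.toolNames(); // ["search_database"]
fakeServer.addTool({ name: "check_inventory", description: "Check stock levels" });
const toolsAfter = discoveringClient.toolNames(); // ["search_database", "check_inventory"]
```

The client never hard-codes the tool list, so the new capability becomes available without a client release.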

3. Resource and Context Management

MCP provides sophisticated mechanisms for working with resources:

- Resource URIs (e.g., file:///logs/app.log, postgres://database/customers/schema)

- Resource templates for dynamic content

- Subscription to resource updates

- Context-sensitive resource exposure

These capabilities address a common question: does MCP simplify context management compared with calling APIs directly? For many AI applications that need persistent, up-to-date context, the answer is yes.
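Resource templates let a server advertise a family of URIs instead of a fixed list. MCP expresses them as RFC 6570 URI templates (the `uriTemplate` field returned by `resources/templates/list`). The sketch below implements only the simplest form, `{name}` substitution with percent-encoding, plus the reverse match a server might use to route an incoming `resources/read`. The `postgres://database/{table}/schema` template is a hypothetical generalization of the example URI above.

```typescript
// Expand simple {var} expressions (RFC 6570 level 1 only; operators like {+var} are not handled).
function expandTemplate(template: string, vars: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (_match: string, key: string) => {
    const value = vars[key];
    if (value === undefined) throw new Error(`missing template variable "${key}"`);
    return encodeURIComponent(value);
  });
}

// Reverse direction: extract variables from a concrete URI, or return null if it doesn't match.
function matchTemplate(template: string, uri: string): Record<string, string> | null {
  const names: string[] = [];
  // Escape regex metacharacters in literal parts; turn each {var} into a capture group.
  const pattern = template
    .split(/(\{\w+\})/)
    .map((part) => {
      const m = /^\{(\w+)\}$/.exec(part);
      if (m) {
        names.push(m[1]);
        return "([^/]+)";
      }
      return part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
    })
    .join("");
  const result = new RegExp(`^${pattern}$`).exec(uri);
  if (!result) return null;
  const vars: Record<string, string> = {};
  names.forEach((n, i) => {
    vars[n] = decodeURIComponent(result[i + 1]);
  });
  return vars;
}

const schemaTemplate = "postgres://database/{table}/schema";
const customersUri = expandTemplate(schemaTemplate, { table: "customers" });
// customersUri === "postgres://database/customers/schema"
const matched = matchTemplate(schemaTemplate, "postgres://database/orders/schema");
// matched is { table: "orders" }
```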

4. Tool Annotations for Better AI Understanding

Tool annotations provide metadata about a tool's behavior:

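```json
{
  "annotations": {
    "title": "Delete File",
    "readOnlyHint": false,
    "destructiveHint": true,
    "idempotentHint": true,
    "openWorldHint": false
  }
}
```

These hints help AI models understand a tool's potential impact and help clients present appropriate interfaces, such as a confirmation step before a destructive call. They are hints, not guarantees: the MCP specification advises clients to treat annotations as untrusted unless they come from a trusted server.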
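A client can turn these hints into UI behavior. The sketch below fills in missing annotations with the defaults given in the MCP specification (`readOnlyHint` false, `destructiveHint` true, `idempotentHint` false, `openWorldHint` true) and then decides whether to ask the user before a call. The policy itself is an illustrative assumption, not something the specification mandates: always confirm for untrusted servers, never for read-only tools, and otherwise confirm anything destructive or open-world.

```typescript
interface ToolAnnotations {
  title?: string;
  readOnlyHint?: boolean;
  destructiveHint?: boolean;
  idempotentHint?: boolean;
  openWorldHint?: boolean;
}

// Fill in missing hints with the specification's (cautious) defaults.
function withDefaults(a: ToolAnnotations = {}): Required<Omit<ToolAnnotations, "title">> {
  return {
    readOnlyHint: a.readOnlyHint ?? false,
    destructiveHint: a.destructiveHint ?? true,
    idempotentHint: a.idempotentHint ?? false,
    openWorldHint: a.openWorldHint ?? true,
  };
}

// Illustrative client policy; hints from untrusted servers are ignored entirely.
function needsConfirmation(annotations: ToolAnnotations | undefined, serverTrusted: boolean): boolean {
  if (!serverTrusted) return true;
  const hints = withDefaults(annotations);
  if (hints.readOnlyHint) return false; // the tool claims not to modify anything
  return hints.destructiveHint || hints.openWorldHint;
}

const deleteFileHints: ToolAnnotations = {
  title: "Delete File",
  readOnlyHint: false,
  destructiveHint: true,
  idempotentHint: true,
  openWorldHint: false,
};
const searchToolHints: ToolAnnotations = { readOnlyHint: true, openWorldHint: false };

const confirmDelete = needsConfirmation(deleteFileHints, true); // true
const confirmSearch = needsConfirmation(searchToolHints, true); // false
```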

Emerging Patterns for Model Context Protocol Implementation

As the MCP ecosystem matures, two primary patterns are emerging for how MCP servers interact with APIs:

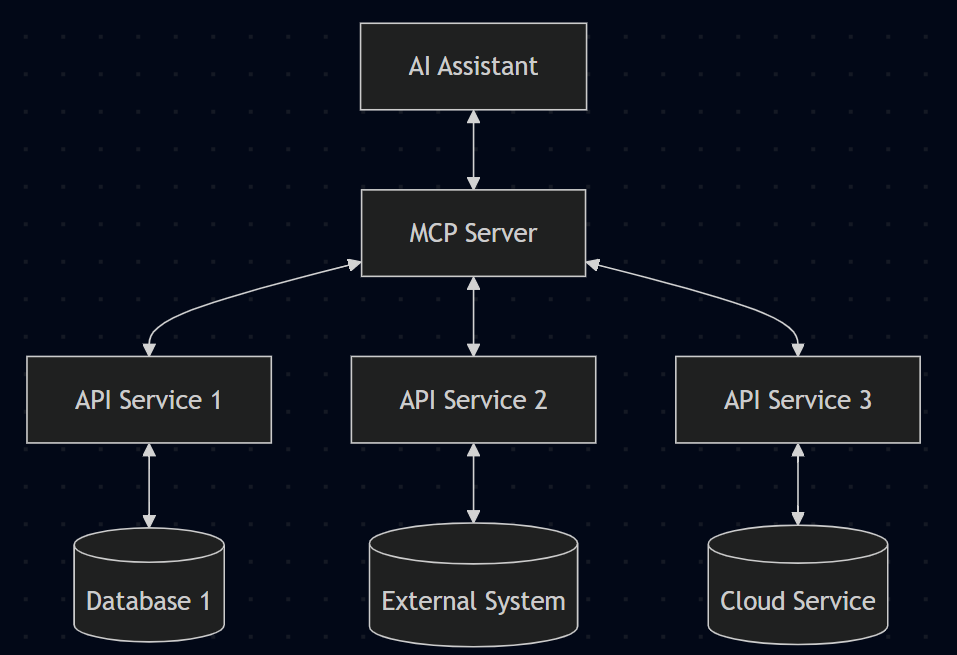

1. Aggregator Pattern

In this pattern, a single MCP server integrates with multiple APIs, acting as a centralized access point. This approach:

- Simplifies management of multiple services through a single integration point

- Provides consistent interface patterns across varied API implementations

- Reduces duplication of authentication logic and credential management

- Enables cross-service orchestration and complex workflows

- Abstracts away differences in API styles, versions, and error handling
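A minimal sketch of the aggregator idea: one server fronts several APIs, prefixes each tool with its service name so names cannot collide, keeps credentials in one place, and normalizes upstream errors. The services, tool names, and `ApiClient` shape are hypothetical, and the API calls are stubbed; a real server would make authenticated HTTP requests instead.

```typescript
// Hypothetical thin client for one upstream API: operation name -> call.
type Operation = (args: Record<string, unknown>, token: string) => unknown;

interface ApiClient {
  operations: Record<string, Operation>;
}

interface Route {
  service: string;
  client: ApiClient;
  op: string;
}

class AggregatorServer {
  private routes = new Map<string, Route>();
  private credentials: Record<string, string>;

  constructor(credentials: Record<string, string>) {
    this.credentials = credentials; // single place to manage secrets for all services
  }

  // Expose every operation of an API as a tool named "<service>_<operation>".
  mount(service: string, client: ApiClient): void {
    for (const op of Object.keys(client.operations)) {
      this.routes.set(`${service}_${op}`, { service, client, op });
    }
  }

  listTools(): string[] {
    return Array.from(this.routes.keys()).sort();
  }

  callTool(toolName: string, args: Record<string, unknown>): unknown {
    const route = this.routes.get(toolName);
    if (!route) throw new Error(`Unknown tool: ${toolName}`);
    const token = this.credentials[route.service];
    if (!token) throw new Error(`No credentials configured for ${route.service}`);
    try {
      return route.client.operations[route.op](args, token);
    } catch (err) {
      // Normalize upstream failures so every API reports errors the same way.
      const detail = err instanceof Error ? err.message : String(err);
      throw new Error(`${route.service} call failed: ${detail}`);
    }
  }
}

// Stubbed upstream APIs standing in for, say, a CRM and a ticketing system.
const crmApi: ApiClient = {
  operations: {
    find_customer: (args) => ({ id: 42, email: String(args.email) }),
  },
};
const ticketApi: ApiClient = {
  operations: {
    open_ticket: (args) => ({ ticket: "T-1", summary: String(args.summary) }),
  },
};

const aggregator = new AggregatorServer({ crm: "crm-secret", tickets: "tickets-secret" });
aggregator.mount("crm", crmApi);
aggregator.mount("tickets", ticketApi);
```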

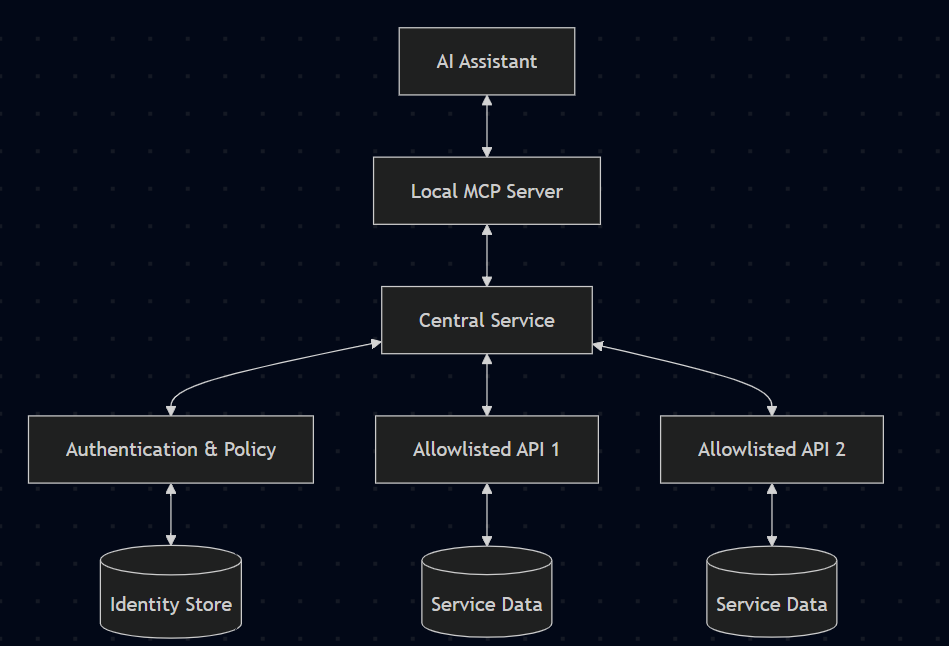

2. Client Facilitator Pattern

Here, local MCP servers connect to centralized services that manage access to allow-listed APIs. This pattern:

- Enhances security through centralized policy enforcement and unified authentication

- Enables remote management of available services without client updates

- Provides comprehensive usage analytics and governance across the organization

- Facilitates enterprise-wide standardization and compliance

- Creates a controlled environment for sensitive operations

- Allows for granular permission management while maintaining flexibility
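In the client facilitator pattern, the local server does not decide on its own what it may call; it asks a central service. The sketch below models that service as a hypothetical in-process `PolicyService` with a per-user allow-list and an audit log. In practice this check would be a remote call, and the user IDs and API names here are made up.

```typescript
interface PolicyDecision {
  allowed: boolean;
  reason: string;
}

// Hypothetical central service: owns the allow-list and records every decision.
class PolicyService {
  private allowList: Record<string, string[]>;
  readonly auditLog: string[] = [];

  constructor(allowList: Record<string, string[]>) {
    this.allowList = allowList;
  }

  // Changing the allow-list here affects every client immediately, with no client update.
  setAllowList(user: string, apis: string[]): void {
    this.allowList[user] = apis;
  }

  check(user: string, api: string): PolicyDecision {
    const allowed = (this.allowList[user] ?? []).indexOf(api) !== -1;
    const reason = allowed ? "allow-listed" : "not on allow-list";
    this.auditLog.push(`${user} -> ${api}: ${reason}`);
    return { allowed, reason };
  }
}

// Local MCP server: forwards an API call only if the central policy allows it.
class FacilitatedLocalServer {
  private policy: PolicyService;
  private user: string;

  constructor(policy: PolicyService, user: string) {
    this.policy = policy;
    this.user = user;
  }

  callApi(api: string, invoke: () => string): string {
    const decision = this.policy.check(this.user, api);
    if (!decision.allowed) throw new Error(`Blocked: ${api} (${decision.reason})`);
    return invoke();
  }
}

const policyService = new PolicyService({ alice: ["github"] });
const localServer = new FacilitatedLocalServer(policyService, "alice");
```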

Understanding these patterns helps clarify when MCP is not necessary in AI architectures versus when it provides significant advantages.

The MCP Ecosystem

The MCP ecosystem includes both clients that connect to MCP servers and servers that provide various capabilities. Desktop applications, development environments, and AI frameworks make up the client side, while servers cover everything from file systems and databases to development tools and productivity applications. This ecosystem continues to grow as more developers discover how MCP enhances AI context management.

Security Considerations in MCP AI

Security is a critical aspect of Model Context Protocol implementation. The protocol addresses several security concerns:

- Human-in-the-loop Approval: Most AI hosts require explicit user approval for tool usage

- Transport Security: Support for secure communication channels

- Tool Annotations: Hints about tool behavior and potential impacts

- Resource Access Control: Granular permissions for resource access

The MCP community continuously improves the specification with enhanced authentication mechanisms and service discovery protocols.
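Resource access control can start with refusing URIs outside approved locations. MCP has a related notion of "roots", URI boundaries a client shares with a server; the function below is a hypothetical server-side guard in that spirit, not part of any SDK. It relies on the WHATWG `URL` parser (a global in Node.js and browsers) to resolve `..` segments before comparing paths, so a traversal attempt cannot slip past a naive prefix check.

```typescript
function parseUri(uri: string): URL | null {
  try {
    return new URL(uri);
  } catch {
    return null; // not a valid absolute URI
  }
}

// Hypothetical guard: allow only file:// URIs that resolve inside an approved root.
function isWithinRoots(requestedUri: string, roots: string[]): boolean {
  const requested = parseUri(requestedUri);
  if (!requested || requested.protocol !== "file:") return false;
  const requestedPath = requested.pathname; // ".." segments already resolved by the parser

  return roots.some((rootUri) => {
    const root = parseUri(rootUri);
    if (!root) return false;
    // Append "/" so that "/logs" does not also match "/logs-private".
    const rootDir = root.pathname.endsWith("/") ? root.pathname : `${root.pathname}/`;
    return requestedPath === root.pathname || requestedPath.startsWith(rootDir);
  });
}

const approvedRoots = ["file:///logs", "file:///workspace/project"];
const canReadLog = isWithinRoots("file:///logs/app.log", approvedRoots); // true
const canEscape = isWithinRoots("file:///logs/../etc/passwd", approvedRoots); // false
```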

Designing for an AI-First Future

Organizations can prepare for AI advancements by designing AI-friendly APIs:

- Semantic Descriptions: Create rich documentation that explains not just what APIs do, but why and when to use them

- Consistent Patterns: Develop predictable interfaces that follow established conventions

- Clear Parameter Descriptions: Provide detailed explanations of parameter meanings and constraints

- Resource-Oriented Design: Design APIs that map naturally to business domains and resources

- Error Handling Clarity: Implement clear error messages that explain what went wrong and how to fix it

These practices make APIs more valuable in an MCP world and enable smoother integration. They also bear on a common question: do developers need MCP if they already use LangChain or RAG APIs? MCP can complement these technologies by providing a standardized way for AI models to access context.
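Clear API errors pay off directly once an MCP server sits in front of the API. The MCP specification lets a tool report failure inside its result (`isError: true` with text content) rather than as a protocol-level error, so the model can read the message and retry with corrected arguments. The sketch below assumes a hypothetical upstream error body with `message` and `hint` fields and shows a server translating it into such a result.

```typescript
// Hypothetical error body returned by a well-designed upstream API.
interface ApiErrorBody {
  status: number;
  message: string; // what went wrong
  hint?: string; // how to fix it
}

// Shape of an MCP tool result, restricted to text content for brevity.
interface ToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

type ApiOutcome = { ok: true; data: unknown } | { ok: false; error: ApiErrorBody };

function toToolResult(outcome: ApiOutcome): ToolResult {
  if (outcome.ok) {
    return { content: [{ type: "text", text: JSON.stringify(outcome.data) }] };
  }
  const { status, message, hint } = outcome.error;
  // Pass the API's own explanation to the model so it can self-correct.
  const text = hint ? `API error ${status}: ${message}. Hint: ${hint}` : `API error ${status}: ${message}`;
  return { content: [{ type: "text", text }], isError: true };
}

const failure = toToolResult({
  ok: false,
  error: {
    status: 400,
    message: "category 'garden' is not supported",
    hint: "use one of electronics, clothing, home, outdoors",
  },
});
const success = toToolResult({ ok: true, data: { results: 3 } });
```

An API that only returned a bare `400 Bad Request` would leave the model guessing; the hint turns the failure into an actionable next step.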

Model Context Protocol or API: A Practical Decision Framework

When deciding between MCP and traditional APIs for your AI projects, consider:

- Use APIs when: You need deterministic machine-to-machine communication, maximum performance, or are building core backend services

- Use MCP when: You're building AI agents that need tool access, creating interfaces that will evolve frequently, or need AI to dynamically discover capabilities

- Use both when: You need the reliability of traditional APIs with the flexibility of MCP for AI interactions

This framework helps answer the question: "Is MCP overkill if your AI uses RESTful APIs?" The answer depends on your specific AI memory and context management needs.

Conclusion: The Symbiotic Relationship

The choice between Model Context Protocol vs API is a false dichotomy. They solve different problems and work best when used together. APIs excel at deterministic machine-to-machine communication, while MCP provides a flexible, self-describing interface for AI-human-tool interaction.

When someone claims MCP will replace traditional APIs in AI agent systems, you can confidently respond: "Actually, it's APIs all the way down." MCP strengthens the API ecosystem by providing a standardized way for AI to consume APIs.

As you build AI systems with persistent memory requirements, consider how MCP and APIs can complement each other in your architecture. The most powerful systems leverage traditional APIs for their core infrastructure while using MCP to create dynamic, adaptive AI experiences.

Ready to explore the next step? Our AI Enablement Services help you implement MCP and API ecosystems side-by-side to scale smarter.

In future posts, I'll dive deeper into building your own MCP servers and exploring how MCP enhances AI memory vs API-based approaches. Stay tuned!

Want to learn more about Model Context Protocol? Check out the official documentation or explore the growing ecosystem of MCP servers on the MCP website.