Designing a Social Network and Newsfeed System on AWS: A Comprehensive Guide

Modern social networks demand real-time updates, personalized content, and seamless scalability to serve millions of users. This blog explores the architecture of a social network and newsfeed system on AWS, leveraging managed services to balance performance, cost, and resilience.

Problem Statement

Designing a social network newsfeed involves addressing critical challenges:

- Real-Time Delivery: Reflect new posts in followers' feeds within seconds.

- Hybrid Feed Generation: Optimize for both normal users (1,000 followers) and celebrities (100M+ followers).

- Search and Discovery: Enable fast keyword/hashtag searches across billions of posts.

- Cost-Effective Scaling: Manage storage for roughly 315B annual posts (10K posts/sec sustained over a year) and 500M+ monthly active users.

- Global Availability: Serve low-latency media via CDNs and regional replication.

Key Design Choices

- Hybrid Feed Delivery:
  - Push Model for normal users (precompute timelines).
  - Pull Model for celebrity users (real-time retrieval).
- Graph Database: Store user relationships (e.g., follows, likes) for low-latency queries.
- Serverless Workflows: AWS Lambda and DynamoDB Streams for async post processing.
- Multi-Layer Caching: Redis for active user timelines, CloudFront for media.

System Requirements

Functional Requirements

- Publish posts (text, images, videos) with hashtags.

- Generate timelines from followed users/topics.

- Search posts by keyword, location, or hashtag.

- Support eventual consistency for likes/shares (2-minute lag acceptable).

Non-Functional Requirements

- Latency: <500ms for timeline retrieval, <2s for post creation.

- Scalability: 10K posts/sec, 100K timeline fetches/sec.

- Durability: At least 99.999% data durability for media; S3 is designed for 99.999999999% (11 nines) object durability, which comfortably exceeds this.

- Cost: Optimize storage for 29TB/year of new posts.

| Metric | Calculation | Result |
|---|---|---|
| Annual Posts Created | 10K posts/sec × 86,400 sec/day × 365 days | ~315B posts/year |
| Media Ingest (Images) | 800 images/sec × 2 MB/image | ~1.6 GB/sec (~5.76 TB/hour) |
| Active Timelines in Redis | 500M users × 30 posts/user | 15B cached post IDs |
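The arithmetic behind these estimates can be reproduced with a few lines of Python. This is a minimal sketch that only restates the table's inputs (10K posts/sec, 800 images/sec at 2 MB each, 500M users with 30 cached posts); the function names are illustrative, and decimal units (1 TB = 1,000,000 MB) are assumed.

```python
# Back-of-envelope capacity estimates using the inputs from the table above.
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365


def annual_posts(posts_per_sec: int) -> int:
    """Posts created per year at a sustained write rate."""
    return posts_per_sec * SECONDS_PER_DAY * DAYS_PER_YEAR


def media_ingest_mb_per_sec(images_per_sec: int, avg_image_mb: int) -> int:
    """Raw image ingest rate in MB/sec."""
    return images_per_sec * avg_image_mb


def cached_post_ids(active_users: int, posts_per_timeline: int) -> int:
    """Total post IDs held across all cached timelines."""
    return active_users * posts_per_timeline


if __name__ == "__main__":
    mb_per_sec = media_ingest_mb_per_sec(800, 2)
    print(f"posts/year:      {annual_posts(10_000):,}")
    print(f"media ingest:    {mb_per_sec / 1_000:.1f} GB/sec")
    print(f"media per hour:  {mb_per_sec * 3_600 / 1_000_000:.2f} TB")
    print(f"cached post IDs: {cached_post_ids(500_000_000, 30):,}")
```

Note that 800 images/sec at 2 MB each is 1.6 GB every second, so an hour of ingest is several terabytes rather than 1.6 TB.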

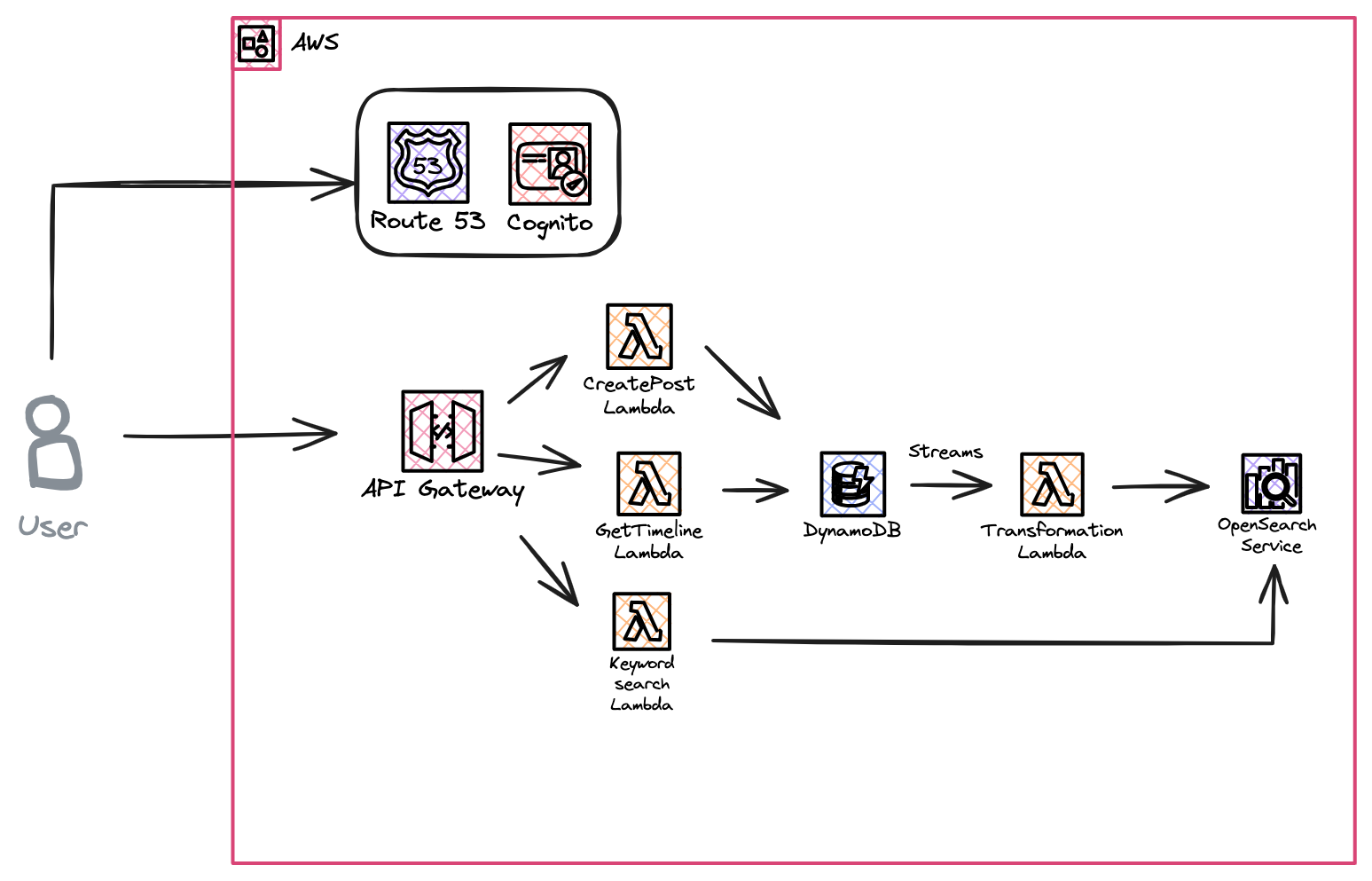

Day 0 Architecture: Getting Started

At launch, our social network prioritizes core functionality: user onboarding, post creation, and basic timeline delivery. We leverage AWS serverless services to minimize operational overhead while ensuring inherent scalability.

Core Components

- User Gateway (Amazon API Gateway)
  - Handles authentication/authorization via Amazon Cognito
  - Enforces rate limiting (1,000 requests/sec/user)
  - Routes requests to backend Lambda functions
- Post Service (AWS Lambda + DynamoDB)
  - Stores posts in DynamoDB with schema: PK = u#{userId}#posts | SK = p#{postId}
  - Handles media uploads to S3 with SHA-256 deduplication
  - Generates unique post IDs using Snowflake-like sequencing
- Graph Service (DynamoDB)
  - Manages follower relationships with optimized schema:
    - User metadata: PK = u#{userId} | SK = metadata
    - Followers: PK = u#{userId}#followers | SK = u#{followerId}
    - Following: PK = u#{userId}#following | SK = u#{followedId}
- Timeline Builder (Lambda + DynamoDB Streams)
  - Triggers on new post events from DynamoDB Streams
  - Retrieves follower list via Graph Service
  - Updates Redis Sorted Sets with: ZADD user_timeline:{followerId} timestamp postId
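To make the Post Service and Graph Service conventions concrete, here is a minimal sketch of the key builders, a content-addressed media key for SHA-256 deduplication, and a Snowflake-like ID generator. Only the PK/SK patterns come from the schema above; the bit layout (41-bit timestamp, 10-bit worker ID, 12-bit sequence), the custom epoch, the `media/` prefix, and every class and function name are assumptions made for illustration.

```python
import hashlib
import threading
import time

CUSTOM_EPOCH_MS = 1_700_000_000_000  # assumed epoch; any fixed past instant works


class SnowflakeLikeIds:
    """Time-ordered IDs: timestamp | 10-bit worker id | 12-bit sequence (assumed layout)."""

    def __init__(self, worker_id: int, clock=lambda: int(time.time() * 1000)):
        if not 0 <= worker_id < 1024:
            raise ValueError("worker_id must fit in 10 bits")
        self._worker_id = worker_id
        self._clock = clock
        self._lock = threading.Lock()
        self._last_ms = -1
        self._sequence = 0

    def next_id(self) -> int:
        with self._lock:
            now = self._clock()
            if now < self._last_ms:
                now = self._last_ms  # clock went backwards: keep IDs monotonic
            if now == self._last_ms:
                self._sequence = (self._sequence + 1) & 0xFFF
                if self._sequence == 0:
                    now = self._last_ms + 1  # sequence exhausted: borrow the next millisecond
            else:
                self._sequence = 0
            self._last_ms = now
            return ((now - CUSTOM_EPOCH_MS) << 22) | (self._worker_id << 12) | self._sequence


def post_key(user_id, post_id) -> dict:
    """DynamoDB key for a post, following PK = u#{userId}#posts | SK = p#{postId}."""
    return {"PK": f"u#{user_id}#posts", "SK": f"p#{post_id}"}


def follower_key(user_id, follower_id) -> dict:
    """DynamoDB key for one follower edge."""
    return {"PK": f"u#{user_id}#followers", "SK": f"u#{follower_id}"}


def media_object_key(data: bytes) -> str:
    """Content-addressed S3 key: identical bytes map to the same object."""
    return "media/" + hashlib.sha256(data).hexdigest()
```

Because the timestamp occupies the high bits, IDs sort by creation time, which keeps the `p#{postId}` sort key in chronological order if IDs are stored zero-padded or as numbers.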

Data Flow for Post Creation

- User submits post via /posts API endpoint
- Post Service:
  - Validates content (character limits, banned words)
  - Stores post in DynamoDB with TTL=365 days
  - Uploads media to S3 with x-amz-meta-origin-user user-defined metadata
- DynamoDB Stream triggers Timeline Builder Lambda
- For each follower:
  - Updates the follower's Redis Sorted Set with ZADD user_timeline:{followerId} timestamp postId
  - Maintains the 500-post limit via ZREMRANGEBYRANK user_timeline:{followerId} 0 -501
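The fan-out step can be sketched without a live Redis instance. The class below is a hypothetical in-memory stand-in for the sorted-set timelines the Timeline Builder maintains; since those timelines are sorted sets keyed by `user_timeline:{followerId}`, trimming mirrors `ZREMRANGEBYRANK key 0 -(limit+1)`, which drops the lowest-scored (oldest) members and keeps the newest `limit`. All class and function names are assumptions.

```python
from collections import defaultdict

TIMELINE_LIMIT = 500


class InMemoryTimelines:
    """Stand-in for Redis sorted sets: key -> {post_id: score}."""

    def __init__(self):
        self._sets = defaultdict(dict)

    def zadd(self, key, score, member):
        # Like ZADD: insert the member or update its score.
        self._sets[key][member] = score

    def trim_to_newest(self, key, limit):
        # Same effect as ZREMRANGEBYRANK key 0 -(limit + 1).
        entries = self._sets[key]
        if len(entries) > limit:
            newest = sorted(entries.items(), key=lambda kv: kv[1], reverse=True)[:limit]
            self._sets[key] = dict(newest)

    def newest_first(self, key, count):
        # Like ZREVRANGE key 0 count-1: highest scores first.
        entries = self._sets[key]
        return sorted(entries, key=entries.get, reverse=True)[:count]


def fan_out(store, post_id, timestamp, follower_ids, limit=TIMELINE_LIMIT):
    """Push one post ID into every follower's capped timeline."""
    for follower_id in follower_ids:
        key = f"user_timeline:{follower_id}"
        store.zadd(key, timestamp, post_id)
        store.trim_to_newest(key, limit)


if __name__ == "__main__":
    store = InMemoryTimelines()
    for i in range(5):
        fan_out(store, f"p{i}", timestamp=i, follower_ids=["alice", "bob"], limit=3)
    print(store.newest_first("user_timeline:alice", 10))
```

Using the post timestamp as the score means late-arriving events still land in the right position, which a plain list push would not guarantee.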

Search Integration

- Real-Time Indexing (Lambda + OpenSearch)
  - DynamoDB Stream triggers Indexer Lambda
  - Extracts hashtags/mentions via regex (the capture group drops the leading #, matching the indexed form below):

        hashtags = re.findall(r'\B#(\w*[a-zA-Z]+\w*)', post_content)

  - Updates OpenSearch index with inverted mappings:

        {
          "postId": "p123",
          "content": "AWS architecture #cloud",
          "hashtags": ["cloud"],
          "mentions": [],
          "timestamp": 1716840000
        }

- Search API (Lambda + OpenSearch)
  - Handles queries like GET /search?q=%23cloud (the # must be URL-encoded, otherwise it is treated as a fragment and never reaches the server)
  - Uses OpenSearch DSL for relevance scoring:

        {
          "query": {
            "match": {
              "hashtags": {"query": "cloud", "boost": 2.0}
            }
          }
        }
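The indexing and query steps can be sketched as two pure functions, which keeps them testable outside Lambda. The hashtag pattern is the one used by the Indexer (with a capture group so the stored tag has no leading `#`); the mention pattern, the lowercasing of tags, and the function names are assumptions added for illustration.

```python
import re

# Hashtags must contain at least one letter, so "#123" is ignored.
HASHTAG_RE = re.compile(r"\B#(\w*[a-zA-Z]+\w*)")
# Assumed mention pattern; the source only specifies the hashtag regex.
MENTION_RE = re.compile(r"\B@(\w+)")


def build_index_document(post_id: str, content: str, timestamp: int) -> dict:
    """Shape a post into the document the Indexer Lambda would send to OpenSearch."""
    return {
        "postId": post_id,
        "content": content,
        "hashtags": [tag.lower() for tag in HASHTAG_RE.findall(content)],
        "mentions": MENTION_RE.findall(content),
        "timestamp": timestamp,
    }


def hashtag_query(tag: str, boost: float = 2.0) -> dict:
    """Build the match query body for a hashtag search."""
    normalized = tag.lstrip("#").lower()
    return {"query": {"match": {"hashtags": {"query": normalized, "boost": boost}}}}


if __name__ == "__main__":
    print(build_index_document("p123", "AWS architecture #cloud", 1716840000))
    print(hashtag_query("#cloud"))
```

Normalizing the tag on both the write path and the read path is what lets `#Cloud` and `#cloud` resolve to the same indexed term.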

Initial Scalability Considerations

| Component | Scaling Mechanism | Throughput Target |
|---|---|---|
| API Gateway | Auto-scaling with 5000 RPS/stage | 50K RPS |
| Post Lambda | 1,000 concurrent executions | 10K posts/sec |
| Redis Cluster | 6 nodes (3 shards, each with 1 primary + 1 replica) | 100K ops/sec |
| DynamoDB | On-demand capacity mode | 40K WCU/RCU |
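A quick sanity check on the Lambda row uses Little's Law: concurrent executions ≈ request rate × average duration. The sketch below is illustrative (the function names are assumptions) and shows that 1,000 concurrent executions sustain 10K posts/sec only if the average invocation finishes within about 100 ms.

```python
import math


def required_concurrency(requests_per_sec: int, avg_duration_ms: int) -> int:
    """Little's Law: in-flight executions = arrival rate x time in system."""
    return math.ceil(requests_per_sec * avg_duration_ms / 1000)


def max_avg_duration_ms(requests_per_sec: int, concurrency_limit: int) -> float:
    """Longest average duration a concurrency limit can absorb at a given rate."""
    return concurrency_limit * 1000 / requests_per_sec


if __name__ == "__main__":
    print(required_concurrency(10_000, 100))    # concurrency needed at 100 ms/invocation
    print(max_avg_duration_ms(10_000, 1_000))   # duration budget under a 1,000 limit
    print(required_concurrency(10_000, 250))    # slower handlers need a higher limit
```

If validation or the S3 upload pushes the handler past that budget, either the concurrency limit must rise or the slow work should move off the request path.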

Cost Optimization:

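- For tables moved from on-demand to provisioned capacity, use DynamoDB auto scaling with a 70% target utilization and alerts on sustained breaches

- Enable S3 Intelligent-Tiering for media storage

- Tune Lambda memory settings (for example, across the 256MB-3GB range) to find the best cost/latency point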

Challenges & Mitigations

- Hot Partitions in DynamoDB
  - Problem: Celebrity users with 10M+ followers creating uneven load
  - Solution: Shard follower lists using virtual partitions: PK = u#{userId}#followers#{shardId}
- Redis Memory Pressure
  - Problem: 500M users × 500 posts = 250B cache entries
  - Solution: Set the volatile-lru eviction policy and apply a 30-day TTL to timeline keys
- Search Latency Spikes
  - Problem: Complex OpenSearch queries exceeding 500ms
  - Solution: Pre-warm indices with 24-hour retention
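The virtual-partition scheme for follower lists can be sketched as follows. Only the key pattern `u#{userId}#followers#{shardId}` comes from the text; choosing the shard by hashing the follower ID with SHA-256, the shard count, and the function names are assumptions.

```python
import hashlib


def follower_shard_id(follower_id: str, num_shards: int) -> int:
    """Stable shard assignment: the same follower always maps to the same shard."""
    digest = hashlib.sha256(follower_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def sharded_follower_pk(user_id: str, follower_id: str, num_shards: int) -> str:
    """Partition key used when writing a follower edge."""
    return f"u#{user_id}#followers#{follower_shard_id(follower_id, num_shards)}"


def all_follower_pks(user_id: str, num_shards: int) -> list:
    """Every partition key to query when reading the full follower list."""
    return [f"u#{user_id}#followers#{shard}" for shard in range(num_shards)]


if __name__ == "__main__":
    print(sharded_follower_pk("celebrity42", "follower7", num_shards=16))
    print(len(all_follower_pks("celebrity42", num_shards=16)))
```

A cryptographic hash is used instead of Python's built-in `hash()` because the latter is randomized per process for strings, which would scatter the same follower across shards between invocations. The trade-off is that reading the full list now takes one query per shard, which can run in parallel.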

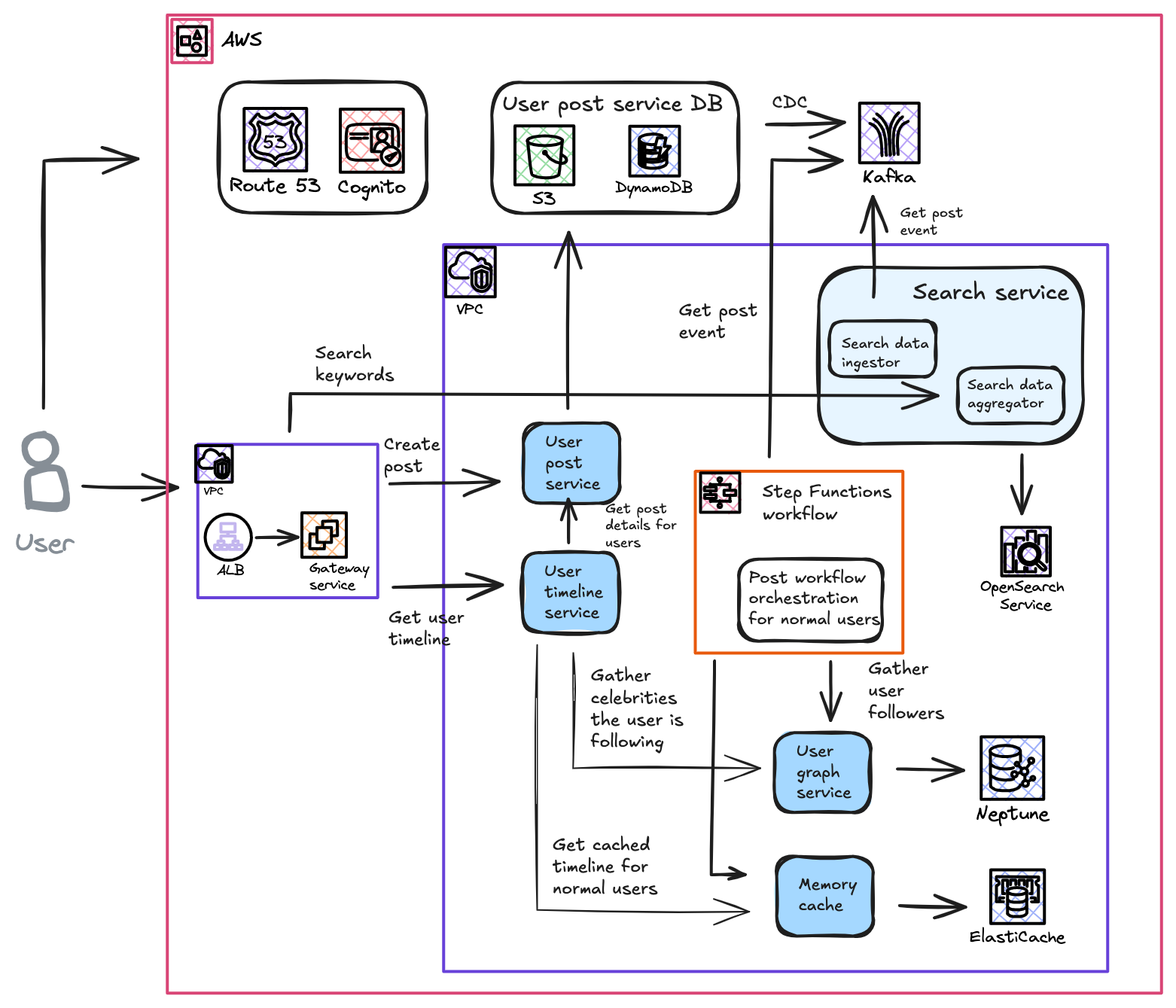

Hybrid Feed Delivery: Balancing Push and Pull Models

To optimize performance for diverse user bases, from casual users to celebrities with millions of followers, we implement a hybrid feed delivery strategy. This approach combines the push model (fan-out-on-write) for active users with the pull model (fan-out-on-read) for high-profile accounts, keeping latency low while minimizing wasted writes.

Push Model for Active Users

- When a user posts content, the system immediately retrieves their follower list and precomputes timelines.
- Post IDs are pushed to Redis Sorted Sets for each follower, maintaining a fixed-size timeline (e.g., 500 most recent posts).
- AWS Services Used:
  - DynamoDB Streams: Triggers Lambda on new posts.
  - Lambda: Executes fan-out logic (retrieves followers, updates Redis).
  - ElastiCache (Redis): Stores precomputed timelines with ZADD and ZREMRANGEBYRANK commands (LTRIM applies only to lists, not sorted sets).
- Optimizations:
  - Batching: Use Redis pipelining to reduce I/O overhead for high-follower accounts.
  - Sharding: Distribute follower lists across partitions to avoid DynamoDB hot partitions (e.g., u#{userId}#followers#{shardId}).
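The batching optimization amounts to grouping followers and sending each group's commands in a single pipelined round trip. The sketch below builds the command tuples rather than talking to Redis, so it runs anywhere; the batch size, function names, and the choice to represent commands as tuples are assumptions.

```python
from itertools import islice


def batched(items, batch_size):
    """Yield successive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while batch := list(islice(iterator, batch_size)):
        yield batch


def pipeline_commands(post_id, timestamp, follower_ids, limit=500):
    """Commands a single pipeline round trip would carry for one batch of followers."""
    commands = []
    for follower_id in follower_ids:
        key = f"user_timeline:{follower_id}"
        commands.append(("ZADD", key, timestamp, post_id))
        # Keep only the newest `limit` entries in the sorted set.
        commands.append(("ZREMRANGEBYRANK", key, 0, -(limit + 1)))
    return commands


if __name__ == "__main__":
    followers = [f"user{i}" for i in range(5)]
    for batch in batched(followers, batch_size=2):
        print(len(pipeline_commands("p123", 1716840000, batch)), "commands in this round trip")
```

With a real client, each batch's commands would be queued on a pipeline object and executed once, so network round trips scale with the number of batches instead of the number of followers.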

Pull Model for Celebrity Accounts

Celebrities (e.g., 100M+ followers) generate unsustainable write amplification with pure push. Instead:

- Posts are stored in DynamoDB with a celebrity flag.
- Timelines for followers are built on-demand during read requests.
- Query Pattern:

      # Retrieve celebrity posts newer than the reader's last-seen timestamp.
      # Key conditions cannot embed literals, so values are passed as placeholders.
      celebrity_posts = query_dynamodb(
          IndexName="CelebrityPostsIndex",
          KeyConditionExpression="PK = :pk AND SK > :timestamp",
          ExpressionAttributeValues={":pk": "celebrity", ":timestamp": last_seen_timestamp},
      )

- AWS Services Used:
  - DynamoDB Global Secondary Indexes (GSI): Enable fast lookups for celebrity posts.
  - OpenSearch: Real-time indexing for keyword/hashtag searches.

Hybrid Workflow

- Post Creation:
  - All posts are stored in DynamoDB and indexed in OpenSearch.
  - For non-celebrity users:
    - Lambda triggers via DynamoDB Streams to push post IDs to followers' Redis timelines.
  - For celebrities:
    - Posts skip fan-out and rely on real-time retrieval.
- Timeline Retrieval:
  - Active users:
    - Fetch the precomputed timeline from Redis (a sorted-set range read is O(log N + M), which is effectively constant for a timeline capped at 500 entries).
    - Merge with real-time celebrity posts using a priority queue.
  - Inactive users:
    - Build timeline dynamically from DynamoDB/OpenSearch.
- Ranking:
  - A Lambda-based ranking service applies ML models to prioritize posts (e.g., engagement likelihood).
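The merge step during timeline retrieval is a k-way merge of streams that are each already sorted newest-first, which Python's `heapq.merge` performs with a priority queue internally. This is a minimal sketch assuming every stream is a list of `(timestamp, post_id)` tuples in descending timestamp order; the function name and page size are illustrative.

```python
import heapq


def merge_timelines(cached, celebrity_feeds, limit=50):
    """Merge a precomputed timeline with per-celebrity feeds, newest first.

    Every input must already be sorted by timestamp in descending order.
    """
    streams = [cached, *celebrity_feeds]
    merged = heapq.merge(*streams, key=lambda entry: entry[0], reverse=True)

    seen = set()
    page = []
    for _timestamp, post_id in merged:
        if post_id in seen:
            continue  # the same post can appear in more than one stream
        seen.add(post_id)
        page.append(post_id)
        if len(page) == limit:
            break  # the merge is lazy, so older entries are never touched
    return page


if __name__ == "__main__":
    cached = [(300, "p3"), (100, "p1")]
    celebrity_feeds = [[(250, "c2"), (50, "c0")]]
    print(merge_timelines(cached, celebrity_feeds))
```

Because the merge is lazy, fetching one page costs roughly O(limit × log k) for k streams, regardless of how long each feed is.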

AWS Architecture Highlights

| Component | Role | AWS Service |
|---|---|---|
| Post Ingestion | Store posts and metadata | DynamoDB, S3 |
| Fan-Out | Distribute posts to followers | Lambda, SNS/SQS |
| Timeline Cache | Store precomputed feeds | ElastiCache (Redis) |
| Real-Time Merge | Combine cached and celebrity posts | Lambda, API Gateway |
| Search & Discovery | Keyword/hashtag queries | OpenSearch |

Challenges & Solutions

- Celebrity Post Latency
  - Solution: Edge caching with CloudFront for media, while metadata is fetched via DynamoDB Accelerator (DAX).
- Cache Invalidation
  - Solution: TTL-based eviction (30 days) + LRU policy for Redis.
- Consistency
  - Tradeoff: Accept 2-minute eventual consistency for likes/shares to reduce database load.

Day N Architecture: Beyond Getting Started

The scope of a user timeline can grow over time to include posts selected by interest, location, or other parameters. The primary requirement remains showing all posts from the people a user follows; additional components can be added as the system evolves. We should therefore identify which components are critical for operating the system and serving users. Per-user ranking and injecting additional posts are extras built to keep users engaged.

One key recommendation is to assess and categorize the criticality of each application within the system. Each subsystem should be assigned a priority level. For example, Amazon employs a tiered model: Tier 1 covers highly critical systems that have a direct effect on customers, Tier 2 includes moderately critical systems where customer experience may be slightly degraded (e.g., an order shipping a day late), and Tier 3 involves low-priority systems that enhance the customer experience but are not essential. This classification enables teams to prioritize recovery efforts during downtime and ensures that Tier 1 systems undergo additional rigorous testing before any production changes are introduced.

Security and Cost Considerations

Security is foundational in any social network, especially at the scale described here. Leveraging AWS’s shared responsibility model, you benefit from robust infrastructure protections while retaining control over how you secure your own application and data. Key security practices for this architecture include:

- Identity and Access Management (IAM): Assign individual IAM users for every admin or automation, enforce least-privilege access, and rotate credentials regularly.

- Authentication and Authorization: Use managed services like Amazon Cognito for user authentication, which provides out-of-the-box support for multi-factor authentication (MFA), compliance standards (HIPAA, PCI DSS), and seamless integration with other AWS services.

- Encryption: All sensitive data (user posts, media, and metadata) should be encrypted at rest (using AWS KMS) and in transit (using TLS 1.2 or above). S3 and DynamoDB both apply server-side encryption by default.

- Network Security: Protect APIs and backend services using AWS WAF and AWS Shield for DDoS mitigation, and consider AWS Network Firewall for deeper inspection and filtering of VPC traffic.

- Monitoring and Logging: Enable AWS CloudTrail and Amazon CloudWatch to log API calls, detect anomalies, and support incident response.

- Data Protection: Avoid placing confidential information in tags or free-form fields, and use services like Amazon Macie to discover and secure sensitive data in S3.

Operating a global-scale social network on AWS requires careful cost management to avoid runaway expenses while maintaining performance. Some essential cost strategies include:

- Right-Sizing and Auto-Scaling: Use AWS Compute Optimizer to right-size EC2, RDS, and other compute resources. Enable auto-scaling for EC2, Lambda, and DynamoDB to match capacity with demand, preventing over-provisioning.

- Pricing Models: Mix On-Demand, Reserved, and Spot Instances for compute workloads. For predictable usage, Reserved Instances and Savings Plans can reduce costs by up to 72%.

- Storage Tiering: Move infrequently accessed data (old posts, media) to lower-cost storage classes like S3 Glacier or Intelligent-Tiering. Use S3 lifecycle policies to automate data transitions and deletions.

- Efficient Data Transfer: Minimize cross-region transfers and leverage AWS PrivateLink and Global Accelerator to reduce data transfer costs and latency.

- Serverless Optimization: Tune Lambda memory and execution time, and use API Gateway throttling to avoid unnecessary invocations. Use DynamoDB on-demand capacity mode for spiky or unpredictable traffic to avoid paying for idle provisioned capacity.

- Monitoring and Alerts: Set up AWS Budgets and Cost Explorer to track spending and receive alerts for unexpected spikes.
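The storage-tiering strategy is typically expressed as an S3 lifecycle configuration. The sketch below builds one as a plain Python dictionary in the shape accepted by boto3's `put_bucket_lifecycle_configuration` (as its `LifecycleConfiguration` argument). The `media/` prefix, the rule ID, and the 30-day and 365-day thresholds are assumptions chosen for illustration, not recommendations from this architecture.

```python
def media_lifecycle_configuration(prefix="media/", tiering_after_days=30, archive_after_days=365):
    """Build a lifecycle configuration that tiers, then archives, older media."""
    if archive_after_days <= tiering_after_days:
        raise ValueError("archive_after_days must be greater than tiering_after_days")
    return {
        "Rules": [
            {
                "ID": "tier-and-archive-media",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    # Let S3 move objects between access tiers automatically.
                    {"Days": tiering_after_days, "StorageClass": "INTELLIGENT_TIERING"},
                    # Archive media that is at least a year old.
                    {"Days": archive_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


if __name__ == "__main__":
    import json

    print(json.dumps(media_lifecycle_configuration(), indent=2))
```

Keeping the rule in code makes the thresholds reviewable and easy to adjust as access patterns become clearer.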

Conclusion

Designing a scalable, secure, and cost-effective social network and newsfeed system on AWS involves a blend of architectural best practices and cloud-native features. By segmenting user types (normal vs. celebrity), adopting a hybrid push-pull feed delivery model, and leveraging managed AWS services for storage, caching, search, and analytics, you can build a platform capable of serving hundreds of millions of users with low latency and high reliability.

Security is enforced at every layer, from IAM and encryption to network protection and monitoring, so that user data remains safe and compliant with global regulations. Cost optimization is an ongoing process, achieved through right-sizing, tiered storage, serverless efficiency, and vigilant monitoring.

Ultimately, the key to success lies in starting simple (Day 0), iterating as scale and requirements grow (Day N), and continuously evolving the architecture to meet the demands of a dynamic, global user base. AWS provides the building blocks; thoughtful design and disciplined operations turn them into a world-class social platform.