Meta Llama 4 on AWS Bedrock: A Deep Dive into Open-Source LLM Innovation

A Deep Dive into Meta Llama 4 on AWS Bedrock: Capabilities & Features

Generative AI keeps moving quickly, and Meta Llama 4, Meta's newest open-weight large language model (LLM), is part of that pace. Introduced in April 2025, Meta Llama 4 represents a significant milestone for open-source LLMs. Its availability within AWS Bedrock lets companies use this powerful model without managing infrastructure, which makes Llama 4 on AWS Bedrock an attractive option for businesses that require scalability, security, and ease of use.

In this post, we dive into the capabilities of Meta Llama 4, how it compares to other models such as GPT-4, and what running Llama 4 on AWS means for your AI strategy.

What is Meta Llama 4?

Meta Llama 4 is part of Meta's Llama series (originally LLaMA, Large Language Model Meta AI), designed to provide open-access, high-performing LLMs for research and enterprise innovation. Meta released Llama 4 in two primary variants, Llama 4 Scout and Llama 4 Maverick, each with an instruction-tuned version for chat and assistant use. According to Meta's official AI blog, the architecture emphasizes:

- Larger training datasets (15T+ tokens)

- Improved context understanding (128K context window)

- Better multilingual performance

- Enhanced reasoning and code generation

Unlike the providers of closed models such as GPT-4, Meta continues to champion openness by providing model weights for academic and commercial use, subject to its license terms.

Llama 4 Capabilities

Meta Llama 4 introduces a host of advanced capabilities that elevate it beyond its predecessors:

- Instruction Following: Fine-tuned to understand and respond to instructions in natural language, making it suitable for task automation, agents, and productivity tools.

- Enhanced Reasoning: Stronger performance on reasoning benchmarks like MMLU and GSM8K, beneficial for financial analysis, strategic planning, and decision support systems.

- Code Proficiency: Excellent at generating, completing, and explaining code in multiple languages (e.g., Python, JavaScript), enabling software engineering applications.

- Multilingual Understanding: Trained on a wider linguistic corpus to support international business use cases.

- Agentic Behavior: Supports the development of autonomous agents by maintaining context and goal-driven outputs.

These Llama 4 capabilities are instrumental for deploying intelligent solutions across industries, especially when used within the AWS ecosystem.

Why AWS Bedrock?

AWS Bedrock provides a serverless environment for accessing foundation models through an API, with no hardware provisioning. It hosts a broad portfolio of leading models such as Amazon Titan, Claude (Anthropic), Mistral, Cohere, and now Meta Llama 4.

Hosting Llama 4 on AWS Bedrock helps organizations to:

- Steer clear of infrastructure complexity

- Scale applications seamlessly with auto-managed capacity

- Leverage AWS-native integrations for security and governance

- Prototype quickly with Amazon SageMaker, Lambda, and more

Discover more in our post on Advancing Generative AI with AWS Bedrock.

Official AWS Bedrock Documentation

Meta Llama 4 Features on AWS Bedrock

Some standout Meta Llama 4 features available via AWS Bedrock include:

- 128K Context Length: Enables deeper, document-level comprehension and long conversations.

- Multimodal Capability: Meta describes the Llama 4 models as natively multimodal, accepting text and image input, which sets the stage for vision + language interfaces.

- Optimized for Chat: The instruction-tuned Llama 4 models are fine-tuned for dialogue, customer support, and agentic applications.

- Scalability on Demand: Seamless scalability using AWS compute and Bedrock APIs.

- Secure Deployment: Enterprise-grade compliance with IAM, KMS, CloudWatch, and more

This positions Llama 4 as a key player among open-source LLMs on AWS.

See how AWS is enhancing trust with Amazon Bedrock Guardrails.
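As one illustration of the IAM-based controls mentioned above, the following sketch builds a least-privilege policy document that allows invoking a single foundation model. The function name, Region, and model ID are assumptions made for illustration, not values from this post; confirm the exact model identifier in the Bedrock console before using anything like this.

```python
import json


def build_invoke_policy(region: str, model_id: str) -> dict:
    """Return an IAM policy document that allows invoking one Bedrock model."""
    # Foundation-model ARNs leave the account field empty.
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeSingleModel",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": model_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder Region and model ID, assumed for illustration only.
    policy = build_invoke_policy("us-east-1", "meta.llama4-scout-17b-instruct-v1:0")
    print(json.dumps(policy, indent=2))
```

If the model is reached through a cross-Region inference profile, the policy would likely also need that profile's ARN as a resource.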

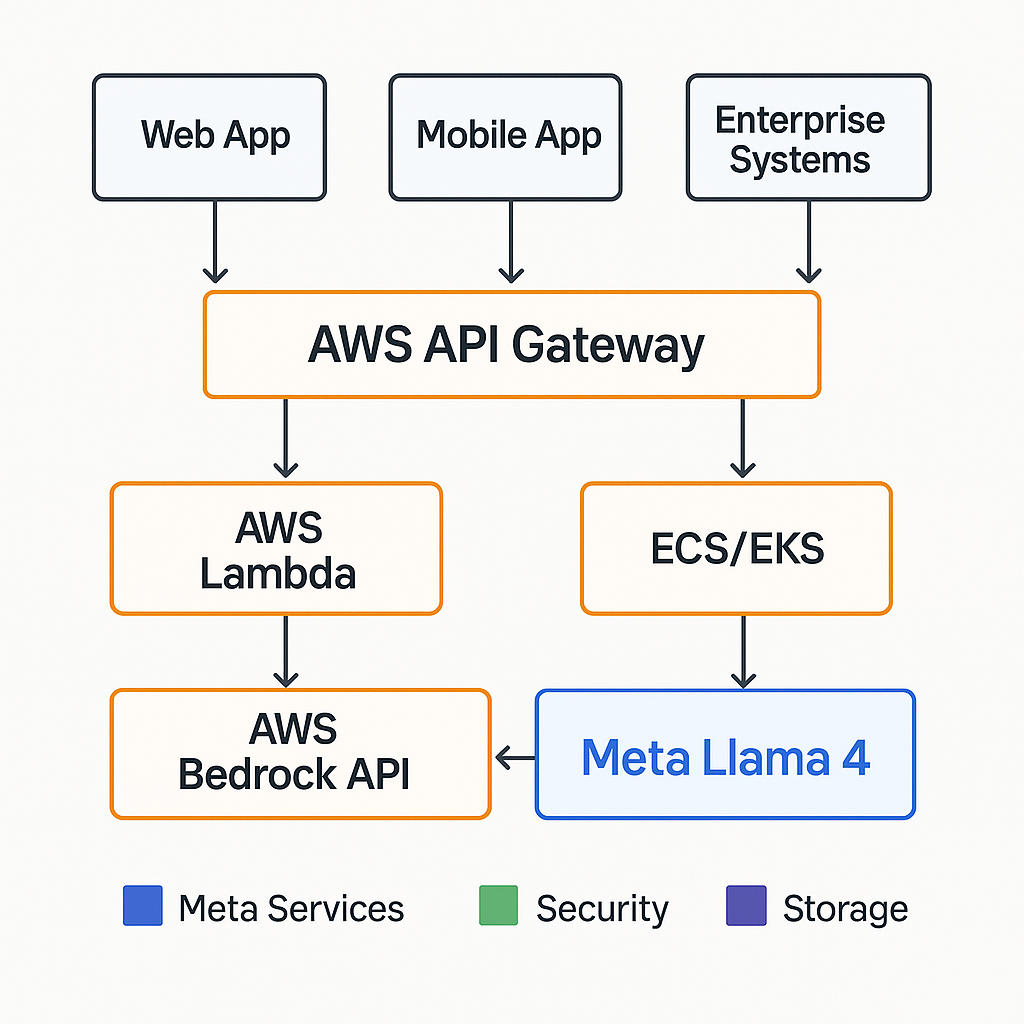

Architecture for Deploying Llama 4 on AWS Bedrock

Here’s a simplified reference architecture for deploying Llama 4 on AWS Bedrock:

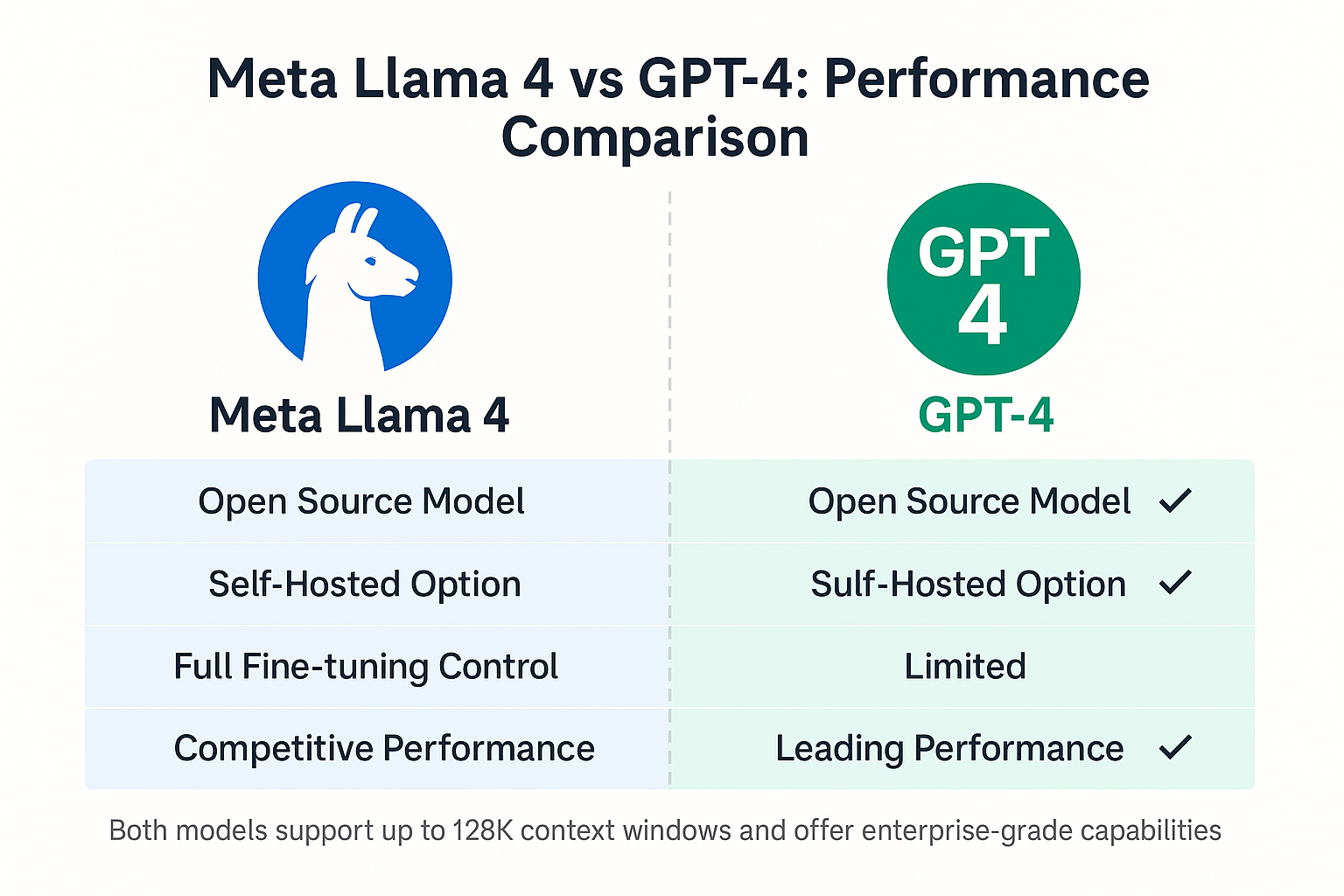

Llama 4 vs GPT-4: How Does It Compare?

Llama 4 vs GPT-4 is a hot topic in AI communities. Here's how they measure up:

| Feature | Meta Llama 4 | OpenAI GPT-4 |
|---|---|---|
| Licensing | Open weights (with usage terms) | Closed (API only) |
| Context Window | 128K | 128K |
| Fine-tuning Support | Allowed | Limited (through OpenAI's managed service) |
| Code Understanding | High | Very High |
| Multimodal Support | Text and image input | Available |

Llama 4 excels in customization and cost-effectiveness, while GPT-4 is a strong choice for teams that prefer a fully managed, closed API.

Use Cases Powered by Llama 4 on AWS

Here are some real-world use cases:

- Customer Support Chatbots: Enhance intent recognition and tone adaptation

- Document Summarization: Legal, research, and healthcare workflows

- Code Generation: IDE integrations, testing frameworks

- Semantic Search: Combined with Amazon Kendra or OpenSearch

- Multilingual Assistants: Education and tourism sectors
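For the semantic search use case, the retrieval step (Amazon Kendra or OpenSearch) returns passages that still have to be packed into a prompt. The sketch below shows one way to do that in plain Python. The function name, the character budget, and the instruction wording are all assumptions for illustration; a production system would more likely budget by tokens than by characters.

```python
def build_rag_prompt(question: str, passages: list[str], max_chars: int = 4000) -> str:
    """Assemble a grounded prompt from retrieved passages within a character budget."""
    selected = []
    used = 0
    for index, passage in enumerate(passages, start=1):
        entry = f"[{index}] {passage.strip()}"
        if used + len(entry) > max_chars:
            break  # stop once the next passage would exceed the budget
        selected.append(entry)
        used += len(entry)
    context = "\n".join(selected)
    return (
        "Answer the question using only the numbered passages below. "
        "If the answer is not in the passages, say so.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # Hypothetical passages standing in for search results.
    print(build_rag_prompt(
        "Which service hosts the model?",
        ["AWS Bedrock hosts foundation models behind an API.",
         "CloudWatch collects metrics."],
    ))
```

Numbering the passages lets the model cite which one supported its answer, which makes the output easier to audit.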

AWS Bedrock Supported Models

As of 2025, AWS Bedrock supported models include:

- Meta Llama 4

- Anthropic Claude 3

- Mistral 7B and Mixtral

- Amazon Titan

- Cohere Command R+

- Stability AI (image generation)

This rich ecosystem allows developers to mix and match LLMs based on performance, cost, and licensing needs.
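To see which models are actually enabled for mixing and matching in a given Region, the Bedrock control-plane API can list them. The sketch below groups model IDs by provider. The helper names and the default Region are assumptions for illustration; `fetch_text_models` requires the `boto3` package and valid AWS credentials, which is why the SDK import is kept inside that function.

```python
def summarize_models(model_summaries: list[dict]) -> dict[str, list[str]]:
    """Group Bedrock model IDs by provider name, sorted for stable output."""
    grouped: dict[str, list[str]] = {}
    for summary in model_summaries:
        grouped.setdefault(summary["providerName"], []).append(summary["modelId"])
    return {provider: sorted(ids) for provider, ids in sorted(grouped.items())}


def fetch_text_models(region: str = "us-east-1") -> dict[str, list[str]]:
    """List text-output foundation models; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so summarize_models works without the SDK

    client = boto3.client("bedrock", region_name=region)
    response = client.list_foundation_models(byOutputModality="TEXT")
    return summarize_models(response["modelSummaries"])


if __name__ == "__main__":
    # Hand-written sample in the shape of the API response, not real output.
    sample = [
        {"providerName": "Meta", "modelId": "example-meta-model"},
        {"providerName": "Anthropic", "modelId": "example-anthropic-model"},
    ]
    print(summarize_models(sample))
```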

For tools that boost LLM applications, see LangChain for Smarter AI Apps.

Key Advantages of Llama 4 on AWS for Enterprises

- Open Source Innovation: Greater transparency and auditability

- Seamless Integration: Plug into AWS services like SageMaker, IAM, and Bedrock APIs

- Multi-tenancy & Security: Support for VPC, private APIs, and encryption

- Low Latency & High Throughput: Ideal for production-grade AI workloads

Explore how Infoservices integrates AI into enterprise platforms like AWS and Databricks at infoservices.com.

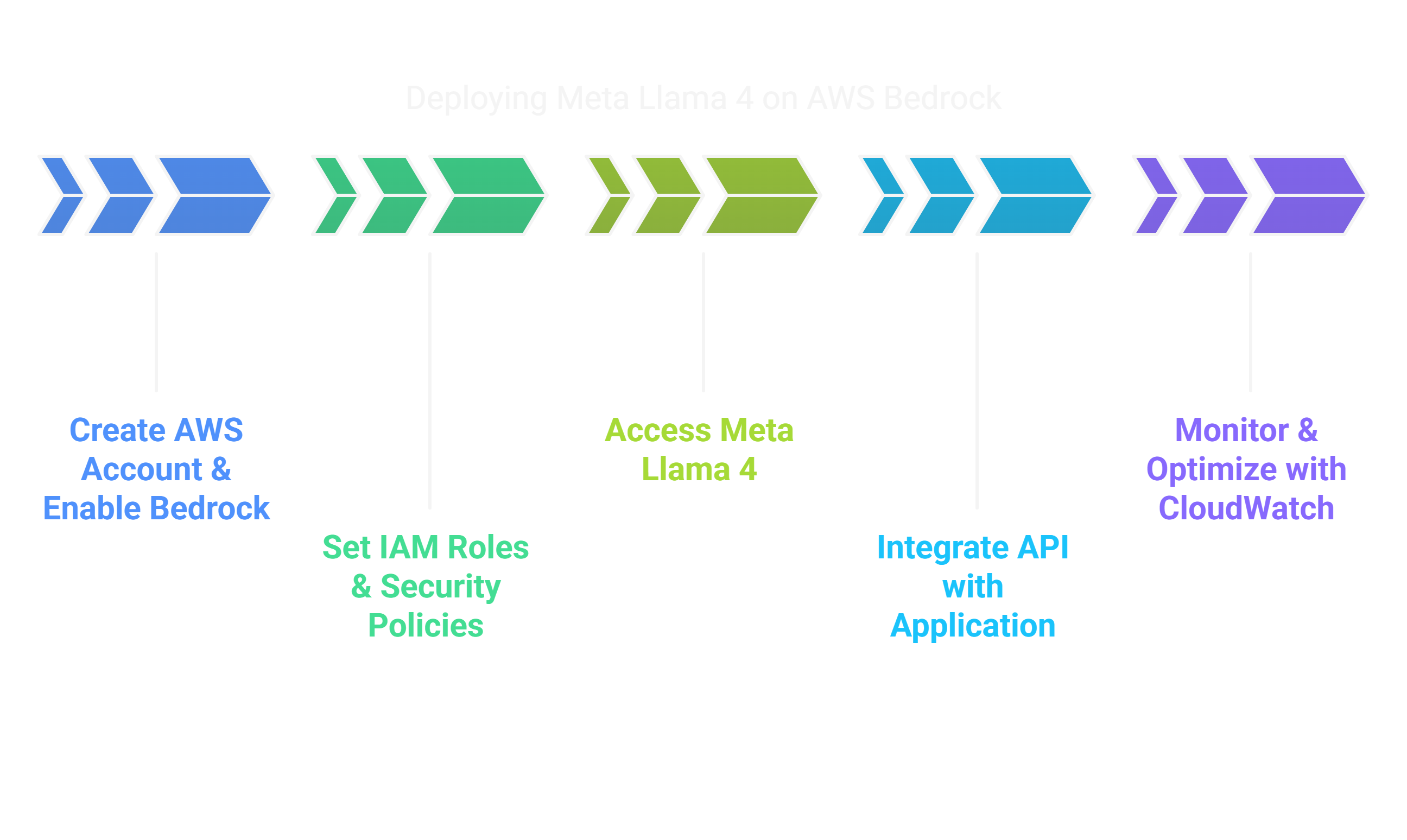

Getting Started: Steps to Deploy Llama 4 on AWS Bedrock

- Create AWS Account & Enable Bedrock

- Set IAM Roles & Security Policies

- Access Meta Llama 4 via Console or SDKs

- Integrate API with Your Application

- Monitor & Optimize with CloudWatch Metrics

Check this AWS Getting Started Guide for hands-on setup.
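The "access via SDKs" and "integrate API" steps above can be sketched with the Bedrock Runtime Converse API in Python. This is a minimal sketch, not a definitive implementation: the model ID, Region, and helper names are assumptions for illustration, and `ask_llama` needs the `boto3` package, AWS credentials, and model access enabled in your account.

```python
# Assumed placeholder: confirm the exact model or inference-profile ID in the
# Bedrock console before use; it is not taken from this post.
MODEL_ID = "us.meta.llama4-scout-17b-instruct-v1:0"


def build_converse_request(model_id: str, prompt: str,
                           max_tokens: int = 512, temperature: float = 0.5) -> dict:
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def extract_text(response: dict) -> str:
    """Join the text blocks of a Converse response message."""
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)


def ask_llama(prompt: str, model_id: str = MODEL_ID, region: str = "us-east-1") -> str:
    """Send one user prompt to the model and return the reply text."""
    import boto3  # imported lazily so the helpers above work without the SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(model_id, prompt))
    return extract_text(response)


if __name__ == "__main__":
    # Prints the request payload only; no AWS call is made here.
    print(build_converse_request(MODEL_ID, "Summarize AWS Bedrock in one sentence."))
```

Keeping the request builder separate from the network call makes the payload easy to unit test. The Converse response also carries `usage` and `metrics` fields, which can complement CloudWatch when tracking token counts and latency.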

Final Thoughts

Meta Llama 4 on AWS Bedrock combines open innovation with enterprise-grade scalability. Whether you're exploring generative AI, deploying intelligent agents, or upgrading customer workflows, Llama 4 offers a flexible, cost-effective solution.

If you’re looking to harness Llama 4 for your enterprise AI strategy, Infoservices offers deep expertise in generative AI, cloud-native development, and data engineering.

FAQs

1. What is Meta Llama 4 and how is it different from previous Llama models?

Meta Llama 4 is the latest generation of Meta’s large language models, designed for improved performance, reasoning, and efficiency compared to earlier versions. It offers both base and instruction-tuned variants optimized for enterprise applications.

2. What are the core capabilities of Meta Llama 4 on AWS Bedrock?

When deployed via AWS Bedrock, Llama 4 supports text generation, summarization, question answering, RAG (retrieval-augmented generation), and secure multi-tenant model access without managing infrastructure.

3. How can developers access Meta Llama 4 through AWS Bedrock?

Developers can access Llama 4 via AWS Bedrock’s API, SDK, or integration with tools like Amazon SageMaker Studio, Amazon Kendra (for RAG), and LangChain for agent-based workflows.

4. Does Meta Llama 4 support multimodal input (text + image)?

Yes. Meta describes the Llama 4 models as natively multimodal, accepting text and image input and producing text output. Check the model details in the AWS Bedrock console to confirm which input types are enabled for the model and Region you use.

5. What are the best use cases for Llama 4 on AWS Bedrock?

Ideal use cases include enterprise search, customer support chatbots, document summarization, and private RAG applications, all benefiting from Bedrock’s security and scalability.

6. Is Meta Llama 4 better than Claude or Mistral on AWS Bedrock?

Llama 4 excels at reasoning and instruction-following, but the “best” model depends on the specific use case. AWS Bedrock allows testing different models (Claude, Mistral, etc.) under a unified interface.

7. How does Llama 4 ensure data privacy on AWS Bedrock?

AWS Bedrock isolates customer data, doesn’t store prompts or responses unless you enable model invocation logging, and uses AWS-native security controls like IAM, VPC, and KMS to enforce data privacy and compliance.