The Rise of Adversarial Attacks on Agentic Models: Is Your AI Prepared?

As artificial intelligence (AI) transforms industries by automating processes and enhancing decision-making capabilities, agentic AI models have become increasingly popular. These autonomous agents operate independently, learning from their environments and making decisions without human intervention. However, with this autonomy comes a rising concern adversarial attacks designed to exploit vulnerabilities in these sophisticated models. Understanding this growing threat and preparing your AI infrastructure accordingly is now crucial.

Understanding Adversarial Attacks on Agentic Models

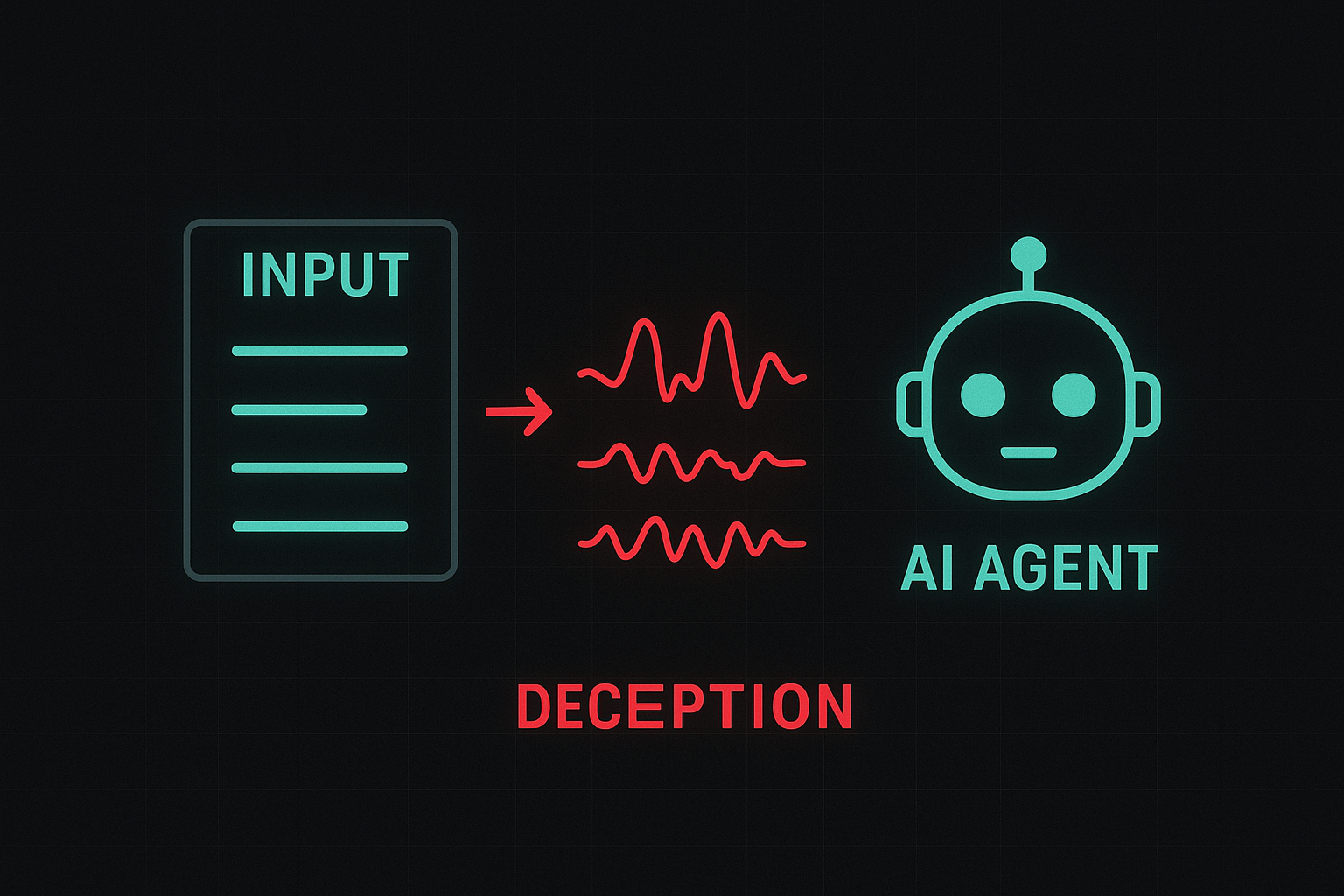

Adversarial attacks involve intentionally manipulated inputs, often subtle and difficult to detect, designed to deceive AI models into producing incorrect or unintended outputs. Agentic models, due to their autonomous decision-making abilities, are particularly susceptible because they continuously adapt based on environmental interactions.

Unlike traditional AI models, agentic systems often operate in dynamic, real-world scenarios. This characteristic, combined with their autonomy, makes them attractive targets. Attackers craft inputs that might appear normal to human observers but drastically mislead the agentic model, compromising functionality, safety, and trust. For a deeper understanding of adversarial attacks, visit IBM's resource on adversarial machine learning.

Why Adversarial Attacks are Rising

Several factors contribute to the rapid rise of adversarial attacks on agentic models:

- Increased Adoption of Agentic Models: Businesses in sectors like finance, healthcare, autonomous driving, and IoT are increasingly relying on autonomous agents for critical decisions, creating attractive targets.

- Availability of Attack Tools and Methods: The democratization of adversarial attack tools through open-source communities means attackers can easily experiment and deploy sophisticated techniques.

- Sophistication and Accessibility: Attack methods are evolving rapidly, becoming both more sophisticated and easier for even non-technical malicious actors to use.

- Expanding Attack Surface: As agentic AI is integrated into diverse industries, new vulnerabilities emerge from complex interactions across different domains and systems.

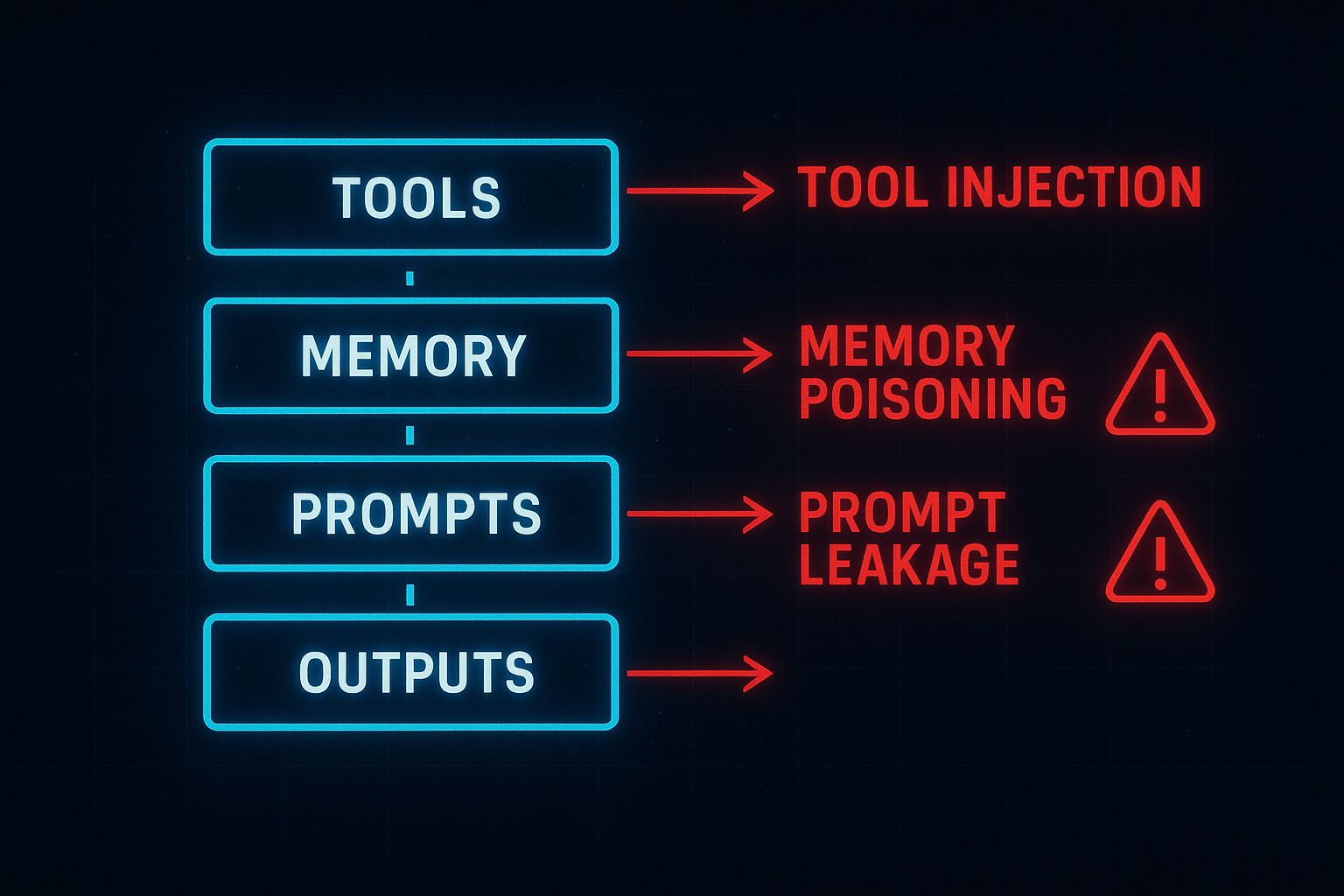

Adversaries aren’t just jailbreaking prompts anymore — they’re targeting the orchestration layer. Key vulnerabilities include:

- Tool Injection: Malicious inputs that trigger unintended tool use (e.g., invoking delete_file() instead of summarize()).

- Memory Poisoning: Polluting long-term memory with false context or misleading instructions.

- Prompt Leakage: Extracting system prompts, API keys, or internal logic via cleverly crafted queries.

- Cascading Hallucinations: One agent’s error propagates through the chain, leading to flawed outputs or unsafe actions.

- Vibe Scraping: Agents trained to mimic tone or style may inadvertently replicate toxic or manipulative content.

Real-World Examples and Implications

Recent incidents have highlighted the real-world implications of adversarial attacks on agentic AI:

- Autonomous Vehicles: Attacks involving subtly altered road signs have misled self-driving cars, potentially causing severe safety hazards. Read more on adversarial attacks on autonomous vehicles in IEEE Spectrum.

- Healthcare: Adversarial inputs have been demonstrated to trick AI-driven diagnostic tools into misclassifying medical images, potentially leading to life-threatening misdiagnoses. Explore healthcare-specific challenges in Nature Medicine's overview.

- Financial Systems: Autonomous trading agents have been manipulated using adversarial market data, leading to significant financial losses.

These incidents illustrate that adversarial attacks are not merely theoretical threats they carry severe, tangible consequences across multiple critical sectors.

Identifying Vulnerabilities in Agentic AI

Understanding how adversarial attacks exploit agentic models is essential. Vulnerabilities typically occur in the following areas:

- Perception Systems: Manipulating input data that the AI relies upon (e.g., visual recognition systems in autonomous vehicles).

- Decision-making Algorithms: Exploiting algorithmic weaknesses that arise when agents encounter novel or unexpected scenarios.

- Learning Mechanisms: Injecting malicious data during training phases, leading the model astray over time.

Proactive Strategies for Protecting Your AI

To mitigate the rising threats posed by adversarial attacks, businesses must adopt proactive and comprehensive security measures:

1. Robust Training and Validation

- Implement adversarial training where agentic models are exposed to adversarial examples during their training process, enhancing resilience.

- Regularly update and validate AI models with realistic attack simulations. Learn more about adversarial training methods at OpenAI's research page.

2. Enhanced Detection Systems

- Deploy advanced detection techniques that monitor AI behavior in real-time, flagging deviations indicative of adversarial influence.

- Utilize anomaly detection tools integrated with machine learning to continuously scan inputs.

3. Explainability and Transparency

- Integrate Explainable AI (XAI) techniques that enable teams to interpret agent decisions clearly, thereby detecting when an AI behaves anomalously.

- Maintain transparency through model audits and detailed documentation of decision-making processes.

4. Strengthening Infrastructure and Access Controls

- Enforce strict access controls to protect against unauthorized training data modification.

- Conduct regular security audits, including penetration testing tailored specifically to AI systems.

Future Trends in Adversarial AI Security

Looking forward, several trends will influence how adversarial threats are managed:

- AI Security Operations Centers (AI-SOCs): Specialized SOCs focused explicitly on detecting, responding to, and preventing AI-specific threats.

- Collaborative Threat Intelligence: Increased industry-wide collaboration to share intelligence on emerging adversarial threats, improving collective defense mechanisms.

- Regulatory Frameworks: New guidelines and regulations to enforce minimum security standards, ensuring that deployed agentic models meet strict cybersecurity criteria.

Conclusion: Preparing Your AI for the Adversarial Future

Adversarial attacks on agentic models represent a growing threat landscape with severe consequences for organizations across all industries. Ensuring preparedness means adopting a proactive, comprehensive security approach that integrates robust training, continuous monitoring, transparency, and collaborative intelligence.

Ultimately, AI security should be viewed not as a one-time fix but as an ongoing, evolving process integral to the development lifecycle of autonomous systems. Organizations that invest early and consistently in AI security will maintain a competitive edge, protecting their reputation, assets, and stakeholders. Is your AI truly prepared for the adversarial future? Now is the time to ensure that it is because the cost of unpreparedness may be far greater than the investment in proactive protection.

FAQ'S

Q1. What are adversarial attacks in AI?

Adversarial attacks are intentional manipulations of input data that trick AI models into making incorrect or unintended decisions. These inputs are often subtle, making them difficult for humans to detect but highly effective at misleading AI.

Q2. Why are agentic AI models more vulnerable to adversarial attacks?

Agentic AI models operate autonomously, learning and adapting in real-world environments. Their independence and continuous learning make them attractive targets because manipulated inputs can easily alter their decision-making process.

Q3. What industries are most at risk from adversarial attacks?

Industries such as autonomous vehicles, healthcare, finance, and IoT are highly at risk. Adversarial attacks in these sectors can lead to safety hazards, misdiagnoses, financial losses, or compromised trust.

Q4. What are the common types of adversarial vulnerabilities in agentic models?

Some of the key vulnerabilities include:

- Tool Injection – Malicious inputs trigger unintended tool usage.

- Memory Poisoning – Polluting AI memory with false or misleading data.

- Prompt Leakage – Extracting hidden system prompts or API keys.

- Cascading Hallucinations – Errors from one agent spreading across systems.