Mastering Vector Search with Azure AI Search: Step-by-Step Implementation

As AI adoption grows across industries, so does the demand for more intelligent, contextual, and semantically rich search experiences. Traditional keyword-based search engines often fall short in providing relevant results, especially when users pose complex or natural language queries. That’s where vector search comes in — and Azure AI Search now supports it natively.

In this blog, we'll explore how to implement vector search with Azure AI Search, from understanding the concept to hands-on steps, best practices, and integration tips.

What is Vector Search?

Vector search (often loosely called semantic search, although Azure AI Search also has a separate feature named semantic ranker) finds similar items based on the proximity of high-dimensional vectors rather than by matching keywords. Instead of relying on exact term matches, it translates text (such as documents or queries) into numerical representations, called embeddings, that capture semantic meaning.

By comparing these vectors, vector search can surface more relevant results for natural language queries like:

- “What is the refund policy for flight bookings?”

- “Tell me about eco-friendly hotels in Europe.”

Rather than only looking for keyword overlaps, vector search finds items similar in meaning — making it ideal for:

- Document search

- Recommendation engines

- Chatbot retrieval

- Knowledge mining

- Enterprise search systems
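To make the idea concrete, here is a minimal brute-force sketch of what a vector query does conceptually: score every stored vector against the query vector with cosine similarity and keep the top k. The three-dimensional vectors and document IDs are made up for illustration. Real embeddings have hundreds or thousands of dimensions, and Azure AI Search uses an approximate index (HNSW) rather than scanning every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exhaustive k-nearest-neighbor search: score every document, keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings" with hand-picked values (hypothetical, not from a real model).
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "cancellation-fees": [0.6, 0.5, 0.2],
    "eco-hotels": [0.0, 0.2, 0.95],
}
query = [0.85, 0.2, 0.05]  # pretend this encodes "How do I get my money back?"

for doc_id, score in top_k(query, docs):
    print(f"{doc_id}: {score:.3f}")
```

The eco-hotels document never makes the cut because its vector points in a different direction, which is exactly the "similar in meaning" behavior described above.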

Why Azure AI Search for Vector Search?

Azure AI Search (formerly Azure Cognitive Search) is Microsoft's cloud-native search service, with capabilities such as full-text search, filtering, AI enrichment, and, more recently, native vector search support.

With Azure AI Search, you can now:

- Ingest documents from various sources

- Generate embeddings using Azure OpenAI or custom models

- Store vectors in an index

- Combine vector search with keyword search in a single request (hybrid retrieval)

- Scale search with enterprise-grade performance and security

Whether you're building an intelligent FAQ, chatbot backend, or internal enterprise search tool, Azure AI Search provides a robust, secure, and scalable solution.

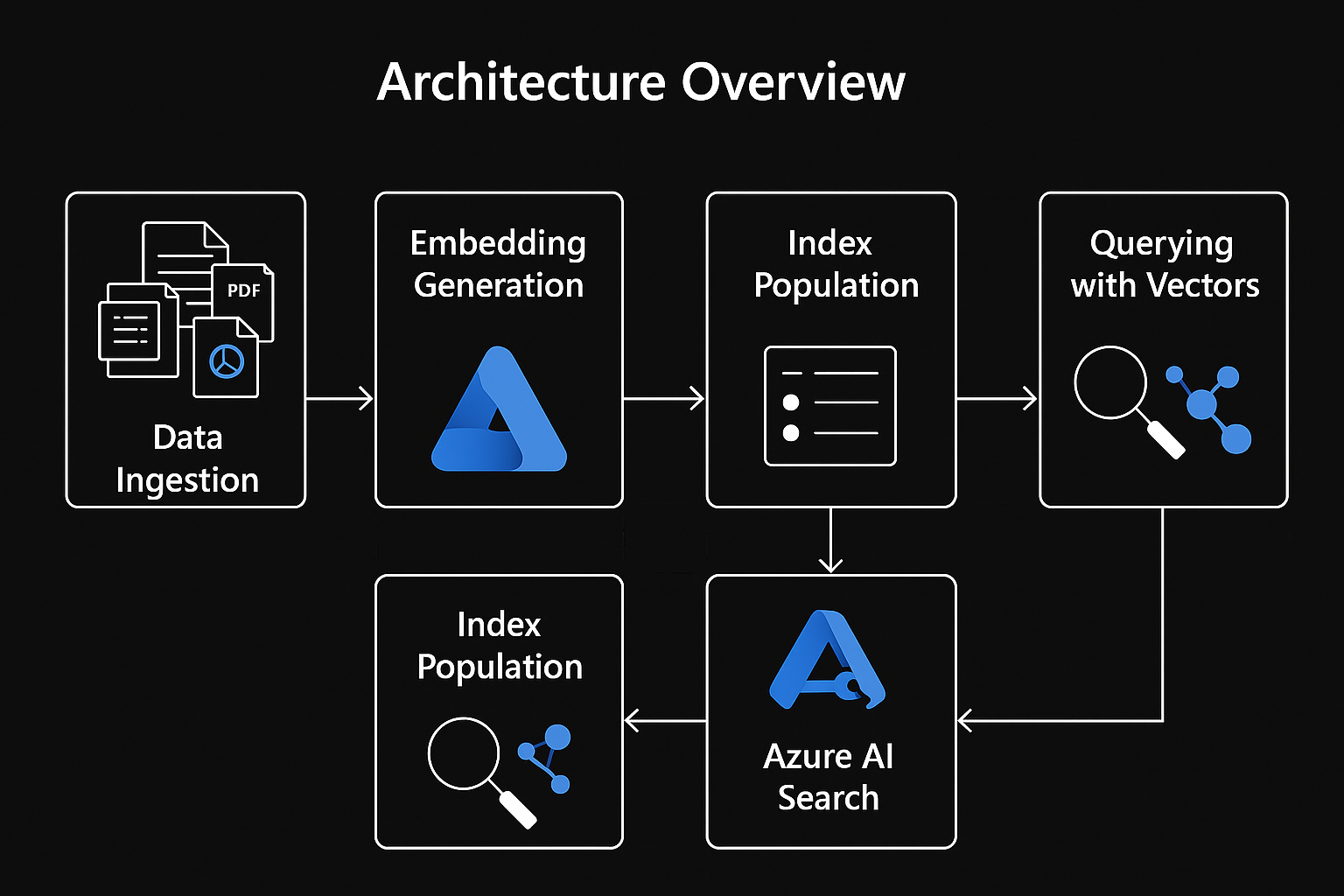

Architecture Overview

Here’s a high-level architecture for implementing vector search in Azure AI Search:

- Data Ingestion: Upload documents, PDFs, webpages, etc., into Azure Blob Storage, Cosmos DB, SQL Database, or any other data source supported by Azure AI Search indexers.

- Embedding Generation: Use Azure OpenAI Service (e.g., text-embedding-ada-002) or integrate your own model to convert documents and queries into vectors.

- Index Creation: Define a search index with both searchable text fields (for keyword search) and vector fields (for similarity search).

- Index Population: Push your data (including vectors) into the Azure AI Search index.

- Querying with Vectors: Convert user queries to vectors with the same embedding model and run a vector search, optionally combined with keyword search (hybrid search).

- Result Ranking & Display: Fetch relevant results, apply filters and ranking, and show them in your application.

Step-by-Step Guide to Implement Vector Search with Azure AI Search

Step 1: Set Up Azure AI Search Service

- Go to the Azure Portal.

- Create a new Azure AI Search resource.

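- Choose a pricing tier. Vector search is available on all tiers, but the vector index size quota varies by tier and by when the service was created, so pick a tier and partition count that fit your vectors (for example, Standard S1 or higher for production workloads).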

- Enable AI enrichment if needed.

Step 2: Prepare Your Data

Let’s say you have customer support documents (FAQs, manuals, etc.).

- Store them in Azure Blob Storage or ingest through indexers from other sources like Azure SQL, Cosmos DB, or SharePoint.

- Clean and structure your data — use fields like title, content, category, timestamp.

Example JSON document:

```json
{
  "id": "faq001",
  "title": "Refund Policy",
  "content": "You can request a refund within 14 days of purchase...",
  "category": "Payments",
  "timestamp": "2025-04-01T08:00:00Z"
}
```
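If you store `timestamp` in an `Edm.DateTimeOffset` field, the value must be an ISO 8601 string like the one in the example. A minimal sketch of producing that format from Python's `datetime`, assuming your source timestamps are timezone-aware; the helper name `to_search_timestamp` is hypothetical:

```python
from datetime import datetime, timezone


def to_search_timestamp(dt: datetime) -> str:
    """Format a timezone-aware datetime as UTC ISO 8601 with a trailing 'Z'."""
    if dt.tzinfo is None:
        # A naive datetime is ambiguous, so refuse it rather than guess a zone.
        raise ValueError("timestamp must be timezone-aware")
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


doc = {
    "id": "faq001",
    "title": "Refund Policy",
    "content": "You can request a refund within 14 days of purchase...",
    "category": "Payments",
    "timestamp": to_search_timestamp(datetime(2025, 4, 1, 8, 0, tzinfo=timezone.utc)),
}
print(doc["timestamp"])  # 2025-04-01T08:00:00Z
```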

Step 3: Generate Embeddings Using Azure OpenAI

To turn documents and queries into vectors, use Azure OpenAI’s text-embedding-ada-002 or similar model.

Example using Python and the `openai` package's `AzureOpenAI` client:

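```python
import os

from openai import AzureOpenAI

# Key and endpoint come from your Azure OpenAI resource.
client = AzureOpenAI(
    api_key=os.getenv("AZURE_OPENAI_KEY"),
    api_version="2024-06-01",  # a GA Azure OpenAI API version
    azure_endpoint="https://<your-endpoint>.openai.azure.com/",
)


def generate_embedding(text):
    """Return the embedding vector (a list of floats) for one piece of text."""
    response = client.embeddings.create(
        # On Azure OpenAI, `model` is your deployment name; this assumes
        # the deployment is named after the model.
        model="text-embedding-ada-002",
        input=[text],
    )
    return response.data[0].embedding
```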

Call this function on each document's content and store the resulting vector; text-embedding-ada-002 always returns 1,536 dimensions.
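Calling the API once per document is slow for a large corpus. The embeddings endpoint also accepts a list of inputs, so a common pattern is to embed in batches. A minimal sketch, assuming `client` is the `AzureOpenAI` client created above; the helper names and the batch size of 16 are assumptions, so check the input limits of your deployment:

```python
from typing import Iterator, Sequence


def batched(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def embed_all(client, deployment: str, texts: Sequence[str], batch_size: int = 16) -> list[list[float]]:
    """Embed many texts with one request per batch; output order matches `texts`."""
    vectors: list[list[float]] = []
    for batch in batched(texts, batch_size):
        response = client.embeddings.create(model=deployment, input=list(batch))
        # Sort by each item's index so the vectors line up with the inputs.
        ordered = sorted(response.data, key=lambda item: item.index)
        vectors.extend(item.embedding for item in ordered)
    return vectors
```

Usage: `vectors = embed_all(client, "text-embedding-ada-002", [d["content"] for d in documents])`, where `documents` is your own list of document dicts.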

Step 4: Define a Vector-Aware Search Index

Use Azure’s REST API, .NET SDK, or Python SDK to define a new index.

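Example vector-aware index schema (REST API version 2023-11-01 or later, where a vector field points to a vector search profile, and the profile names an algorithm configuration):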

```json
{
  "name": "faqs-index",
  "fields": [
    { "name": "id", "type": "Edm.String", "key": true },
    { "name": "title", "type": "Edm.String", "searchable": true },
    { "name": "content", "type": "Edm.String", "searchable": true },
    { "name": "category", "type": "Edm.String", "filterable": true },
    { "name": "timestamp", "type": "Edm.DateTimeOffset", "filterable": true, "sortable": true },
    {
      "name": "contentVector",
      "type": "Collection(Edm.Single)",
      "searchable": true,
      "dimensions": 1536,
      "vectorSearchProfile": "default-profile"
    }
  ],
  "vectorSearch": {
    "algorithms": [
      {
        "name": "default-hnsw",
        "kind": "hnsw",
        "hnswParameters": {
          "m": 4,
          "efConstruction": 400,
          "efSearch": 500,
          "metric": "cosine"
        }
      }
    ],
    "profiles": [
      { "name": "default-profile", "algorithm": "default-hnsw" }
    ]
  }
}
```
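One way to send this schema is a `PUT` to the index's REST endpoint. A minimal sketch using only the Python standard library, assuming the schema is loaded into a dict, your service URL and admin key live in the hypothetical environment variables `AZURE_SEARCH_ENDPOINT` and `AZURE_SEARCH_ADMIN_KEY`, and you target API version `2024-07-01`:

```python
import json
import os
import urllib.request

API_VERSION = "2024-07-01"  # a GA Azure AI Search REST API version


def build_create_index_request(endpoint: str, api_key: str, schema: dict) -> urllib.request.Request:
    """Prepare a create-or-update request for the index named in `schema`."""
    url = f"{endpoint.rstrip('/')}/indexes/{schema['name']}?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(schema).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="PUT",
    )


def create_index(schema: dict) -> int:
    """Send the request and return the HTTP status code (2xx on success)."""
    request = build_create_index_request(
        os.environ["AZURE_SEARCH_ENDPOINT"],  # e.g. https://<service>.search.windows.net
        os.environ["AZURE_SEARCH_ADMIN_KEY"],
        schema,
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

Building the request separately from sending it keeps the URL and headers easy to inspect; the Python SDK (`azure-search-documents`) is an alternative if you prefer typed models.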

Step 5: Push Documents with Vectors to the Index

Once your index is created, upload the documents, including their vectors. The `contentVector` array below is truncated; a real one holds 1,536 floats.

```json
{
  "value": [
    {
      "@search.action": "upload",
      "id": "faq001",
      "title": "Refund Policy",
      "content": "You can request a refund...",
      "category": "Payments",
      "contentVector": [0.005, 0.23, ..., 0.12]
    }
  ]
}
```

Send this body to `POST /indexes/faqs-index/docs/index` with the Azure AI Search REST API, or use one of the SDKs, uploading documents in batches.
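A single indexing request accepts at most 1,000 documents and a 16 MB payload. Vectors make documents large: 1,536 floats serialized as JSON can take tens of kilobytes, so a full 1,000-document batch may exceed the size limit. A minimal sketch of building upload bodies, assuming documents are dicts shaped like the example above; the function name and the default batch size of 100 are assumptions to tune for your data:

```python
from typing import Iterator


def upload_batches(docs: list[dict], batch_size: int = 100) -> Iterator[dict]:
    """Yield request bodies for the documents index API, one per batch."""
    if not 1 <= batch_size <= 1000:
        raise ValueError("batch_size must be between 1 and 1000")
    for start in range(0, len(docs), batch_size):
        chunk = docs[start:start + batch_size]
        # "upload" inserts a document, or replaces it if the key already exists.
        yield {"value": [{"@search.action": "upload", **doc} for doc in chunk]}
```

Each yielded body is sent as its own `POST`; check the per-document status in each response, since a batch can partially succeed.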

Step 6: Query the Index with Vector Search

Convert the user's query to a vector with the same embedding model used for indexing, then send it as a vector query.

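Example request body for `POST /indexes/faqs-index/docs/search` (REST API 2023-11-01 or later; the vector is truncated here):

```json
{
  "vectorQueries": [
    {
      "kind": "vector",
      "vector": [0.004, 0.12, ..., 0.15],
      "fields": "contentVector",
      "k": 5
    }
  ]
}
```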

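Optionally, add a `search` parameter to combine this with keyword search. The service runs both queries and merges their results into a single ranking (hybrid retrieval).

Hybrid search example:

```json
{
  "search": "refund",
  "vectorQueries": [
    {
      "kind": "vector",
      "vector": [...],
      "fields": "contentVector",
      "k": 5
    }
  ],
  "select": "id, title, content"
}
```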
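A small helper can build both pure vector and hybrid request bodies. A minimal sketch, assuming the `vectorQueries` syntax of REST API version 2023-11-01 and later and the field names of this example index; the function name is hypothetical:

```python
def build_search_body(query_vector, text=None, k=5, select="id, title, content"):
    """Return a search request body: pure vector search, or hybrid if `text` is given."""
    body = {
        "vectorQueries": [
            {"kind": "vector", "vector": list(query_vector), "fields": "contentVector", "k": k}
        ],
        "select": select,
    }
    if text:
        body["search"] = text  # adding keyword text turns this into a hybrid query
    return body
```

Usage: `body = build_search_body(generate_embedding("How do I get a refund?"), text="refund")`, then `POST` the body as JSON to the index's `docs/search` endpoint.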

Best Practices for Vector Search in Azure

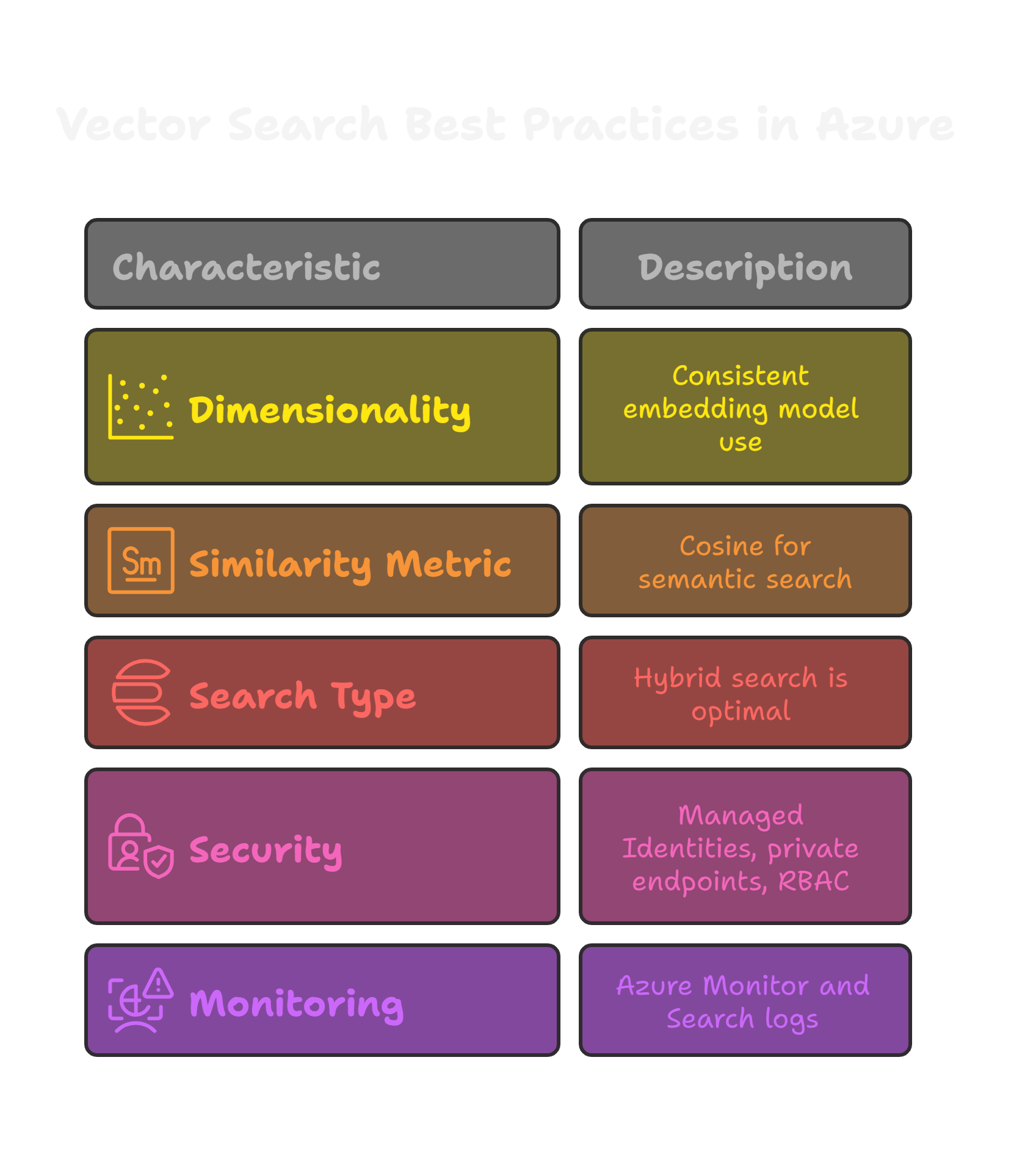

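Use Embeddings Consistently

Use the same embedding model (and model version) for indexing and querying. The query vector's length must equal the field's `dimensions`, and vectors from different models are not comparable even when their lengths happen to match.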
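A cheap safeguard is to check each vector's length against the index field before uploading or querying. A minimal sketch; `EXPECTED_DIMENSIONS` mirrors the `dimensions` value in the example schema, and the helper name is hypothetical:

```python
EXPECTED_DIMENSIONS = 1536  # must equal "dimensions" on the contentVector field


def check_vector(vector: list[float], expected: int = EXPECTED_DIMENSIONS) -> list[float]:
    """Raise early if a vector does not match the index field's dimensions."""
    if len(vector) != expected:
        raise ValueError(f"expected {expected} dimensions, got {len(vector)}")
    return vector
```

A length check only catches a model mix-up when the two models' dimensions differ, so it is also worth recording which model produced each index.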

Choose the Right Similarity Metric

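Azure AI Search supports `cosine`, `dotProduct`, `euclidean`, and (for binary vectors) `hamming`. Match the metric to your embedding model: for Azure OpenAI embeddings, which are normalized to unit length, `cosine` is the usual choice, and `dotProduct` yields the same ranking.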
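Why does the metric matter less for normalized embeddings (OpenAI's embedding models return unit-length vectors)? For unit vectors, the dot product equals the cosine, and the squared Euclidean distance equals 2 - 2 * cosine, so all three metrics rank results identically. A small numeric check of that identity, with arbitrary example vectors:

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: list[float], b: list[float]) -> float:
    return dot(a, b) / math.sqrt(dot(a, a) * dot(b, b))


def squared_euclidean(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


a = normalize([3.0, 4.0, 0.0])
b = normalize([1.0, 2.0, 2.0])

print(f"cosine           = {cosine(a, b):.4f}")
print(f"dot product      = {dot(a, b):.4f}")                # same as cosine
print(f"2 - 2 * cosine   = {2 - 2 * cosine(a, b):.4f}")
print(f"squared distance = {squared_euclidean(a, b):.4f}")  # same as 2 - 2 * cosine
```

If your model does not normalize its output, the metrics can disagree, and you should follow the model provider's recommendation.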

Hybrid Search Works Best

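Combining keyword and vector search often gives the best relevance, especially in enterprise search, where queries mix natural language with exact terms such as product codes, names, or error numbers that embeddings may not capture well.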
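Azure AI Search merges the keyword and vector result lists with Reciprocal Rank Fusion (RRF): each document scores the sum of 1 / (k + rank) over the lists it appears in, so items ranked well by both methods rise to the top. A minimal sketch of the textbook formula with the conventional constant k = 60; the document IDs are made up, and this illustrates the idea rather than reproducing the service's exact scoring:

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs (best first) into one ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


keyword_results = ["faq007", "faq001", "faq042"]  # e.g. BM25 order for "refund"
vector_results = ["faq001", "faq013", "faq007"]   # e.g. nearest neighbors of the query vector

for doc_id, score in reciprocal_rank_fusion([keyword_results, vector_results]):
    print(doc_id, round(score, 5))
```

Here faq001 wins even though keyword search ranked it second, because both methods placed it near the top.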

Secure Access

Use Azure Managed Identities, private endpoints, and RBAC policies to protect your search service.

Monitor & Optimize

Use Azure Monitor metrics and diagnostic logs to track query latency, throughput, and throttling, and evaluate ranking quality separately with a set of representative test queries.

Use Cases of Vector Search with Azure AI Search

Enterprise Knowledge Base

Employees can ask questions like “What’s the leave policy?” and get the relevant policy documents, even when the policy wording differs from the question.

LLM-Powered Chatbots

Vector search is the backbone of Retrieval-Augmented Generation (RAG), where GPT-based agents pull real-world context from a vector index to ground their answers.
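In a RAG pipeline, the retrieved documents are placed into the model's prompt as context. A minimal sketch of that step, assuming each search result is a dict with the `title` and `content` fields selected in the query examples; the prompt wording and function name are illustrative, not a prescribed format:

```python
def build_grounded_prompt(question: str, results: list[dict], max_docs: int = 3) -> str:
    """Combine the top search results and the user's question into one prompt."""
    sources = "\n\n".join(
        f"[{i}] {doc['title']}\n{doc['content']}"
        for i, doc in enumerate(results[:max_docs], start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number, and say so if the answer is not in them.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )
```

Numbering the sources lets the model cite them, which makes answers easier to verify against the index.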

Legal & Compliance Search

Search through large legal documents, cases, and policies using semantic meaning — not just keywords.

Semantic Product Search

Power smart e-commerce search: users can ask “Show me affordable laptops good for travel” and see laptops with relevant specs, even if the product descriptions never use those words.

Conclusion

As we move toward AI-native applications, keyword search alone is often not enough. Vector search is the key to unlocking contextual, semantic, and intelligent search experiences.

With Azure AI Search, implementing vector search becomes accessible and scalable. Whether you’re enabling internal knowledge discovery, powering LLM chatbots, or rethinking product search, vector capabilities in Azure AI Search offer a powerful path forward.

FAQs

1. How can I combine semantic vector search with keyword-based ranking in Azure AI Search?

Store both text fields and vector fields in your index, then send a single query that includes both a `search` string and a `vectorQueries` clause. Azure AI Search runs the keyword and vector queries and merges the results with Reciprocal Rank Fusion (RRF). To rerank the merged results further, you can enable semantic ranker by adding `"queryType": "semantic"` and a `semanticConfiguration` to the request.

2. Which embedding model should I use for multilingual vector search on Azure?

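For multilingual use cases, consider Azure OpenAI's text-embedding-3 models (text-embedding-3-small or text-embedding-3-large), which OpenAI reports perform substantially better than text-embedding-ada-002 on multilingual retrieval benchmarks, or integrate an external multilingual embedding model. Check model availability in your region, and make sure the vector length matches the `dimensions` in your index schema: text-embedding-3-large returns 3,072 dimensions by default, and both text-embedding-3 models accept a `dimensions` parameter to shorten their output.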
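A minimal sketch for keeping model output and index schema in sync. The default sizes listed are those OpenAI documents for these models; the helper name is hypothetical, and it relies on the `dimensions` request parameter, which the text-embedding-3 models accept and text-embedding-ada-002 does not:

```python
# Default output sizes of common Azure OpenAI embedding models.
DEFAULT_DIMENSIONS = {
    "text-embedding-ada-002": 1536,
    "text-embedding-3-small": 1536,
    "text-embedding-3-large": 3072,
}


def embedding_kwargs(model: str, deployment: str, index_dimensions: int) -> dict:
    """Build keyword arguments for client.embeddings.create() that match the index."""
    default = DEFAULT_DIMENSIONS[model]
    if index_dimensions == default:
        return {"model": deployment}  # Azure OpenAI expects the deployment name here
    if model.startswith("text-embedding-3") and index_dimensions < default:
        # text-embedding-3 models can return shortened vectors on request.
        return {"model": deployment, "dimensions": index_dimensions}
    raise ValueError(f"{model} cannot produce {index_dimensions}-dimensional vectors")
```

Usage: `client.embeddings.create(input=["..."], **embedding_kwargs("text-embedding-3-large", "my-3-large", 1536))`, where `my-3-large` stands in for your own deployment name.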

3. How can I secure vector data and embeddings in Azure AI Search?

Use role-based access control (RBAC), private endpoints, and managed identities to secure data pipelines. Azure AI Search encrypts stored data, including vectors, at rest by default; for extra control, add customer-managed keys held in Azure Key Vault. Keep API keys and other secrets for Azure OpenAI or custom embedding APIs in Key Vault as well.

4. What are the performance benchmarks for vector search in Azure AI Search?

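Capacity is not a fixed vector count per index. It is governed by a vector index size quota per partition, which depends on the pricing tier and on when the service was created, so check the current service limits documentation. Query latency depends on vector dimensions, HNSW parameters (such as `m` and `efSearch`), filters, and load, so benchmark with your own data. Scale storage by adding partitions and query throughput by adding replicas.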
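For capacity planning, a rough lower bound on vector storage is documents × dimensions × bytes per value (4 bytes for `Edm.Single`). A minimal sketch of that arithmetic; it ignores the HNSW graph and other overhead, so treat the result as a floor to compare against your tier's vector index quota:

```python
BYTES_PER_SINGLE = 4  # Edm.Single is a 32-bit float


def raw_vector_bytes(num_vectors: int, dimensions: int, bytes_per_value: int = BYTES_PER_SINGLE) -> int:
    """Raw size of the vector data alone, excluding index overhead."""
    return num_vectors * dimensions * bytes_per_value


size = raw_vector_bytes(1_000_000, 1536)
print(f"{size:,} bytes ≈ {size / 2**30:.2f} GiB")  # 6,144,000,000 bytes ≈ 5.72 GiB
```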

5. Can I integrate Azure AI Search vector capabilities with my chatbot or Copilot app?

Yes. You can use Azure AI Search as a grounding source for Retrieval-Augmented Generation (RAG) pipelines. By integrating with Azure OpenAI or Bot Framework Composer, you can feed relevant, vector-ranked documents into your chatbot or Copilot for more accurate, context-aware responses.