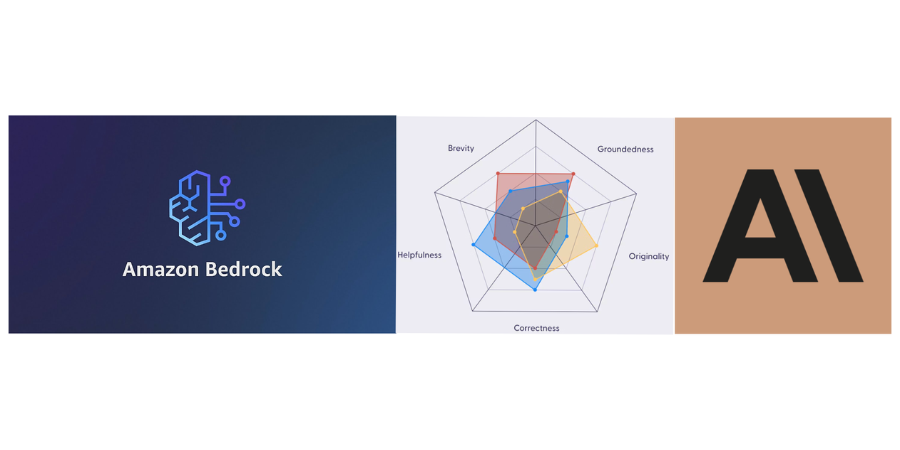

Natural Language Engine with AWS Bedrock, Anthropic Claude 2 & Langchain

In this blog post, I describe how we built a natural language engine for a client using AWS Bedrock, Anthropic Claude 2, and LangChain. AWS Bedrock provides managed, scalable, and secure access to foundation models in the cloud. Anthropic Claude 2 brings strong natural language understanding, which lets applications hold nuanced, human-like dialogue. LangChain is the framework that ties the two together: it lets us use Claude 2 within Bedrock’s secure environment to build capable conversational agents.

Deep Dive into AWS Bedrock

AWS Bedrock is a fully managed service that simplifies the development of generative AI applications while ensuring privacy and security. It provides access to high-performing foundation models (FMs) from leading AI companies, including AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon. With AWS Bedrock, users can experiment with various FMs, customize them with their own data, and create managed agents for complex business tasks, much of it through the console without writing code.

Why Should I Use Bedrock?

Amazon Bedrock offers a unique set of features and benefits that make it a compelling choice for those looking to harness the power of generative AI. Here’s why you should consider using Amazon Bedrock:

- Diverse Foundation Model Selection: Bedrock provides a streamlined developer interface to engage with a vast array of top-tier Foundation Models (FMs) from not only Amazon but also renowned AI entities such as AI21 Labs, Anthropic, Cohere, Meta, and Stability AI. This allows for swift experimentation in the playground and the convenience of a unified API for inference. This design ensures adaptability with models from various sources and facilitates seamless updates to the latest model iterations with minimal code adjustments.

- Effortless Model Personalization: Bedrock empowers you to tailor FMs with your proprietary data through an intuitive visual platform, eliminating the need for coding. Simply designate the training and validation datasets located in Amazon S3, and if necessary, tweak the hyperparameters to optimize model efficacy.

- Autonomous Agents with Dynamic API Invocation: Construct agents capable of managing intricate business operations, ranging from travel arrangements and insurance claim processing to ad campaign creation, tax preparation, and inventory management. These agents, backed by Bedrock, enhance the analytical prowess of FMs by segmenting tasks, formulating an orchestration strategy, and implementing it.

- Inherent Retrieval Augmentation with RAG: Bedrock’s Knowledge Bases allow for a secure integration of FMs with your data repositories, offering retrieval augmentation directly within the managed service. This not only amplifies the inherent capabilities of the FMs but also enriches them with domain-specific knowledge pertinent to your organization.

- Upholding Data Integrity and Regulatory Compliance: Bedrock prioritizes data security. It is HIPAA eligible and can be used in compliance with GDPR. Your content is not used to improve the base models, and no data is shared with third-party model providers. All data within Bedrock is encrypted both in transit and at rest, with the option to use your own encryption keys. For enhanced security, AWS PrivateLink can be used with Bedrock to establish private connectivity between your FMs and your Amazon VPC, keeping your traffic off the public internet.

- Scalability and Flexibility: As businesses grow and evolve, so do their data needs. Bedrock is designed to scale with your requirements, ensuring that as your data volume or complexity increases, the platform can handle it without compromising performance.

- Integrated Ecosystem: Bedrock seamlessly integrates with other AWS services, ensuring that you can build comprehensive solutions that leverage the broader AWS ecosystem. This integration ensures that data flow, processing, and storage are cohesive and efficient across the board.

- Availability: As of October 10, 2023, AWS Bedrock has expanded its reach and is now available in three global regions: US East (N. Virginia), US West (Oregon), and Asia Pacific (Tokyo).
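To make the "unified API for inference" point concrete, here is a minimal sketch of calling Claude 2 through the Bedrock runtime. It assumes the `invoke_model` operation of a boto3 `bedrock-runtime` client and Claude 2's text-completion request format. The helper names `build_claude_body` and `ask_claude` are hypothetical, and the client is passed in so the sketch can be tried without AWS credentials.

```python
import json

CLAUDE_MODEL_ID = "anthropic.claude-v2"  # Bedrock model ID for Claude 2


def build_claude_body(question: str, max_tokens: int = 300) -> str:
    """Return the JSON request body in Claude 2's text-completion format."""
    return json.dumps({
        # Claude 2 expects alternating Human/Assistant turns in the prompt.
        "prompt": f"\n\nHuman: {question}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.0,
    })


def ask_claude(client, question: str) -> str:
    """Send one question through a Bedrock runtime client and return the text."""
    response = client.invoke_model(
        modelId=CLAUDE_MODEL_ID,
        body=build_claude_body(question),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing a JSON document.
    payload = json.loads(response["body"].read())
    return payload["completion"].strip()
```

With credentials and model access in place, the client would typically be created with `boto3.client("bedrock-runtime", region_name="us-east-1")`. Switching to another model is then mostly a matter of changing the model ID and the request body format.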

Meet Amazon Titan

Exclusive to Amazon Bedrock, the Amazon Titan foundation models (FMs) provide customers with a breadth of high-performing image, multimodal, and text model choices, via a fully managed API. Amazon Titan models are created by AWS and pretrained on large datasets, making them powerful, general-purpose models built to support a variety of use cases, while also supporting the responsible use of AI. Use them as is or privately customize them with your own data.

Amazon Titan Embeddings is a text embeddings model that converts natural language text, including single words, phrases, or even large documents, into numerical representations that can be used to power use cases such as search, personalization, and clustering based on semantic similarity. It is optimized for text retrieval to enable Retrieval Augmented Generation (RAG) use cases. You first convert your text data into numerical representations, or vectors, and then use those vectors to search a vector database for relevant passages. This lets you make the most of your proprietary data in combination with other foundation models (FMs).
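The embed-then-search idea can be sketched as follows. This is an illustration, not our production code: it assumes the `amazon.titan-embed-text-v1` model ID and its `inputText` / `embedding` JSON fields, takes the Bedrock runtime client as a parameter, and uses a plain in-memory dictionary in place of a vector database. The helper names are hypothetical.

```python
import json
import math

TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v1"


def embed(client, text: str) -> list[float]:
    """Turn text into a vector using Titan Embeddings via a Bedrock runtime client."""
    response = client.invoke_model(
        modelId=TITAN_EMBED_MODEL_ID,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["embedding"]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query_vec: list[float],
                 passages: dict[str, list[float]],
                 k: int = 1) -> list[str]:
    """Return the keys of the k stored vectors closest to the query vector."""
    ranked = sorted(
        passages,
        key=lambda name: cosine_similarity(query_vec, passages[name]),
        reverse=True,
    )
    return ranked[:k]
```

In a RAG setup, the passages returned by the search step are placed into the prompt sent to the language model, so the answer is grounded in your own data.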

Meet Anthropic Claude

Claude is a family of large language models developed by Anthropic and designed to revolutionize the way you interact with AI. Claude excels at a wide variety of tasks involving language, reasoning, analysis, coding, and more. Claude models are highly capable, easy to use, and can be customized to suit your needs.

By integrating Claude 2 with Bedrock, developers can use a state-of-the-art language model while relying on the security and performance that AWS provides. The combination raises the bar for what conversational AI can achieve.

Meet LangChain Agents

At its core, LangChain utilizes a series of tools and chains to dissect and respond to queries. Think of it as a well-oiled assembly line where each component has a specific task, whether it be understanding intent, retrieving information, or crafting responses. This modular setup allows for flexibility and scalability, ensuring that conversational agents can adapt to a wide range of scenarios and industries.

In LangChain, an agent uses a language model to decide which actions to take and in what order. The actions themselves are carried out by tools, each with a particular capability. A tool can be simple, like a weather lookup, or complex, like a flight-booking tool that understands dates, airports, and pricing.

The AgentExecutor is the runtime that drives the agent. It calls the agent to decide on the next action, runs the chosen tool, passes the result back to the agent, and repeats until the agent produces a final answer. This loop is crucial for maintaining the flow of conversation and ensuring that responses are contextually relevant and timely.

ReAct Prompting: A Method for Interactive AI

ReAct (short for Reasoning and Acting) prompting improves the interactivity of AI systems by interleaving the model’s reasoning with actions it takes in the outside world. It’s a cyclical process that involves:

- Thought: The AI reasons about the user’s input and decides what to do next, drawing from its understanding of the query.

- Action: The AI takes an action, which could be a database query, a computation, or another tool call.

- Observation: The AI observes the outcome of its action, gauging its effectiveness.

- Repeat: The AI contemplates the result in a new Thought, refining its approach and taking further actions if necessary.

- Final Answer: Finally, the AI presents its answer or a follow-up question to the user, aiming for clarity and completion.

This method ensures that the AI is not just passively responding but actively engaging in the conversation, much like a human would. By incorporating ReAct prompting, LangChain agents are not just answering questions; they’re participating in an ongoing dialogue that can lead to more insightful and productive interactions.
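The cycle described above can be sketched in plain Python. This is a simplified illustration and not LangChain’s implementation: the text format (`Action:`, `Action Input:`, `Final Answer:`), the `react_loop` function, the scripted stand-in for the language model, and the `inventory` tool are all assumptions made for the example.

```python
import re
from typing import Callable

ACTION_RE = re.compile(r"Action:\s*(\w+)\s*\nAction Input:\s*(.*)")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)", re.DOTALL)


def react_loop(llm: Callable[[str], str],
               tools: dict[str, Callable[[str], str]],
               question: str,
               max_steps: int = 5) -> str:
    """Alternate model output and tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        output = llm(transcript)              # Thought plus Action or Final Answer
        transcript += output + "\n"
        final = FINAL_RE.search(output)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(output)
        if action is None:
            return "Could not parse the model output."
        name, tool_input = action.group(1), action.group(2).strip()
        tool = tools.get(name)
        observation = tool(tool_input) if tool else f"Unknown tool: {name}"
        transcript += f"Observation: {observation}\n"   # fed back to the model
    return "Stopped: step limit reached."


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: act first, then answer once it has an observation."""
    if "Observation:" not in transcript:
        return "Thought: I need the stock level.\nAction: inventory\nAction Input: widget"
    return "Thought: I have what I need.\nFinal Answer: There are 12 widgets in stock."


tools = {"inventory": lambda item: "12 units"}
answer = react_loop(scripted_llm, tools, "How many widgets are in stock?")
print(answer)  # There are 12 widgets in stock.
```

The step limit matters in practice: without it, a model that never emits a final answer would keep calling tools indefinitely.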

Enhancing Conversational AI with AWS OpenSearch Vector DB

In the context of AI, OpenSearch can be used to quickly search through vast amounts of conversational data, enabling agents to retrieve relevant information and learn from past interactions. Its scalable nature ensures that as the amount of data grows, OpenSearch can continue to deliver fast and precise search results.

OpenSearch’s vector database capability takes search a step further by moving beyond simple keyword matching. It uses vectors to capture the context and semantic meaning within the data. This approach is based on the concept of embeddings, where words, phrases, or whole passages are mapped to vectors of real numbers, so that texts with similar meaning end up close to each other.

Incorporating vector search into an AI application can significantly enhance the agent’s ability to understand and process natural language. With semantic search, the agent can retrieve passages that match the meaning of a user query rather than its exact wording, and provide responses that are contextually and semantically aligned with it.
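As a rough sketch of what this looks like on the OpenSearch side, the helpers below build the request bodies for a k-NN index and a k-NN query, using the k-NN plugin’s `knn_vector` field type. The field names `embedding` and `text` and the helper names are hypothetical. The default dimension of 1536 assumes vectors from Titan Embeddings G1 - Text.

```python
def knn_index_body(dimension: int = 1536, field: str = "embedding") -> dict:
    """Index definition with a vector field next to the original text."""
    return {
        "settings": {"index": {"knn": True}},  # enable k-NN search on this index
        "mappings": {
            "properties": {
                field: {"type": "knn_vector", "dimension": dimension},
                "text": {"type": "text"},
            }
        },
    }


def knn_query_body(query_vector: list[float], k: int = 3,
                   field: str = "embedding") -> dict:
    """Query asking for the k stored vectors nearest to the query vector."""
    return {
        "size": k,
        "query": {"knn": {field: {"vector": query_vector, "k": k}}},
        "_source": ["text"],  # return only the passage text, not the vectors
    }
```

With the `opensearch-py` client, these bodies would typically be passed to `client.indices.create(index=..., body=...)` and `client.search(index=..., body=...)`.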

High-level Flow of Conversational AI Agent

Copyright – Info Services

- The product-related knowledge base is indexed into OpenSearch in vector format using Titan Embeddings. This lets OpenSearch perform vector similarity search and enables the RAG implementation.

- Historical data is stored in S3 and queried with Athena, which performs complex aggregations and filtering to retrieve multiple data records.

- A GraphQL endpoint is configured with AppSync to answer questions about the current state of records in real time.

- LangChain agents are configured with the above tools and chained with the Claude 2.1 model, accessed as an API via AWS Bedrock.

- LangChain agents perform ReAct prompting and choose the appropriate tool to answer user questions.
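To give a feel for one of these tools, here is a hedged sketch of the historical-data lookup. It assumes the boto3 Athena client operations `start_query_execution`, `get_query_execution`, and `get_query_results`. The function name, polling defaults, and arguments are hypothetical, and only the first page of results is read.

```python
import time


def run_athena_query(client, sql: str, database: str, output_location: str,
                     poll_seconds: float = 1.0, max_polls: int = 30) -> list[dict]:
    """Run a SQL query on Athena and return the rows as dictionaries."""
    query_id = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]

    # Athena is asynchronous, so poll until the query reaches a terminal state.
    for _ in range(max_polls):
        status = client.get_query_execution(
            QueryExecutionId=query_id
        )["QueryExecution"]["Status"]
        if status["State"] == "SUCCEEDED":
            break
        if status["State"] in ("FAILED", "CANCELLED"):
            reason = status.get("StateChangeReason", "")
            raise RuntimeError(f"Athena query {status['State']}: {reason}")
        time.sleep(poll_seconds)
    else:
        raise TimeoutError("Athena query did not finish in time")

    rows = client.get_query_results(QueryExecutionId=query_id)["ResultSet"]["Rows"]
    if not rows:
        return []
    # For SELECT queries the first row holds the column names.
    header = [cell.get("VarCharValue", "") for cell in rows[0]["Data"]]
    return [
        dict(zip(header, (cell.get("VarCharValue") for cell in row["Data"])))
        for row in rows[1:]
    ]
```

A function like this can be wrapped as an agent tool, with a description that tells the model when historical aggregates are the right source for an answer.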

Conclusion

The AWS ecosystem, with services such as AppSync (GraphQL), S3, Athena, and OpenSearch with vector search, together with AWS Bedrock, forms a comprehensive infrastructure for developing and deploying sophisticated AI applications. Bedrock, in particular, offers a resilient and scalable platform that underpins the entire operation, providing the robustness and reliability that demanding AI workloads require.

Used together, these services cover managing data, executing complex queries, and performing semantic search, all of which are fundamental to the AI experience. Coupled with LangChain’s agent framework and the capabilities of large language models like Claude 2, they let us build AI agents that do more than respond: they draw on the right data source and answer in context.

References

- https://aws.amazon.com/bedrock/

- https://docs.aws.amazon.com/bedrock/latest/userguide/model-parameters-claude.html

- https://docs.anthropic.com/claude/docs/intro-to-claude

- https://aws.amazon.com/blogs/machine-learning/getting-started-with-amazon-titan-text-embeddings/

- https://python.langchain.com/docs/modules/agents/

Author: Raghavan Madabusi