Breaking Data Silos: Why Databricks Is the Go-To Migration Choice

Modern enterprises generate enormous amounts of data every day. However, legacy infrastructure—whether on-premise Hadoop clusters, traditional SQL servers, or outdated ETL systems—often prevents organizations from tapping into the full value of their data. In such a landscape, the unified data platform stands out as a solution that streamlines data engineering, advanced analytics, and machine learning workflows.

Migrating to this modern data workspace is not just about changing platforms—it's about unlocking performance, scalability, and cost efficiency. This blog will walk you through the complete picture of Lakehouse migration services, from why enterprises are making the switch to how you can make the transition smoothly and efficiently.

Why Migrate?

The analytics engine unifies the power of data lakes and data warehouses in one collaborative platform, leveraging open-source technologies such as Apache Spark and Delta Lake for flexibility and performance. Here’s why enterprises are choosing to migrate:

Unified Workflows

This intelligent environment brings ETL, real-time streaming, business intelligence, and machine learning into one workspace. This eliminates silos and allows teams to collaborate more effectively.

Cloud-Native Scalability

Being cloud-native, the Lakehouse solution can auto-scale based on workload demand. Whether it's a spike in traffic or a large model training job, the platform adapts without manual intervention.

Lower Costs

Organizations typically reduce infrastructure and licensing costs by up to 40%. With consumption-based pricing and autoscaling clusters, you pay only for what you use.

AI and ML Ready

With native MLflow integration, notebooks, and model serving, this data-first ecosystem is built for advanced analytics and machine learning out of the box.

Open Standards

The platform embraces open-source standards such as Delta Lake and Apache Spark, while offering seamless compatibility with orchestration and transformation tools like Apache Airflow and dbt.

Explore how our advanced data platform enables future-proof architecture on our Databricks Services page.

Core Components of a Migration

- Infrastructure Assessment A detailed evaluation of your current stack, including performance bottlenecks, high-cost operations, legacy dependencies, and security frameworks.

- Strategic Migration Planning A roadmap outlining phases, KPIs, success benchmarks, and rollback strategies to reduce risk.

- Data Transfer and Delta Lake Adoption Datasets from HDFS, RDBMS, or S3 buckets are migrated and structured into Delta Lake for scalability and ACID compliance.

- ETL Pipeline Modernization Legacy workflows are replaced using the platform’s native Spark engine and Delta Live Tables, improving speed, reliability, and observability.

- System Integration Seamless connection to data lakes, data warehouses, APIs, and BI tools like Power BI and Tableau.

- Security and Governance Setup Role-based access, identity provider integration (Azure AD, Okta), and governance using Unity Catalog.

- Optimization and Knowledge Transfer Post-migration performance testing, cluster tuning, and team enablement to ensure adoption.

Real-World Results

A global media and entertainment leader recently migrated their fragmented on-prem Hadoop workloads to this scalable environment with our help. Their goals included improving pipeline speed, reducing cloud costs, and enabling machine learning at scale.

Key Outcomes:

- 40% reduction in infrastructure and orchestration costs

- Unified and simplified ETL pipelines

- Reduced operational friction and downtime

This case study highlights how migration dramatically simplified operations and increased agility.

Common Migration Scenarios

- From Hadoop to the Lakehouse A modern, scalable alternative that reduces overhead and improves data processing.

- From On-Prem SQL and ETL Tools Migrate from SSIS, Informatica, or SQL Server to modern, automated pipelines built on a collaborative analytics platform.

- Cloud Data Warehouse to Unified Platform Eliminate disconnected workflows and empower teams to collaborate seamlessly.

Benefits Beyond Cost Savings

- Increased Agility: Develop and deploy data products in days instead of weeks.

- Higher Data Reliability: ACID-compliant pipelines with schema evolution and time travel.

- Seamless Collaboration: Unified workspace for data scientists, analysts, and engineers.

- Stronger Governance: Centralized control with Unity Catalog integration.

Best Practices for a Smooth Migration

- Start with a pilot

- Automate with tools like Terraform and Airflow

- Leverage parallelism for performance

- Monitor costs and job metrics

- Train cross-functional teams

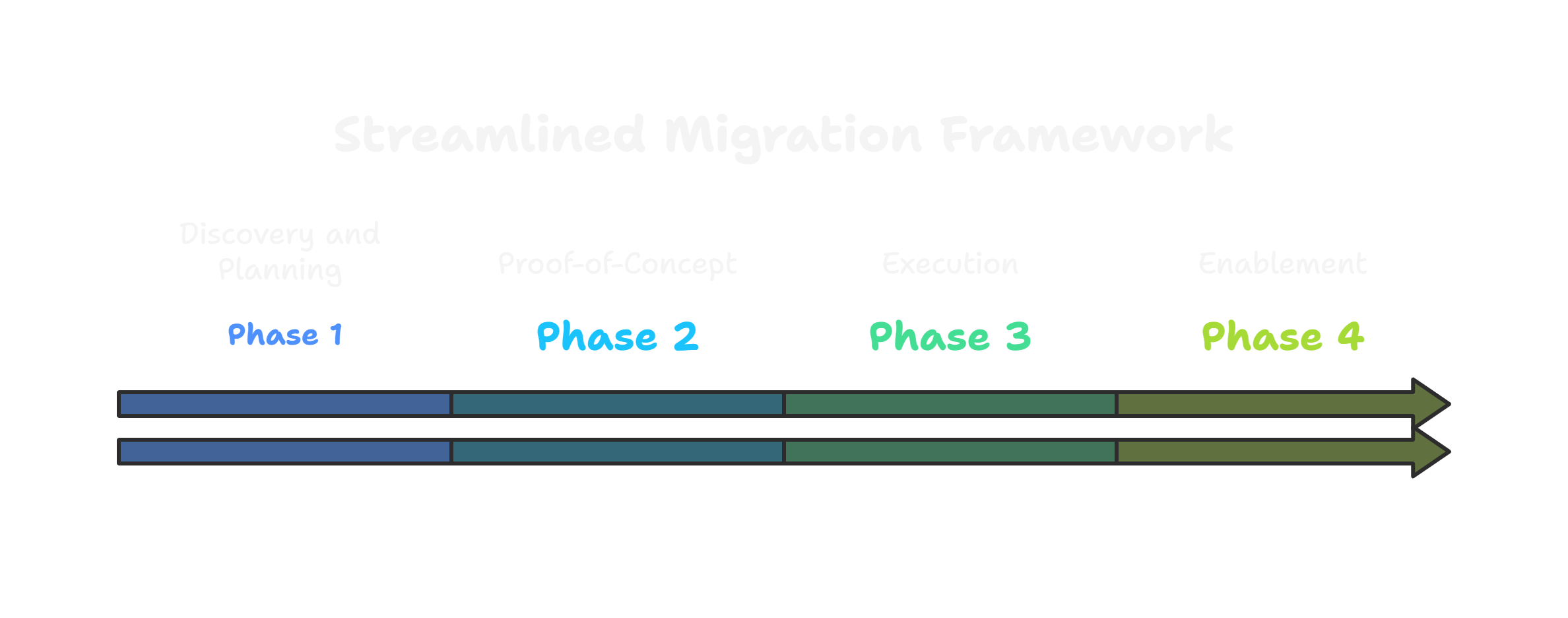

Our Migration Framework

Phase 1: Discovery and Planning Architecture review, cost modeling, and roadmap development

Phase 2: Proof-of-Concept Pilot use case and performance validation

Phase 3: Execution Data transfer, Delta Lake conversion, and pipeline re-engineering

Phase 4: Enablement Training, optimization, and governance implementation

We also offer managed services for ongoing improvement.

Metrics That Matter

Organizations that migrate with our framework typically see:

- 2–3x performance improvement

- Up to 40% cost savings

- 50–60% fewer pipeline failures

- Real-time dashboards and ML model deployments

Ready to Start?

This next-gen data platform isn’t just another tool—it’s a leap toward intelligent operations, faster insights, and enterprise-grade scalability. Whether you're modernizing legacy systems or building AI-powered pipelines, we're ready to help.

Talk to our experts today and unlock your next phase of data evolution. Let’s cut costs, streamline your pipelines, and bring AI-driven insights to life.

Additional resources

1. Why are enterprises migrating to Databricks from legacy systems?

Enterprises migrate to Databricks to overcome limitations of legacy systems, such as poor scalability, siloed data, and high costs. Databricks offers a unified platform that combines data engineering, analytics, and machine learning in the cloud—resulting in faster data workflows, better collaboration, and lower infrastructure overhead for businesses aiming to modernize their data architecture.

2. What types of data systems can be migrated to Databricks?

You can migrate on-prem systems like Hadoop, SQL Server, Oracle, and flat files, as well as cloud storage from AWS, Azure, or GCP. Databricks supports structured, semi-structured, and unstructured data formats, making it suitable for transitioning data warehouses, lakes, ETL pipelines, and real-time processing tools into a unified analytics environment.

3. How long does a Databricks migration project take?

The duration depends on data volume, system complexity, and business priorities. A focused pilot can take 2–4 weeks, while enterprise-wide migrations may span 3–6 months. Breaking the migration into phases—assessment, planning, execution, and enablement—helps reduce risk and ensures smoother transitions with minimal disruption to day-to-day business operations.

4. What are the cost benefits of migrating to Databricks?

Databricks offers consumption-based pricing, autoscaling, and the elimination of legacy license fees. Organizations typically see up to 40% reduction in infrastructure and processing costs. Unified workflows also cut down operational overhead, making teams more efficient. These factors combine to deliver a significant return on investment over traditional or fragmented platforms.

5. How does Databricks improve data engineering and machine learning workflows?

Databricks consolidates data processing, analytics, and ML in one platform. Its support for Apache Spark, Delta Lake, and MLflow simplifies workflow orchestration. Teams can build, train, and deploy models faster using collaborative notebooks. This integrated environment speeds up time-to-insight and enables continuous machine learning development and deployment at scale.