Designing Autonomous Agents in Databricks

Designing Intelligent LLM Systems for Real-Time Decision Making

As organizations grapple with ever-growing volumes of data and rising expectations for real-time decision-making, agentic systems powered by large language models (LLMs) are emerging as a transformative solution.

Databricks stands out as a versatile platform, seamlessly integrating data processing, governance, and model deployment, making it easier to design and deploy intelligent agents.

This blog dives deep into what Agent Design means in Databricks, explores how it differs from traditional workflows, and walks through the key building blocks for creating LLM-powered agents that solve real business problems.

Understanding Agent Design in Databricks

At its core, Agent Design in Databricks refers to building intelligent, autonomous systems that use LLMs. These agents do not just respond; they also think, decide, and act. A typical agent can:

- Ingest and interpret all types of enterprise data (e.g., cost data, logs, user queries)

- Use reasoning and decision-making to determine next steps

- Call relevant tools or functions (e.g., SQL query builders, summarizers)

- Return insights or automate business actions

Rather than simply responding with static outputs, these agentic systems analyze, reason, and act, mirroring human-like problem solving within a governed and scalable architecture.

Agentic vs. Non-Agentic Systems

Before diving into the architecture, it's important to understand the fundamental difference between agentic and non-agentic systems.

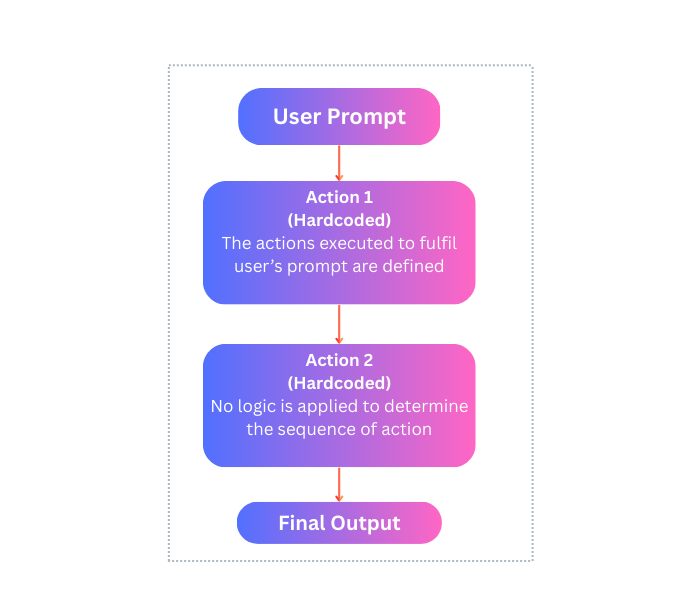

Non-Agentic Systems

A non-agentic flow follows a rule-based, hardcoded sequence of actions that remains constant regardless of context. Think of it as an automation pipeline where every step is predetermined:

- The workflow is linear

- Decisions are static

- Logic is non-adaptive

There’s no reasoning, no dynamic planning, just a sequence of tasks strung together.
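
To make this concrete, here is a minimal sketch of a non-agentic flow. The function names and the sample records are hypothetical, introduced only for illustration. The point is that the same three steps run in the same order for every request.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def load_costs() -> List[Record]:
    # Hypothetical sample data standing in for a real data source.
    return [
        {"service": "compute", "cost": 120.0},
        {"service": "storage", "cost": 45.5},
        {"service": "network", "cost": 10.0},
    ]


def total_by_service(rows: List[Record]) -> Dict[str, float]:
    # Sum the cost of every row per service.
    totals: Dict[str, float] = {}
    for row in rows:
        totals[row["service"]] = totals.get(row["service"], 0.0) + row["cost"]
    return totals


def format_report(totals: Dict[str, float]) -> str:
    # One line per service, sorted by service name.
    return "\n".join(f"{name}: {cost:.2f}" for name, cost in sorted(totals.items()))


def run_fixed_pipeline() -> str:
    # Every step is predetermined: load, aggregate, format. The user's
    # question never changes which steps run or in what order.
    return format_report(total_by_service(load_costs()))
```

Whatever the user asks, `run_fixed_pipeline()` produces the same kind of report. Changing its behavior means changing the code.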

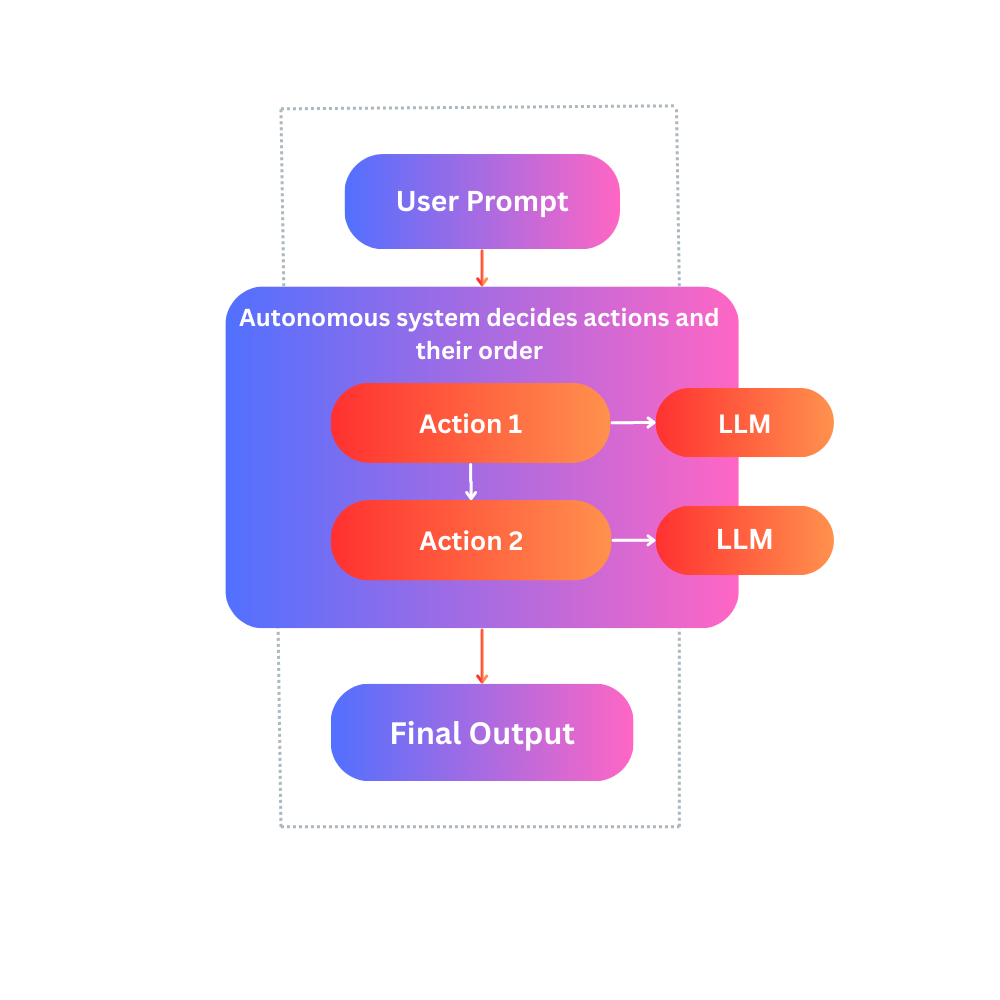

Agentic Systems

By contrast, an agentic system is dynamic and intelligent. It uses LLMs such as Llama 2 or Llama 3, OpenAI GPT-4, Mistral 7B, or Mixtral 8x7B to observe, reason, and decide the best course of action based on the current context. A speech-to-text model such as Whisper can sit in front of the LLM when the input is audio.

Key capabilities:

- Observes user input in real time

- Thinks and plans multiple possible paths

- Executes actions by selecting the most relevant tools

- Learns and adapts across inputs and use cases

- Follows system prompts, stays within defined guardrails, and maintains context-aware decision making
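
The capabilities above can be sketched as a small observe, plan, act loop. This is an illustration under stated assumptions, not a Databricks API: the two tools are hypothetical, and `choose_tool` is a keyword rule standing in for the LLM call that a real agent would make.

```python
from typing import Callable, Dict


# Hypothetical tools; in a real system these would query governed data.
def summarize_costs(query: str) -> str:
    return "summary: compute is the largest cost driver"


def list_stale_tables(query: str) -> str:
    return "stale tables: none found"


TOOLS: Dict[str, Callable[[str], str]] = {
    "summarize_costs": summarize_costs,
    "list_stale_tables": list_stale_tables,
}


def choose_tool(query: str) -> str:
    # Stand-in for the LLM's reasoning step. A real agent would send the
    # query and the tool descriptions to a model and read back its choice.
    text = query.lower()
    if "stale" in text or "unused" in text:
        return "list_stale_tables"
    return "summarize_costs"


def run_agent(query: str, decide: Callable[[str], str] = choose_tool) -> str:
    tool_name = decide(query)      # observe the input and plan a path
    if tool_name not in TOOLS:     # guardrail: only registered tools may run
        return "Sorry, I cannot help with that request."
    return TOOLS[tool_name](query)  # act by calling the selected tool
```

Unlike a fixed pipeline, the path taken here depends on the input, and the guardrail keeps the agent inside the set of tools it was given even if the decision step returns something unexpected.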

What Databricks Brings Together:

- Seamless Integration: Streaming, Delta, and AI work natively together without complex integrations.

- End-to-End Management: Governance, Automation, and Prompt Engineering are built-in, streamlining your workflows.

- Effortless Insights: Deliver data-driven insights through Chatbot interfaces, eliminating the need for complex dashboards.

- Complete Model Monitoring: Inference Tables offer full visibility into model performance and data pipelines.

- Smart Resource Management: Rate Limiting ensures efficient resource use, preventing overload.

- Accelerated Solutions: Databricks Solution Accelerators help speed up builds and reduce time to market.

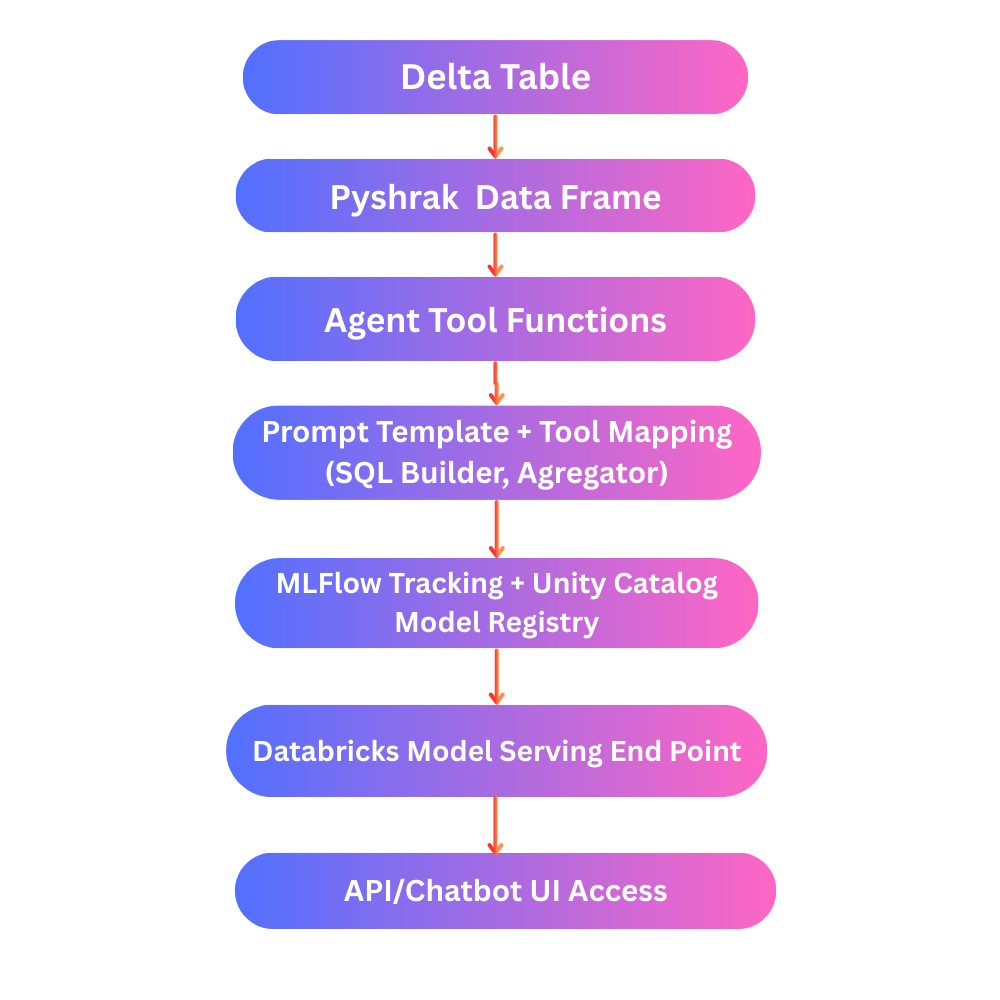

The Architecture of Agentic Systems in Databricks

Databricks provides a robust platform to implement agentic systems by combining structured data processing (via Delta Lake and PySpark) with modern MLOps tooling (MLflow, Unity Catalog) and LLM integration (OpenAI, Hugging Face, Mosaic).

Here’s a high-level overview of an agentic system architecture in Databricks:

1. Delta Table

Stores clean, structured data, such as cloud cost metrics, log files, or analytics events.

2. PySpark DataFrame

Reads and transforms Delta data to prepare for downstream tasks. Acts as the data access layer for the agent.
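
As a rough sketch of this data access layer, the helper below builds an aggregation query and runs it through `spark.sql`. The table name `main.finops.cloud_costs` and the columns `service`, `cost`, and `usage_date` are assumptions made for the example; substitute your own schema.

```python
import re
from datetime import date

# Up to three dot-separated identifiers, for example catalog.schema.table.
_TABLE_NAME = re.compile(r"[A-Za-z_]\w*(\.[A-Za-z_]\w*){0,2}")


def build_cost_query(table: str, start_date: str, limit: int = 10) -> str:
    # Validate inputs before interpolating them, since an agent may pass
    # values that originated from user text.
    if not _TABLE_NAME.fullmatch(table):
        raise ValueError(f"invalid table name: {table!r}")
    start = date.fromisoformat(start_date).isoformat()  # raises on bad dates
    # Column names below are assumptions for illustration.
    return (
        "SELECT service, SUM(cost) AS total_cost "
        f"FROM {table} "
        f"WHERE usage_date >= '{start}' "
        "GROUP BY service "
        "ORDER BY total_cost DESC "
        f"LIMIT {int(limit)}"
    )


def load_top_services(spark, table: str, start_date: str, limit: int = 10):
    # `spark` is the SparkSession that Databricks notebooks provide.
    # spark.sql returns a PySpark DataFrame the agent's tools can consume.
    return spark.sql(build_cost_query(table, start_date, limit))
```

In a notebook this could be called as `load_top_services(spark, "main.finops.cloud_costs", "2024-01-01")`. Keeping the query builder separate from the Spark call makes it easy to unit test without a cluster.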

3. Agent Tool Functions

Modular logic blocks that perform specific tasks:

- Query generation

- Data processing

- Information Summarization

- KPI metric extraction

These are callable "tools" that the agent can invoke based on its reasoning and the task at hand.
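
One simple way to organize such tools is a registry keyed by name. The decorator, registry, and tool functions below are hypothetical names chosen for this sketch; frameworks such as LangChain offer their own tool abstractions that play a similar role.

```python
from typing import Any, Callable, Dict, List

TOOL_REGISTRY: Dict[str, Dict[str, Any]] = {}


def tool(description: str) -> Callable:
    """Register a function as an agent tool under its own name."""
    def decorator(func: Callable) -> Callable:
        TOOL_REGISTRY[func.__name__] = {"description": description, "func": func}
        return func
    return decorator


@tool("Return the n services with the highest total cost.")
def top_services(rows: List[dict], n: int = 3) -> List[str]:
    totals: Dict[str, float] = {}
    for row in rows:
        totals[row["service"]] = totals.get(row["service"], 0.0) + row["cost"]
    # Sort service names by their summed cost, highest first.
    return sorted(totals, key=lambda name: totals[name], reverse=True)[:n]


@tool("Return the total cost across all rows.")
def total_cost(rows: List[dict]) -> float:
    return sum(row["cost"] for row in rows)


def call_tool(name: str, **kwargs: Any) -> Any:
    # Look the tool up by name, as the agent would after choosing it.
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return TOOL_REGISTRY[name]["func"](**kwargs)
```

The descriptions stored alongside each function are what the agent's prompt can expose to the LLM, so the model knows what each tool does without seeing its code.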

4. Prompt Template + Tool Mapping

A structured prompt that includes system-level instructions, available tools, their input/output formats, and any rules or constraints for the LLM to follow. This mapping ensures consistency and control in how the agent makes decisions.
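
A prompt template of this kind might look like the following sketch. The wording of the instructions, the tool names, and the JSON reply format are all assumptions made for illustration; the idea is only that the tool list is rendered into the system prompt from a single mapping.

```python
from string import Template
from typing import Dict

SYSTEM_TEMPLATE = Template(
    "You are a cost analysis assistant.\n"
    "Rules:\n"
    "- Use only the tools listed below.\n"
    '- Reply with JSON: {"tool": <name>, "args": <object>}.\n'
    "Available tools:\n"
    "$tool_list"
)

# Hypothetical tool specs: each entry describes input and output formats.
TOOL_SPECS: Dict[str, Dict[str, str]] = {
    "top_services": {"input": "n: int", "output": "list of service names"},
    "total_cost": {"input": "none", "output": "float"},
}


def render_system_prompt(tool_specs: Dict[str, Dict[str, str]]) -> str:
    # One line per tool, sorted by name so the prompt is deterministic.
    lines = [
        f"- {name}(input: {spec['input']}) -> {spec['output']}"
        for name, spec in sorted(tool_specs.items())
    ]
    return SYSTEM_TEMPLATE.substitute(tool_list="\n".join(lines))
```

Because the prompt is generated from `TOOL_SPECS`, adding or removing a tool changes what the model is told in one place, which helps keep the prompt and the actual tool set in sync.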

5. LLM (LLaMA-3 / MPT / OpenAI GPT)

Interprets the prompt and available tools. Uses reasoning to decide:

- What questions to ask the data

- Which tools to invoke

- What order to perform actions in
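
One way to handle the model's decision is to ask it for an ordered plan in JSON and validate that plan before running anything. The sketch below assumes a plan format of `[{"tool": ..., "args": {...}}, ...]`; the format, the tool names, and the hard-coded plan (standing in for real model output) are all hypothetical.

```python
import json
from typing import Any, Callable, Dict, List


def execute_plan(plan_json: str, tools: Dict[str, Callable[..., Any]]) -> List[Any]:
    """Run the steps of a model-produced plan in the order given."""
    steps = json.loads(plan_json)
    if not isinstance(steps, list):
        raise ValueError("plan must be a JSON list of steps")
    results = []
    for step in steps:
        if not isinstance(step, dict):
            raise ValueError("each step must be a JSON object")
        name = step.get("tool")
        if name not in tools:
            # Reject anything outside the allowed tool set.
            raise ValueError(f"plan references unknown tool: {name!r}")
        results.append(tools[name](**step.get("args", {})))
    return results


# Hypothetical tools and a hard-coded plan standing in for model output.
tools = {
    "filter_month": lambda month: f"rows for {month}",
    "summarize": lambda top_n: f"top {top_n} services",
}
plan = (
    '[{"tool": "filter_month", "args": {"month": "2024-05"}},'
    ' {"tool": "summarize", "args": {"top_n": 3}}]'
)
results = execute_plan(plan, tools)
```

Validating the plan before execution means a malformed or out-of-scope response from the model fails loudly instead of triggering an unintended action.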

6. MLflow

Tracks experiments, monitors the performance of agent models, and manages model lifecycles for consistent and reproducible results.

7. Unity Catalog

Applies access controls to enforce permissions, and governs and organizes data to streamline access and usage across teams.

8. Model Serving Endpoint

Deploy the agent as a RESTful API that can be integrated into apps, dashboards, or chatbots.
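
Calling the deployed agent from an application could look roughly like the sketch below, which only builds the HTTP request. The host, endpoint name, and token are placeholders, and the chat-style `messages` payload is an assumption: the exact request body depends on the signature the agent was logged with.

```python
import json
import urllib.request


def build_invocation_request(
    host: str, endpoint_name: str, token: str, question: str
) -> urllib.request.Request:
    # Model Serving endpoints are invoked at /serving-endpoints/<name>/invocations.
    url = f"{host.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    # Assumed payload shape for a chat-style agent; adjust to your model.
    payload = {"messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; nothing is sent until urlopen is called on the request.
request = build_invocation_request(
    "https://example.cloud.databricks.com/",
    "cost-agent",
    "TOKEN",
    "Which service cost the most last month?",
)
```

Passing `request` to `urllib.request.urlopen` would send it. In practice the token should come from a secret store rather than from source code.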

9. API / Chatbot UI

Acts as the user-facing interface. Users input natural language queries; the agent responds with interpreted, contextualized, and actionable insights.

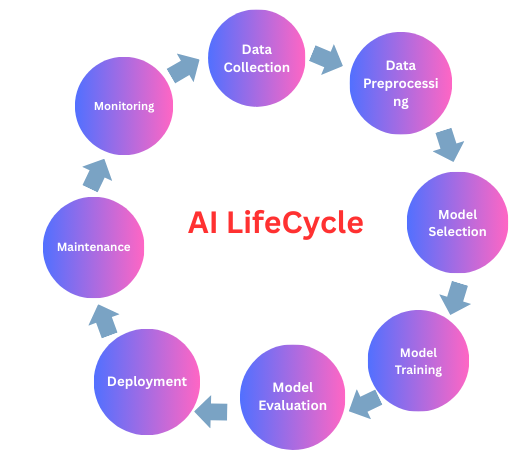

The Agent Loop in Databricks

A well-designed agent system in Databricks follows this loop to enable enterprise-grade decision intelligence that is automated and explainable:

- Analyze:

a. Data Preparation: Historical cost and usage data are read from Delta Lake using Spark for structured processing.

- Reason:

a. Agent Tooling: Custom agents operate on DataFrames and use prompt templates and system prompts to reason through the data.

- Act:

a. Model Management: Models are tracked via MLflow and governed using Unity Catalog for secure, organized management.

b. Model Serving: Exposed via REST API using Databricks Model Serving, enabling scalable, real-time access.

- Respond:

a. User Interaction: Users query the agent through a chatbot or API interface.

b. Insights Delivery: The agent delivers structured outputs like tables, summaries, and cost comparison charts.

Inside the Agent Workflow

1. Task Processing

- The agent reads the request and plans the flow, preparing to engage the necessary tools and resources.

2. Data Collection

- It pulls the required cost and usage data from integrated sources like AWS, Snowflake, and Databricks.

3. Data Analysis

- The agent applies ML models, performs trend analysis, and even conducts sentiment analysis if needed to gain deeper insights.

4. Report Generation

- The agent generates clear, actionable outputs such as tables, summaries, and cost comparison charts, ready to send back to the user.
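
The analysis and report steps can be illustrated with a small trend calculation. This sketch assumes monthly cost totals keyed by `YYYY-MM` strings; the function names and figures are made up for the example, and a real agent would compute the inputs from governed tables.

```python
from typing import Dict, List


def month_over_month(costs: Dict[str, float]) -> List[dict]:
    """Compute the percentage change between consecutive months."""
    months = sorted(costs)  # "YYYY-MM" keys sort chronologically
    rows = []
    for prev, curr in zip(months, months[1:]):
        if costs[prev] == 0:
            change = None  # percentage change is undefined from a zero base
        else:
            change = round((costs[curr] - costs[prev]) / costs[prev] * 100.0, 1)
        rows.append({"month": curr, "cost": costs[curr], "change_pct": change})
    return rows


def render_report(rows: List[dict]) -> str:
    # Render the trend rows as a markdown table for a chat response.
    lines = ["| Month | Cost | Change |", "|---|---|---|"]
    for row in rows:
        pct = row["change_pct"]
        change = "n/a" if pct is None else f"{pct:+.1f}%"
        lines.append(f"| {row['month']} | {row['cost']:.2f} | {change} |")
    return "\n".join(lines)


# Made-up monthly totals for illustration.
monthly_costs = {"2024-01": 100.0, "2024-02": 110.0, "2024-03": 99.0}
report = render_report(month_over_month(monthly_costs))
```

The resulting string is a small markdown table that a chatbot interface can display directly.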

What Makes This Powerful?

This architecture provides real-time, dashboard-ready outputs governed through Unity Catalog and served securely via APIs. It removes the manual effort of cost tracking, offering teams on-demand insights and enabling efficient decision-making.

Governed & Scalable

The entire pipeline is secure, automated, and fully governed on the Databricks Lakehouse, ensuring seamless scalability and data integrity.

Best Practices for Building Agentic Systems

Here are a few recommendations to follow when building agentic flows in Databricks:

- Use Unity Catalog: Manage model and tool access with fine-grained controls.

- Track with MLflow: Log runs, track metrics, and version your agents like any ML model.

- Apply System + Few-Shot Prompting: Combine consistent instructions with curated examples to guide reasoning.

- Wrap agent actions as LangChain Tools: Use LangChain’s function calling and routing capabilities to modularize logic.

- Test with Both Agentic and Non-Agentic Flows: Validate if dynamic reasoning improves performance over fixed workflows.
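
As an illustration of the system plus few-shot prompting recommendation, the sketch below assembles a chat message list from a system prompt and a handful of curated examples. The role and content layout follows the common chat-completions convention; the helper name and the example texts are hypothetical.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(
    system_prompt: str, examples: List[Tuple[str, str]], question: str
) -> List[Message]:
    # The system prompt comes first so its rules apply to every turn.
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    # Each curated example becomes a user turn followed by the ideal reply.
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The real question goes last, after the model has seen the pattern.
    messages.append({"role": "user", "content": question})
    return messages


# Hypothetical examples showing the reply format the agent should follow.
examples = [
    ("Top 2 services by cost?", '{"tool": "top_services", "args": {"n": 2}}'),
    ("What is our total spend?", '{"tool": "total_cost", "args": {}}'),
]
messages = build_messages(
    "Reply only with a JSON tool call.", examples, "Top 5 services by cost?"
)
```

Keeping the examples in a plain list makes them easy to version alongside the agent, so a change in the examples can be tracked like any other change to the model.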

Example Use Cases in Databricks

| Use Case | Agent Task |
|---|---|
| Cloud Cost Analysis | Summarize top services by cost |
| Marketing Analytics | Suggest campaign with highest ROI |
| ML Governance | Report models nearing expiry |
| Metadata Analysis | Detect stale or unused datasets |

These examples show how agentic systems can unlock value across operations, marketing, governance, and data discovery.

Final Thoughts

Agent Design in Databricks marks a new era of intelligent automation, one where systems don’t just execute instructions but interpret them, reason through them, and act with contextual intelligence.

By combining structured data processing with LLM-based reasoning, organizations can build agents that offer high-value insights, reduce operational load, and improve decision-making, all while remaining scalable, secure, and explainable.

With a maturing ecosystem of tools (LangChain, Llama 3, OpenAI, Unity Catalog, MLflow), the path to intelligent, agent-driven enterprises is already open.