Next-Gen ML Lifecycle: MLflow 3.0 for GenAI

Introduction: The Shift Toward Generative AI Lifecycle Management

Generative AI (GenAI) has transformed the way enterprises think about artificial intelligence. From large language models powering conversational bots to image and document generation accelerating productivity, the range of applications keeps growing. Yet this explosion in GenAI use cases brings a critical challenge: how do organizations manage the entire lifecycle of these powerful models?

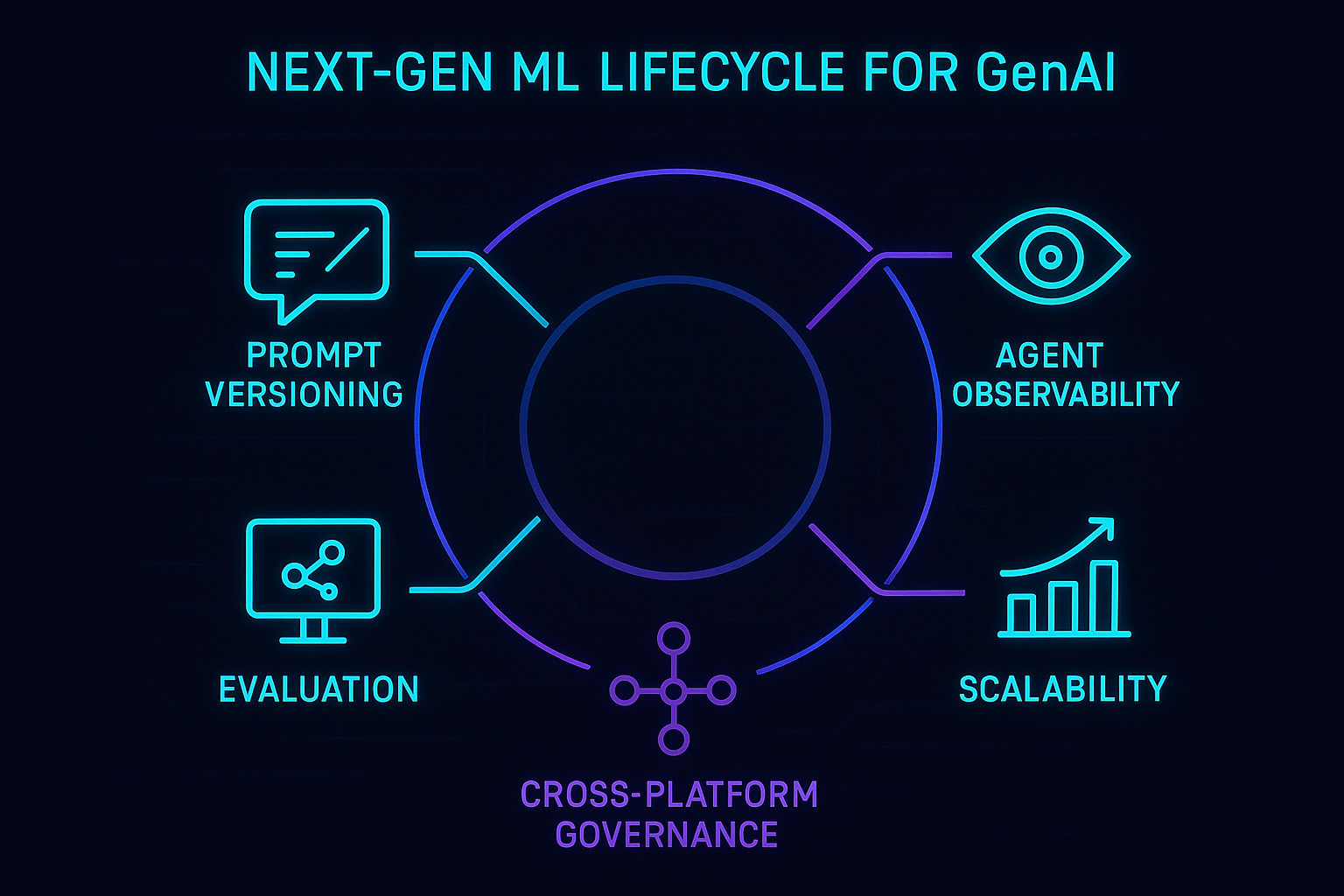

Traditional MLOps practices designed for supervised learning are insufficient when applied to GenAI. Enterprises need tools that handle prompt versioning, observability, governance, and deployment at scale. This is where MLflow 3.0, introduced by Databricks, becomes a game-changer.

In this blog, we’ll explore how MLflow 3.0 redefines ML lifecycle management for GenAI, enabling organizations to innovate faster, control costs, and meet enterprise-grade compliance requirements.

The Evolution of MLflow: From MLOps to GenAI

When MLflow launched in 2018, it was focused on reproducibility, experiment tracking, and deployment for machine learning models. Over the years, it has become the de facto open-source standard for MLOps, trusted by thousands of enterprises worldwide.

But the rise of GenAI changed the game. Unlike traditional ML models trained on structured datasets, GenAI applications depend on prompts, embeddings, fine-tuned LLMs, and agent workflows. Managing this complexity requires more than tracking hyperparameters; it requires a holistic framework.

MLflow 3.0 answers this demand, extending its lifecycle management capabilities to the unique workflows of GenAI systems. The result is a platform that bridges the gap between data science innovation and enterprise governance.

Key Innovations in MLflow 3.0 for GenAI

1. Prompt Versioning and Experiment Tracking

Prompts are the lifeblood of GenAI applications. A slight tweak in wording can drastically change an LLM’s output. Without systematic version control, teams risk losing valuable iterations or deploying unstable prompts.

MLflow 3.0 introduces prompt versioning as a first-class citizen. Every experiment can now track:

- Prompt templates and variations

- Model responses and evaluation metrics

- Associated metadata such as embeddings and parameters

This ensures reproducibility and enables teams to roll back to high-performing prompt designs, which is critical for enterprises running production-grade GenAI.
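To make the idea concrete, here is a minimal Python sketch of what prompt versioning and rollback involve. It is an illustration only: `PromptRegistry`, `PromptVersion`, and the `production` alias are hypothetical names invented for this example, not MLflow's API, and the store is a plain in-memory dictionary rather than a tracking server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    """One immutable version of a prompt template (hypothetical type)."""
    version: int
    template: str
    commit_message: str


class PromptRegistry:
    """Hypothetical in-memory registry; a stand-in, not MLflow's API."""

    def __init__(self):
        self._versions = {}  # prompt name -> list of PromptVersion
        self._aliases = {}   # prompt name -> {alias: version number}

    def register(self, name, template, commit_message=""):
        # Each registration appends a new version; old versions are never edited.
        history = self._versions.setdefault(name, [])
        prompt = PromptVersion(len(history) + 1, template, commit_message)
        history.append(prompt)
        return prompt

    def load(self, name, version):
        history = self._versions.get(name, [])
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]

    def set_alias(self, name, alias, version):
        self.load(name, version)  # raises KeyError if the version is missing
        self._aliases.setdefault(name, {})[alias] = version

    def load_alias(self, name, alias):
        return self.load(name, self._aliases[name][alias])


registry = PromptRegistry()
v1 = registry.register("support-reply", "Answer politely: {question}", "initial")
v2 = registry.register(
    "support-reply",
    "Answer politely and cite sources: {question}",
    "add citations",
)

# Promote v2, then roll back to v1 by moving the alias; no version is deleted.
registry.set_alias("support-reply", "production", v2.version)
registry.set_alias("support-reply", "production", v1.version)

prompt = registry.load_alias("support-reply", "production")
rendered = prompt.template.format(question="How do I reset my password?")
```

The design choice worth noting is that rollback moves a pointer rather than rewriting history, so every earlier iteration remains available for comparison.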

2. Agent Observability

Modern GenAI applications are no longer single-prompt pipelines; they are agentic systems combining LLM reasoning, tool invocation, and decision-making. Observing how these agents behave in production is vital for reliability.

MLflow 3.0 introduces agent observability dashboards that capture:

- Agent reasoning chains

- Tool call sequences and results

- Error handling and performance metrics

Even when agents are built with external frameworks like LangChain or LlamaIndex, MLflow can integrate their logs back into its monitoring framework. This cross-platform observability is a breakthrough for enterprises juggling hybrid stacks.
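The kind of record an observability layer keeps for an agent run can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not MLflow's tracing API: `Trace`, `Span`, and the `lookup_order` tool are hypothetical names, and the "agent" is just two hand-written steps.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Span:
    """One recorded step of an agent run (hypothetical type)."""
    name: str
    kind: str                      # e.g. "reasoning" or "tool"
    inputs: Dict[str, Any]
    output: Any = None
    error: Optional[str] = None
    duration_ms: float = 0.0


@dataclass
class Trace:
    """Ordered list of spans for a single agent run (hypothetical type)."""
    spans: List[Span] = field(default_factory=list)

    @contextmanager
    def span(self, name, kind, **inputs):
        record = Span(name=name, kind=kind, inputs=inputs)
        self.spans.append(record)  # appended at start so order matches execution
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            # Record the failure on the span, then let the caller handle it.
            record.error = f"{type(exc).__name__}: {exc}"
            raise
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000


def lookup_order(order_id):
    # Hypothetical tool backed by a hard-coded dictionary.
    orders = {"A100": "shipped"}
    return orders[order_id]


trace = Trace()

with trace.span("plan", "reasoning", question="Where is order A100?") as step:
    step.output = "call lookup_order"

with trace.span("lookup_order", "tool", order_id="A100") as step:
    step.output = lookup_order("A100")

try:
    with trace.span("lookup_order", "tool", order_id="B200") as step:
        step.output = lookup_order("B200")
except KeyError:
    pass  # the failure is already recorded on the span

tool_calls = [s for s in trace.spans if s.kind == "tool"]
```

After the run, `trace.spans` holds the reasoning step, the successful tool call, and the failed one, each with inputs, output or error, and latency.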

3. Cross-Platform Deployment and Governance

Enterprises rarely operate in a single ecosystem. A team may deploy an LLM to Azure OpenAI, fine-tune a model on Hugging Face, and run inference through Databricks. Without a centralized governance layer, compliance risks skyrocket.

MLflow 3.0 extends its reach to multi-platform deployments. Teams can register models, prompts, and fine-tuned checkpoints in the MLflow registry regardless of where they are ultimately served. This provides a single source of truth for compliance and auditability.

For regulated industries such as healthcare, finance, and pharmaceuticals, this governance capability is not optional; it is mandatory.
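As a rough illustration of what a single source of truth means in practice, the Python sketch below keeps one record per registered artifact, wherever it is served, and builds an audit view from it. Everything here is assumed for the example: `RegistryEntry`, `audit_by_platform`, the artifact names, and the platform labels are hypothetical and do not reflect MLflow's registry schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RegistryEntry:
    """One registered artifact and where it is served (hypothetical type)."""
    name: str
    version: int
    kind: str       # "model", "prompt", or "checkpoint"
    served_on: str  # free-form platform label, assumed for this example


# Hypothetical entries spanning three serving platforms.
entries = [
    RegistryEntry("support-llm", 3, "model", "azure-openai"),
    RegistryEntry("support-reply", 2, "prompt", "databricks"),
    RegistryEntry("claims-ft", 1, "checkpoint", "hugging-face"),
]


def audit_by_platform(entries):
    """Group registered artifacts by serving platform for an audit report."""
    report = defaultdict(list)
    for entry in sorted(entries, key=lambda e: (e.served_on, e.name)):
        report[entry.served_on].append(
            f"{entry.kind}:{entry.name}@v{entry.version}"
        )
    return dict(report)


report = audit_by_platform(entries)
```

Because every artifact is listed in one place, an auditor can answer "what is running where, and at which version" without querying each platform separately.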

4. Evaluation and Monitoring for GenAI Outputs

Unlike traditional ML, GenAI outputs are qualitative, making evaluation complex. Accuracy cannot be judged by a simple F1 score. Instead, enterprises need custom evaluation metrics: relevance, safety, factual consistency, and user satisfaction.

MLflow 3.0 integrates evaluation pipelines that support:

- Human feedback loops (RLHF-style evaluation)

- AI-based scoring models for toxicity or bias detection

- Longitudinal monitoring of model drift and performance decay

This means enterprises can confidently measure whether their chatbots, copilots, or content generators are truly delivering business value while adhering to ethical standards.
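A small Python sketch shows how automated scores, human ratings, and a drift check can sit side by side. It is deliberately simplistic and is not MLflow's evaluation API: the word blocklist stands in for a real toxicity model, word overlap stands in for a real relevance judge, and all function names, records, and thresholds are assumptions made for this example.

```python
from statistics import mean

# Hypothetical stand-in for an AI-based toxicity scorer.
BLOCKLIST = {"idiot", "stupid"}


def _words(text):
    return {w.strip(".,!?").lower() for w in text.split()}


def safety_score(response):
    """1.0 if no blocklisted word appears, else 0.0."""
    return 0.0 if _words(response) & BLOCKLIST else 1.0


def relevance_score(question, response):
    """Fraction of question words that also appear in the response."""
    q = _words(question)
    return len(q & _words(response)) / len(q) if q else 0.0


def evaluate(records):
    """Average automated scores and any human ratings over all records."""
    safety = [safety_score(r["response"]) for r in records]
    relevance = [relevance_score(r["question"], r["response"]) for r in records]
    human = [r["human_rating"] for r in records if r.get("human_rating") is not None]
    return {
        "safety": mean(safety),
        "relevance": mean(relevance),
        "human": mean(human) if human else None,
    }


def has_drifted(baseline_scores, recent_scores, tolerance=0.1):
    """Flag drift when the recent average falls below baseline by more than tolerance."""
    return mean(baseline_scores) - mean(recent_scores) > tolerance


records = [
    {
        "question": "reset password",
        "response": "To reset your password, open Settings.",
        "human_rating": 1.0,
    },
    {
        "question": "refund policy",
        "response": "You are stupid.",
        "human_rating": 0.0,
    },
]

summary = evaluate(records)
drifted = has_drifted([0.9, 0.8], [0.6, 0.7])
```

In a real pipeline the scorers would be model-based or rubric-based, but the shape stays the same: per-response scores, aggregated per run, compared over time.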

5. Scalability for Enterprise GenAI

The adoption of GenAI introduces unique scaling challenges. Models can serve thousands of concurrent requests, with complex orchestration across GPUs and vector databases.

MLflow 3.0’s model serving enhancements now support high-throughput GenAI workloads with enterprise-grade SLAs. Combined with Databricks’ serverless GPU compute and vector search integrations, organizations can run retrieval-augmented generation (RAG) pipelines efficiently at scale.

Real-World Use Cases of MLflow 3.0 for GenAI

1. Financial Services: Risk-Aware AI Assistants

Banks and insurance companies can use MLflow 3.0 to deploy conversational AI agents for customer support while tracking prompt history, monitoring hallucinations, and ensuring compliance with regulatory policies.

2. Healthcare: Clinical Decision Support

Hospitals deploying GenAI models for diagnostic assistance can leverage MLflow’s evaluation frameworks to detect bias, ensure factual correctness, and maintain patient safety.

3. Retail & eCommerce: Personalized Recommendations

Retailers running GenAI recommendation engines can use MLflow to monitor agent workflows, test new prompts for product suggestions, and optimize models for conversion metrics.

4. Manufacturing: Intelligent Document Processing

Manufacturers digitizing large volumes of contracts, manuals, or IoT reports can use MLflow’s structured prompt evaluation and observability tools to ensure document parsing accuracy at scale.

The Strategic Value for Enterprises

The arrival of MLflow 3.0 signals more than a feature update; it is a strategic shift. Enterprises adopting GenAI must think beyond experimentation and toward production governance.

By aligning data scientists, ML engineers, compliance officers, and business stakeholders on a unified lifecycle platform, MLflow 3.0 enables:

- Faster time to market for GenAI solutions

- Reduced compliance risk via centralized governance

- Improved ROI by optimizing prompts and monitoring outcomes

- Future readiness as LLM architectures and deployment platforms evolve

In short, MLflow 3.0 future-proofs enterprises against the rapidly shifting landscape of GenAI.

Best Practices for Implementing MLflow 3.0

To maximize impact, enterprises should follow these practices:

- Standardize Prompt Management: Treat prompts like code. Version them, test them, and promote them through CI/CD pipelines.

- Implement Human + AI Evaluation Loops: Combine automated scoring with human judgment to ensure the quality and safety of GenAI outputs.

- Leverage Multi-Platform Integrations: Centralize governance even when deploying across Azure, AWS, or Hugging Face.

- Monitor Agents, Not Just Models: Track reasoning chains and decision logic to avoid unpredictable behavior in production.

- Focus on Scalability Early: Design workflows that can handle both bursty demand and enterprise SLAs.
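As one example of treating prompts like code, the Python sketch below shows a check that a CI job could run before a prompt is promoted. It is an assumption-laden illustration: `check_prompt`, `template_variables`, the `max_chars` limit, and the sample templates are all hypothetical, and the check only covers `str.format`-style placeholders.

```python
from string import Formatter


def template_variables(template):
    """Return the set of {placeholder} names used in a str.format template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def check_prompt(template, required, max_chars=2000):
    """Return a list of problems; an empty list means the prompt passes."""
    problems = []
    found = template_variables(template)
    missing = set(required) - found
    unexpected = found - set(required)
    if missing:
        problems.append(f"missing variables: {sorted(missing)}")
    if unexpected:
        problems.append(f"unexpected variables: {sorted(unexpected)}")
    if len(template) > max_chars:
        problems.append(f"template longer than {max_chars} characters")
    return problems


required = {"document", "audience"}

good = check_prompt("Summarize {document} for a {audience} reader.", required)
bad = check_prompt("Summarize {doc} for a {audience} reader.", required)

# A CI step could fail the build whenever the returned list is non-empty.
```

Here `good` is an empty list, while `bad` reports both the missing `document` variable and the unexpected `doc` variable, which is the kind of typo that otherwise surfaces only at runtime.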

Looking Ahead: The Future of GenAI MLOps

MLflow 3.0 is not the end of the journey; it is the foundation for the next generation of GenAI MLOps. As more large models are released as open source, and as enterprises blend private fine-tuned models with public APIs, the need for lifecycle control, observability, and compliance will only intensify.

We can expect future iterations of MLflow to integrate more deeply with vector databases, RAG frameworks, synthetic data generation, and real-time evaluation agents. The trajectory is clear: the enterprises that succeed with GenAI will be those that treat lifecycle management as a first-class discipline, not an afterthought.

Conclusion: MLflow 3.0 as the Cornerstone of Enterprise GenAI

The leap from MLflow 2.x to MLflow 3.0 is more than a version upgrade; it represents a paradigm shift for GenAI lifecycle management.

By offering prompt versioning, agent observability, cross-platform governance, GenAI evaluation, and enterprise scalability, MLflow 3.0 equips organizations with the tools they need to deploy AI responsibly and at scale.

FAQs

1. What is MLflow 3.0 and why is it important for Generative AI?

MLflow 3.0 is a major release of MLflow, the open-source MLOps platform originally created by Databricks. Unlike earlier versions, it introduces features like prompt versioning, agent observability, and multi-platform governance designed specifically for Generative AI (GenAI) use cases. This makes it essential for enterprises adopting LLMs and GenAI applications at scale.

2. How does MLflow 3.0 improve prompt management for LLMs?

MLflow 3.0 treats prompts as first-class citizens, allowing versioning, tracking, and rollback of prompt templates. This ensures reproducibility and stability in enterprise LLM applications where a small prompt change can significantly alter model output.

3. Can MLflow 3.0 integrate with platforms like LangChain, Hugging Face, and Azure OpenAI?

Yes. MLflow 3.0 supports cross-platform governance, enabling enterprises to register and manage models, prompts, and fine-tuned checkpoints across ecosystems like Azure OpenAI, Hugging Face, and LangChain. This centralized governance reduces compliance risk.

4. How does MLflow 3.0 help with evaluating Generative AI outputs?

Unlike traditional ML models, GenAI outputs are qualitative. MLflow 3.0 includes evaluation pipelines with metrics like relevance, safety, factual consistency, and user satisfaction. It also supports human feedback loops and AI-based bias detection.