Why Edge AI with Multi-Cloud is a Game-Changer

What if you want to scale up, ensure backup, and tap into hybrid intelligence? This is where Multi-Cloud steps in. By blending Edge AI with platforms like AWS, Azure, or Google Cloud, you unlock incredible benefits:

✅ Instant decisions (thanks to Edge AI)

✅ Effortless data backup and scaling (through Multi-Cloud)

✅ Savings on costs (due to less reliance on the cloud)

Curious about how it all works? Let’s break it down together, starting with a brief introduction to Edge AI.

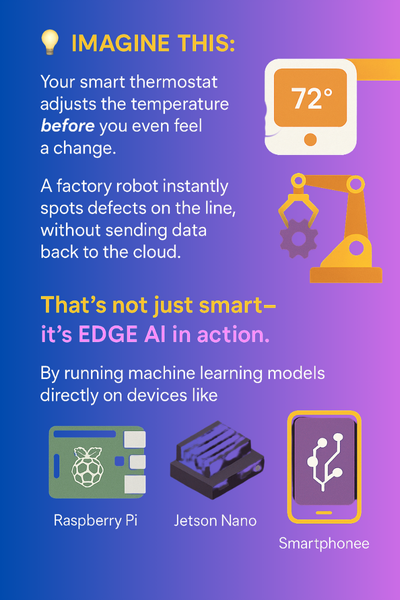

1. What is Edge AI?

Edge AI means running AI models directly on embedded devices instead of sending data to the cloud. Think:

- Smart cameras detecting intruders instantly

- Wearable health monitors predicting heart issues in real time

- Autonomous drones avoiding obstacles without network latency

How Multi-Cloud Fits In

While Edge AI handles instant decisions, Multi-Cloud helps with:

- Training & retraining models (using cloud GPUs)

- Storing and synchronizing edge data (AWS S3, Azure Blob)

- Hybrid deployments (some AI on-edge, some in-cloud)
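One common way to split work between edge and cloud is confidence-based routing: the device answers on its own when its local model is confident and defers to a larger cloud model otherwise. The sketch below is only an illustration. The 0.8 threshold and the `toy_edge` / `toy_cloud` callables are hypothetical stand-ins, not part of any real SDK.

```python
from typing import Callable, Sequence, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def route_inference(
    sample: Sequence[float],
    edge_model: Callable[[Sequence[float]], Prediction],
    cloud_model: Callable[[Sequence[float]], Prediction],
    threshold: float = 0.8,  # hypothetical cut-off; tune per application
) -> Tuple[str, str]:
    """Return (label, source), where source is 'edge' or 'cloud'."""
    label, confidence = edge_model(sample)
    if confidence >= threshold:
        return label, "edge"  # fast path: no network round-trip
    # Low confidence: fall back to the larger cloud-hosted model.
    cloud_label, _ = cloud_model(sample)
    return cloud_label, "cloud"


# Stand-in models for illustration only.
def toy_edge(sample: Sequence[float]) -> Prediction:
    return ("person", 0.95) if sum(sample) > 1.0 else ("unknown", 0.40)


def toy_cloud(sample: Sequence[float]) -> Prediction:
    return ("cat", 0.99)


print(route_inference([0.9, 0.8], toy_edge, toy_cloud))  # ('person', 'edge')
print(route_inference([0.1, 0.2], toy_edge, toy_cloud))  # ('cat', 'cloud')
```

In a real deployment the cloud call would be a network request, so the threshold also controls how much bandwidth and cloud compute the device consumes.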

2. Key Challenges (And How to Solve Them)

Challenge 1: Tiny Devices, Big AI Models

Edge devices have limited RAM and CPU. Solution?

- Model Quantization (shrinking models from FP32 → INT8)

- Pruning (removing unnecessary neural connections)

- TinyML (TensorFlow Lite, PyTorch Mobile)
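To make the FP32 → INT8 idea concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. Each weight is stored as one signed byte plus a single shared scale, which is roughly a 4x size reduction compared with 4-byte floats. This is a simplification: toolchains such as TensorFlow Lite also quantize activations and typically use finer-grained scales, and the function names here are made up for illustration.

```python
from typing import List, Tuple


def quantize_symmetric_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = (max_abs / 127.0) or 1.0  # fall back to 1.0 for all-zero input
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


weights = [-1.0, 0.0, 0.42, 1.0]
q, scale = quantize_symmetric_int8(weights)
restored = dequantize(q, scale)

print("quantized:", q)
print("scale:", scale)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

The round-trip error is bounded by half the scale, which is why quantization usually costs only a small amount of accuracy when the weight range is not dominated by outliers.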

Challenge 2: Real-Time Processing Needs

Cloud latency can be deadly (e.g., self-driving cars). Fix?

- On-device inferencing (no round-trip to cloud)

- Federated Learning (train locally, update globally)
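The "train locally, update globally" step of federated learning is often implemented as federated averaging: each device sends back its updated weights, and the server combines them, weighted by how much local data each device trained on. A minimal sketch, assuming weights are flat lists of floats and using made-up example values:

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Weighted mean of client weight vectors, weighted by local sample count."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    averaged = [0.0] * len(client_weights[0])
    for weights, size in zip(client_weights, client_sizes):
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


# Three hypothetical devices with different amounts of local data.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [10, 10, 20]
print(federated_average(updates, sizes))  # [3.5, 4.5]
```

Only the weight vectors leave the device in this scheme; the raw training data stays local.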

Challenge 3: Security Risks

Hacking an edge device = big trouble. Protect with:

- Secure Boot & TEEs (Trusted Execution Environments)

- Encrypted OTA Updates (no tampering)
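The "no tampering" part of an OTA update comes down to the device checking an integrity tag before it installs anything. The sketch below uses an HMAC-SHA256 tag from Python's standard library. It is a simplification under stated assumptions: the shared key and model bytes are placeholders, and production systems more commonly use asymmetric signatures so that devices hold only a public key.

```python
import hashlib
import hmac


def sign_update(payload: bytes, key: bytes) -> str:
    """Cloud side: compute an HMAC-SHA256 tag for the update payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_update(payload: bytes, tag: str, key: bytes) -> bool:
    """Device side: accept the payload only if the tag matches."""
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)  # constant-time comparison


key = b"demo-key-do-not-use"          # hypothetical; keep real keys in secure storage
model_blob = b"\x00fake-model-bytes"  # stand-in for a .tflite file
tag = sign_update(model_blob, key)

print(verify_update(model_blob, tag, key))         # True
print(verify_update(model_blob + b"!", tag, key))  # False: payload was altered
```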

3. Step-by-Step Guide: Deploying ML Models on Edge Devices Using Multi-Cloud

Deploying machine learning (ML) models on edge devices via a multi-cloud approach involves training in the cloud, optimizing for edge constraints, and managing deployments across multiple platforms. Below is a detailed, step-by-step breakdown of the entire process.

Step 1: Train the Model in the Cloud

Since edge devices lack the computational power for training, ML models are first developed and trained in the cloud.

1.1 Choose a Cloud ML Platform

- Google Cloud Vertex AI (Best for AutoML & custom training)

- AWS SageMaker (Strong integration with IoT services)

- Azure Machine Learning (Good for enterprise deployments)

1.2 Train the Model

- Use high-performance GPUs/TPUs for faster training.

- Leverage pre-trained models (e.g., ResNet, YOLO) for transfer learning.

- Store your datasets in cloud storage (S3, Google Cloud Storage, Azure Blob).

Example (AWS SageMaker):

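    import sagemaker
    from sagemaker.tensorflow import TensorFlow

    # Configure a managed TensorFlow training job.
    estimator = TensorFlow(
        entry_point='train.py',                # training script
        role=sagemaker.get_execution_role(),   # IAM role; resolves inside SageMaker notebooks
        instance_count=1,
        instance_type='ml.p3.2xlarge',         # GPU instance
        framework_version='2.5',
        py_version='py37'
    )

    # Start training; the 'train' channel points at the dataset in S3.
    estimator.fit({'train': 's3://my-bucket/training-data'})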

1.3 Export the Trained Model

- Save in TensorFlow (SavedModel), PyTorch (.pt), or ONNX format.

- Store in cloud storage for further optimization.

Step 2: Optimize the Model for Edge Deployment

Edge devices have limited RAM, storage, and compute, so models must be optimized.

2.1 Quantization (Reduce Precision)

- Convert FP32 → INT8 to shrink model size and speed up inference.

- Tools: TensorFlow Lite, ONNX Runtime.

Example (TensorFlow Lite Quantization):

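    import tensorflow as tf

    # Load the exported SavedModel and apply the default optimization.
    converter = tf.lite.TFLiteConverter.from_saved_model('saved_model')
    # Without a representative dataset, DEFAULT applies dynamic-range
    # quantization: weights are stored as 8-bit, activations stay float.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    tflite_model = converter.convert()

    # Write the quantized model to disk.
    with open('model_quant.tflite', 'wb') as f:
        f.write(tflite_model)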
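For accelerators that only execute 8-bit integer operations (the Edge TPU is one), the converter also needs a representative dataset so it can calibrate activation ranges and produce a fully integer model. The function below is a sketch of that flow using the TensorFlow Lite converter. The `sample_batches` callable is a hypothetical helper you would supply, and the choice of `tf.int8` for inputs and outputs is an assumption that depends on your target runtime.

```python
from typing import Callable, Iterable


def convert_full_int8(saved_model_dir: str,
                      sample_batches: Callable[[], Iterable]) -> bytes:
    """Convert a SavedModel to a fully INT8-quantized TFLite flatbuffer.

    `sample_batches` is a hypothetical zero-argument callable that yields
    float32 arrays shaped like the model input; they are used only to
    calibrate activation ranges.
    """
    import tensorflow as tf  # imported lazily so this sketch loads without TF

    def representative_dataset():
        for batch in sample_batches():
            yield [batch]  # one list entry per model input

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset
    # Fail conversion if any op cannot be expressed with INT8 kernels.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.int8
    converter.inference_output_type = tf.int8
    return converter.convert()
```

A typical call would pass the SavedModel directory and a generator over a few hundred real input samples, then write the returned bytes to a `.tflite` file.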

2.2 Pruning (Remove Unnecessary Weights)

- Remove low-importance weights (or entire neurons and channels) to reduce model size.

- Tools: TensorFlow Model Optimization Toolkit.
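The core idea behind magnitude pruning fits in a few lines: rank weights by absolute value and zero out the smallest fraction. This plain-Python sketch is a one-shot illustration with made-up weights. Toolkits such as the TensorFlow Model Optimization Toolkit apply the same idea gradually during training so the network can recover accuracy.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(layer, sparsity=0.5))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Note that zeroed weights only shrink the stored model once it is compressed or kept in a sparse format.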

2.3 Compile for Edge Hardware

- Google Coral Edge TPU: Compile with edgetpu_compiler.

- NVIDIA Jetson: Convert to TensorRT.

- AWS DeepLens: Optimize with AWS SageMaker Neo.

Example (Google Coral Compilation):

    edgetpu_compiler model_quant.tflite -o edge_tpu_model

Step 3: Deploy to Edge Devices via Multi-Cloud

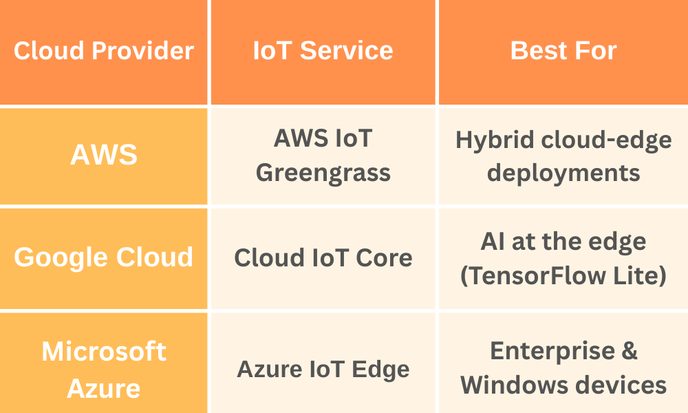

The optimized model is now deployed to edge devices using multi-cloud IoT services.

3.1 Choose a Multi-Cloud IoT Platform

3.2 Register & Manage Edge Devices

- Each cloud provider has a device registry to authenticate and manage edge devices.

Example (AWS IoT Core Device Registration):

- Create a Thing in AWS IoT Core.

- Generate X.509 certificates for secure communication.

- Install AWS IoT Greengrass Core on the edge device.
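The first two registration steps can also be scripted. The sketch below wraps three AWS IoT API calls (`create_thing`, `create_keys_and_certificate`, `attach_thing_principal`) in one helper. It assumes `iot_client` behaves like `boto3.client("iot")`; the helper name and the thing name in the usage note are hypothetical, and attaching an IoT policy to the certificate is left out for brevity.

```python
from typing import Any, Dict


def register_device(iot_client: Any, thing_name: str) -> Dict[str, str]:
    """Create a Thing, issue an X.509 certificate, and link the two.

    `iot_client` is expected to behave like `boto3.client("iot")`.
    """
    iot_client.create_thing(thingName=thing_name)
    cert = iot_client.create_keys_and_certificate(setAsActive=True)
    iot_client.attach_thing_principal(
        thingName=thing_name,
        principal=cert["certificateArn"],
    )
    # The private key is returned only once; store it securely on the device.
    return {
        "certificateArn": cert["certificateArn"],
        "certificatePem": cert["certificatePem"],
        "privateKey": cert["keyPair"]["PrivateKey"],
    }
```

With boto3 installed and credentials configured, a call might look like `register_device(boto3.client("iot"), "edge-camera-01")`. Passing the client in as a parameter also makes the helper easy to exercise with a stub.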

3.3 Deploy the Model via Cloud-to-Edge Pipeline

- AWS: Use Greengrass ML Inference to push models to devices.

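- Google Cloud: Cloud IoT Core was retired in August 2023, so delivering models to a Coral Dev Board now requires a partner IoT platform or a custom pipeline (for example, devices pulling the model from Cloud Storage).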

- Azure: Deploy via Azure IoT Edge Modules.

Example (Azure IoT Edge Deployment):

    {
      "modules": {
        "mlmodule": {
          "version": "1.0",
          "type": "docker",
          "status": "running",
          "settings": {
            "image": "myregistry.azurecr.io/ml_model:latest"
          }
        }
      }
    }

Step 4: Run Inference on Edge Devices

Once deployed, the model runs locally on the device without needing cloud connectivity.

4.1 Load the Model on the Device

Example (Raspberry Pi + TensorFlow Lite):

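    import numpy as np
    import tflite_runtime.interpreter as tflite

    # CPU inference with the quantized model. An Edge TPU-compiled file
    # (*_edgetpu.tflite) would additionally need the Edge TPU delegate.
    interpreter = tflite.Interpreter(model_path='model_quant.tflite')
    interpreter.allocate_tensors()

    # Get input/output details
    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # Placeholder input matching the model's expected shape and dtype;
    # replace with a real preprocessed frame or sensor reading.
    input_data = np.zeros(input_details[0]['shape'], dtype=input_details[0]['dtype'])

    # Run inference
    interpreter.set_tensor(input_details[0]['index'], input_data)
    interpreter.invoke()
    result = interpreter.get_tensor(output_details[0]['index'])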

4.2 Handle Real-Time Data

- Cameras: Process video frames locally.

- Sensors: Analyze IoT data in real time.

4.3 Sync with Cloud for Analytics (Optional)

- Send aggregated insights (not raw data) to the cloud for storage.

- Use AWS Lambda, Google Cloud Functions, or Azure Functions for post-processing.
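Sending aggregated insights instead of raw data can be as simple as collapsing a window of per-frame results into one small JSON message. A minimal sketch, assuming each detection is a dict with `label` and `confidence` keys; the field names and device ID are illustrative, not a provider-defined schema.

```python
import json
from collections import Counter
from statistics import mean
from typing import Dict, List


def summarize_window(detections: List[Dict], device_id: str) -> str:
    """Collapse per-frame detections into one compact JSON message."""
    label_counts = Counter(d["label"] for d in detections)
    mean_confidence = (
        round(mean(d["confidence"] for d in detections), 3) if detections else None
    )
    summary = {
        "device_id": device_id,
        "frames": len(detections),
        "label_counts": dict(label_counts),
        "mean_confidence": mean_confidence,
    }
    return json.dumps(summary, sort_keys=True)


window = [
    {"label": "person", "confidence": 0.9},
    {"label": "person", "confidence": 0.8},
    {"label": "car", "confidence": 0.7},
]
print(summarize_window(window, device_id="edge-camera-01"))
```

The resulting string is what the device would publish (for example over MQTT) for a cloud function to post-process, in place of the raw frames.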

Step 5: Monitor & Update Models

5.1 Remote Monitoring

- AWS IoT Device Management

- Google Cloud Monitoring

- Azure IoT Hub Metrics
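Whichever monitoring service you choose, the device has to produce the metrics first. One metric worth tracking is inference latency. The sketch below keeps a rolling window of timings and reports a nearest-rank percentile; the class name and window size are hypothetical, and `sum(range(...))` stands in for a real `interpreter.invoke()` call.

```python
import time
from collections import deque
from typing import Callable, Deque


class LatencyMonitor:
    """Keep the most recent latencies and report nearest-rank percentiles."""

    def __init__(self, window: int = 100) -> None:
        self.samples_ms: Deque[float] = deque(maxlen=window)

    def timed(self, fn: Callable, *args):
        """Run fn(*args), record how long it took, and return its result."""
        start = time.perf_counter()
        result = fn(*args)
        self.samples_ms.append((time.perf_counter() - start) * 1000.0)
        return result

    def percentile(self, pct: int) -> float:
        ordered = sorted(self.samples_ms)
        if not ordered:
            raise ValueError("no samples recorded yet")
        rank = (pct * len(ordered) + 99) // 100  # ceil(pct/100 * n) in integer math
        return ordered[max(rank, 1) - 1]


monitor = LatencyMonitor(window=50)
for _ in range(20):
    monitor.timed(sum, range(10_000))  # stand-in for interpreter.invoke()
print(f"p95 latency: {monitor.percentile(95):.3f} ms")
```

Reporting a percentile rather than the mean makes slow outliers visible, which matters for real-time workloads.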

5.2 Over-the-Air (OTA) Updates

- Push new model versions automatically.

- Rollback if errors occur.
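On the device side, "push and roll back" usually means keeping the last known-good model until the new one passes a health check. A minimal sketch using only the standard library; the function name, file suffixes, and `health_check` callable are hypothetical and would be replaced by whatever validation fits your model (for example, running inference on a known input).

```python
import os
import shutil
import tempfile
from typing import Callable


def install_with_rollback(
    new_model: str,
    active_model: str,
    health_check: Callable[[str], bool],
) -> bool:
    """Swap in `new_model`; restore the previous file if the check fails."""
    backup = active_model + ".bak"
    had_previous = os.path.exists(active_model)
    if had_previous:
        shutil.copy2(active_model, backup)
    # Copy next to the target first so the final swap is an atomic rename.
    staged = active_model + ".staged"
    shutil.copy2(new_model, staged)
    os.replace(staged, active_model)
    if health_check(active_model):
        return True
    if had_previous:
        os.replace(backup, active_model)  # roll back to the last good model
    else:
        os.remove(active_model)
    return False


# Demo with throwaway files standing in for model versions.
workdir = tempfile.mkdtemp()
active = os.path.join(workdir, "model.tflite")
update = os.path.join(workdir, "model_v2.tflite")
with open(active, "wb") as f:
    f.write(b"v1")
with open(update, "wb") as f:
    f.write(b"v2")

print(install_with_rollback(update, active, health_check=lambda path: False))  # False
with open(active, "rb") as f:
    print(f.read())  # b'v1' (previous model restored)
```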

Example (AWS IoT Greengrass V2 OTA update; the target ARN is a placeholder):

    aws greengrassv2 create-deployment --target-arn "arn:aws:iot:<region>:<account-id>:thinggroup/MyGreengrassGroup" --components '{"ml_model": {"componentVersion": "2.0.0"}}'

4. Real-World Use Cases Where Edge AI and Cloud Deliver Maximum Impact

- Smart Cities – Traffic cameras detecting accidents in real-time.

- Industry 4.0 – Predictive maintenance on factory machines.

- Healthcare – Wearables alerting doctors before emergencies.

5. Future Trends (What’s Next?)

- 5G + Edge AI = Ultra-low latency applications

- Neuromorphic Chips = Brain-like efficiency

- Serverless Edge = Pay-as-you-go AI inferencing

Final Thoughts

Edge AI + Multi-Cloud is more than hype: by running AI locally while leveraging the cloud for training, storage, and scalability, businesses get speed, efficiency, and reliability.

Want to try it yourself? Start with a Raspberry Pi + TensorFlow Lite + AWS IoT Core. The possibilities are endless!

FAQs

1. How can enterprises scale Edge AI across global operations?

By using container orchestration (like Kubernetes) and Multi-Cloud IoT platforms, enterprises can deploy and manage AI workloads uniformly across thousands of edge nodes while ensuring centralized policy control and performance monitoring.

2. What ROI can enterprises expect from Edge AI with Multi-Cloud?

ROI comes from reduced cloud compute costs, faster decision-making, lower bandwidth usage, and improved customer experiences, especially in sectors like manufacturing, retail, and logistics where real-time AI decisions drive operational efficiency.

3. How do large organizations ensure security at the edge?

Enterprises use hardware-level encryption, zero-trust models, and secure boot protocols. Integrating with Multi-Cloud identity services ensures role-based access, anomaly detection, and audit logging across edge and cloud endpoints.

4. What governance practices are vital for Edge AI in enterprises?

Effective governance includes data lineage tracking, model version control, and compliance monitoring. Tools like Microsoft Purview (formerly Azure Purview) or Google Cloud's Data Catalog help manage metadata and enforce privacy policies across hybrid environments.

5. Can Edge AI integrate with enterprise data lakes or warehouses?

Yes. Aggregated insights from edge devices can be securely synced with enterprise data lakes or warehouses (e.g., Amazon Redshift, BigQuery, or Azure Synapse) for deep analytics, reporting, and strategic decision-making.